This is the year of AI hardware, and it’s already been a disappointment. It began with the launch of the poorly designed Humane AI Pin, which seems to have crashed and burned out of the gate. And now, as I and other journalists have tried the Rabbit R1, it doesn’t seem to be inspiring much more confidence in this first wave of AI gadgets.

The Rabbit R1 broke out as an unexpected success story in January 2024. It wasn’t here promising the second coming of the smartphone like Humane. It was more like an AI toy, a gadget designed to explore the future while Rabbit established its longer-term business model.

Developed by Jesse Lyu, who sold his last AI startup to Baidu, in conjunction with Teenage Engineering, the $200 Rabbit R1 has drawn 100,000 pre-orders since its January announcement. (You can read our interview with Lyu, who offered thoughtful perspective on his product, here.)

Just last week, the first Rabbit R1 units shipped to the public, and despite all sorts of teased functionality (ranging from editing spreadsheets to programming autonomous AI agents), there’s simply very little it can actually do today. I wasn’t expecting the Rabbit R1 to be a smartphone replacement, but I’ve found that it’s not even fun as a sandbox to explore the future of AI. Not yet, at least.

My concern is that the Rabbit R1 simply launched too early to realize its own refreshingly playful potential. Hardware gets one shot to make an impression; otherwise, it goes into a drawer, never to be seen again.

Rabbit’s POV

The R1 has a bit of a split personality. It features toy-like industrial design and lighthearted rabbit animations. It superficially promises to take you down a rabbit hole (pun so dang intended) where you can explore the wild and wonky powers of generative AI with the chillest possible interface. Push a button like a walkie talkie! Spin a roller to scroll through menus. Take a photo of something and just ask about it!

This is the side of Rabbit I believe captured the public’s imagination. Lyu said at launch that he wanted to create a Pokedex of AI. And the hardware does feel like that, right down to a highlighter orange case that’s so bright it seems to glow in the dark.

Yet while Rabbit has a strong brand and perhaps an even stronger vibe, it doesn’t actually present a point of view. And that’s clear as soon as you begin using it.

Despite all the implied fun, the R1 still speaks to you in the same Siri-esque robot voice we’ve known for years. With almost no features, it has aimlessly prioritized integrations with companies like Uber and DoorDash. (Is this really the device I’ll use to order my car or my groceries?) Right now, it feels as if a limited version of the ChatGPT app has been shoehorned into a Playdate, a whimsical take on the Game Boy that Teenage Engineering also helped design. And that’s not so much frustrating as it is confusing.

So, what does it do?

The hardest part of using the Rabbit has been just trying to figure out what I should do with it.

Press the walkie talkie side button and you can ask it questions via plain language searches that feel similar to ChatGPT or Siri. All of the answers are spoken back to you with subtitles on the screen. These conversations are supposed to be viewable online later in your personal “rabbit hole,” but I never got that function working. And in the moment, searches yield no links that you can click to take you deeper into the internet. (Say the Rabbit finds a pair of jeans you want to buy: it can’t forward you to the shop to buy them.) For the most part, that means Rabbit’s information is an ephemeral question-and-response, which doesn’t feel any more useful, natural, or satisfying than the voice assistants we’ve had for years. The device makes almost no use of its own screen for information (a UI I assume is still in development). So voice searching is for factoids alone.

You can record conversations, which Rabbit also says are saved to the online “rabbit hole,” but you can’t actually listen to them later, see the transcripts, or read their outlines. (I wasn’t able to do anything after recording a conversation in my testing, but some people have had success with Rabbit summarizing the conversation in spoken audio. Again, this seems to be an idea still under development.)

The most enticing function is the camera. Double tap a button, take a picture, ask a question, and the Rabbit will answer it. The entire UX of this experience makes sense. It justifies having this unique piece of hardware in your hand. And I can’t help but wonder if Rabbit shouldn’t have simply gone all-in with the camera, to have thought about this as a Polaroid for AI first and everything else second.

Right now, the camera is inconsistently useful. It is excellent as a Seeing AI alternative (basically an assistive device for people who don’t see well) because it mostly just likes to list what’s in the image you’ve taken. It will identify generalized objects with ease: furniture, glasses, pet bowls, whatever.

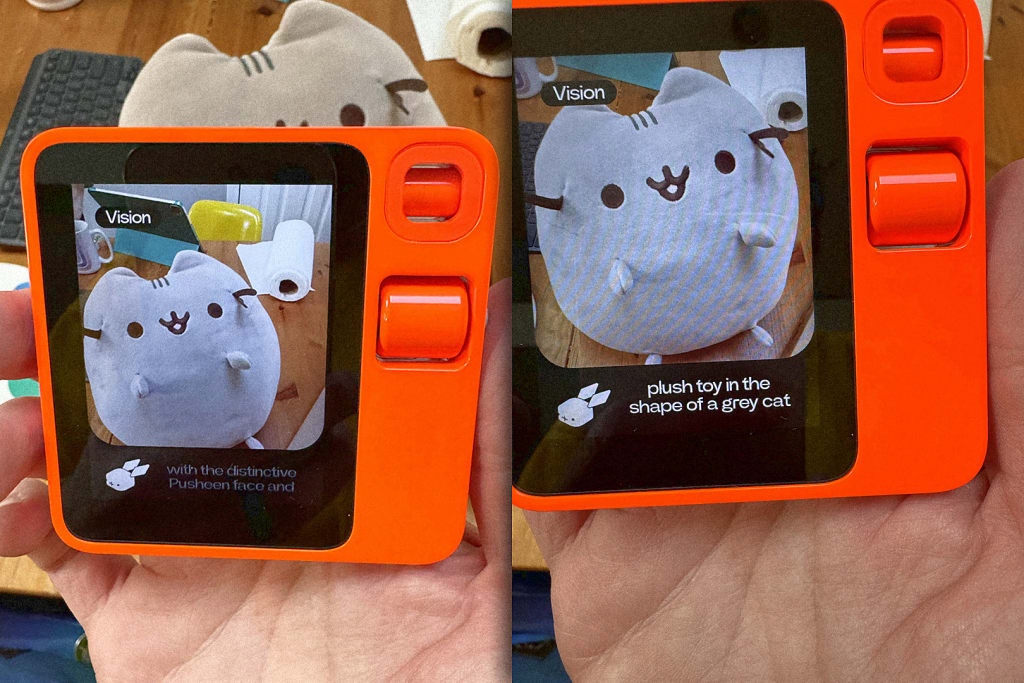

But listing what you already know is in front of you isn’t inspiring. What you want is a next level of synthesis, not just identification. Can it look at a stuffed animal and know it’s not just a stuffed animal, but a Pusheen? Sort of. Can it look at my fridge and know what I can make for dinner? Nope. Can it see a weird light on my air purifier and know what it means? Remarkably, yes.

I challenged the R1 with all of these tasks, and I was astounded when, with no context given, it identified the blue light on my Coway air purifier as signaling that the air was clear. This is the real “whoa!” stuff—that level where AI + search + a gadget could fast forward through layers of annoying googling and scrubbing YouTube clips. It’s insight in a flash, and that sensation is what I want from AI-as-utility, even if it’s not exactly fun.

Peering into my deepest secret place, my fridge—yeah, I’m unfussy enough to keep some Lites on hand, though champagne is my sin of choice—the R1 did a lousy job of offering me an idea for dinner. It misidentified some greens and suggested “a stir fry,” the sort of trolling, unspecific answer you could give to any pile of produce. It could have cataloged the foods I had, and offered some unique ideas. Instead, it poorly generalized what was in my fridge and punted with a stir fry. (I ordered tacos.)

When I asked it to identify the Pusheen stuffed animal, it was able to spot not just a plush cat toy, but the specific brand of it. Simply identifying what the stuff around us is continues to be a huge challenge with endless potential, and a camera in your pocket could make a good helper. But here’s the catch: I took another photo of the Pusheen, and the second time around, it just identified it as a plush toy. When that specificity of “Pusheen” disappeared, so too did the point of my question. I received a similarly vague response when I pointed the camera at a Nike Flyknit Bloom sneaker. The camera identified a “Nike shoe,” something obvious to anyone given the swoosh.

Ensuring AI can deliver an insightful answer to any question is not something that Rabbit can solve alone. But the fact that AIs often give different answers to the same question limits the utility of a product like the R1. AI hardware needs to design itself around the fuzzy, ever-shifting boundaries of AI knowledge. And the Rabbit R1 doesn’t do this, which is a big reason why it’s consistently disappointing to use.

What can Rabbit be next?

While testing the R1, I began to suspect that the device may be too cheap to be all that intelligent. It is an extremely democratic machine: the R1 costs $200 and charges no subscription, despite the fact that every query you make costs Rabbit money (not to mention that complex queries can take significantly longer to answer). For Rabbit to manage queries without losing significant capital, it can’t possibly throw every challenge at the top-tier ChatGPT (or equivalent) models, because these models require more compute and thereby cost more money per query.

Negotiating this cost balance, between when to use the best AI and the mediocre AI, is not simply a challenge for Rabbit. It’s a challenge for all the AI software and hardware we’ll see, and a reason why the big players like Microsoft, Google, and Apple hold an advantage from their revenue cushion, while boasting the scale to work out all sorts of hardware/software efficiencies around AI interfaces. (For instance, don’t be surprised when your smartphone handles most AI tasks on a local chip in the near future. Because it’ll be cheap and fast.)

Yet it’s hard to dunk on the Rabbit R1, even when what they’ve shipped is disappointing. Perhaps that’s because of its ties to the beloved hardware studio Teenage Engineering. Perhaps it’s because the entire tone of the product feels like the opposite of the Humane Ai Pin. Almost everything about the R1 signals that you’re playing with a toy, even if that’s an illusion.

In fairness, Rabbit’s ace in the hole, its real UI magical wonder trick, is still months from release. The company promises that you will be able to train little AI agents (a.k.a. “rabbits”) to do all sorts of tasks for you, from booking flights to cleaning up images in Photoshop. And if you’re not into training these rabbits on your own, you’ll just be able to download ‘em like apps. Rabbit is essentially planning to build the App Store for AI, and that’s really the company’s promise.

I remain intrigued, but I’m also more skeptical than I was three months ago. Nothing that Rabbit has executed outside its unique industrial design, and intense public interest, is all that notable . . . yet. Rabbit may be architecting a do-anything machine, but to do so, it first has to build a do-something machine. Or at least make this entire little AI experiment more fun along the way.
