The big social networks are busy stretching and twisting their content moderation policies to deal with the many kinds of disinformation appearing on their platforms in the run-up to the election.

On Wednesday, Facebook and Twitter entered new waters when they both throttled the spread of a New York Post story claiming proof that Hunter Biden sold the influence of his father, then-Vice President Joe Biden, to the Ukrainian energy company Burisma. The story's dubious source is a repair shop in Delaware, whose Trump-supporting owner claims to have leaked a copy of Hunter Biden's laptop hard drive to Rudy Giuliani. Facebook spokesman Andy Stone tweeted this on Wednesday morning:

While I will intentionally not link to the New York Post, I want be clear that this story is eligible to be fact checked by Facebook's third-party fact checking partners. In the meantime, we are reducing its distribution on our platform.

— Andy Stone (@andymstone) October 14, 2020

The move is an about-face for Facebook, which has repeatedly insisted to lawmakers that it’s not a publisher and does not “curate” content. CEO Mark Zuckerberg has trumpeted the virtues of free speech and shunned being an “arbiter of truth” on the internet.

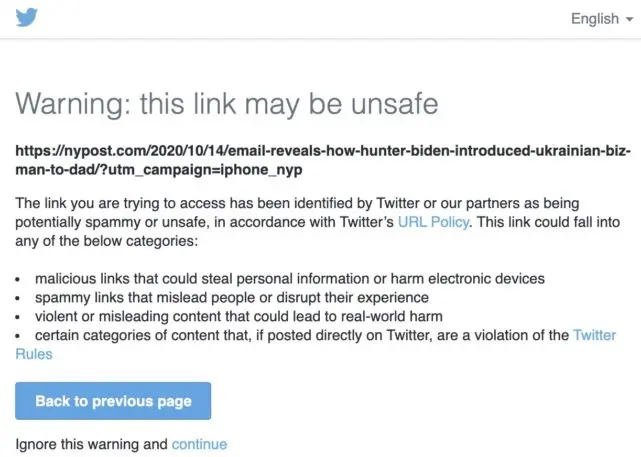

Twitter went even further, showing a warning screen saying "this link may be unsafe" to anyone who clicked a link to the story or tried to share it by DM within Twitter.

Twitter says it has a policy against linking to content that contains "personal and private" information and/or information that was obtained through hacking. (The Post's story contained a personal email address and private photos of Hunter Biden that it claims were lifted from a laptop in a repair shop and leaked to Rudy Giuliani.) On Wednesday afternoon, without explanation, Twitter stopped showing the warning messages. "We know we have more work to do to provide clarity in our product when we enforce our rules in this manner," the company tweeted later that evening.

Both Facebook and Twitter were quickly met with a wave of criticism from the right. Senators Josh Hawley and Ted Cruz, who have been beating the censorship drum for years, believe they've found their smoking gun; both quickly dashed off complaint letters to Facebook CEO Mark Zuckerberg and Twitter CEO Jack Dorsey. Hawley also complained to the Federal Election Commission and is now calling on Zuckerberg and Dorsey to testify on the matter before the Senate Judiciary Committee.

The response to the New York Post story was just the latest in a series of policy moves by Big Social to turn back the tide of disinformation on their platforms. Facebook said last week that it was banning QAnon pages, groups, and Instagram accounts, and hundreds of groups and pages related to the unfounded conspiracy theory suddenly disappeared. This week, it banned anti-vaccination ads (but not posts). It also banned posts that deny the Holocaust, and Twitter quickly followed suit. These moves are an escalation from Facebook's decision in August to block users from linking to a new Plandemic video, a move experts said didn't go far enough. At the time, Twitter affixed warning labels to links to the video.

Elections bring out the very worst in social networks, a reality that has been exacerbated by the pandemic. Users are simply creating and sharing more content about health and politics, and much of it is questionable. As these whack-a-mole attempts at banning dangerous content show, the biggest social networks seem to lack an overarching policy framework for dealing with the many flavors of disinformation that run wild over their networks from one day to the next.

The tools for limiting disinformation

Right now, big social networks have three main ways of dealing with disinformation. They can remove it, label it, or reduce its reach. In a Twitter thread, the Stanford Internet Observatory’s Renee DiResta says any of those approaches will be criticized as censorship.

The most practical and politically palatable way of dealing with disinformation is to allow it to remain on the platform, with a disinformation warning label if necessary. But reducing its reach, which DiResta defines as "throttling virality, not pushing the share of the content into the feeds of friends of the person sharing it," may be the most effective.

That “reach” is really what is so revolutionary, and so dangerous, about social networks. The potential of a piece of content to go viral and reach millions of people in a short period of time is what separates social networks from earlier communications networks.

It works with cat videos, and it works even better for posts containing rumors, conspiracy theories, and partisan hit pieces. It's the reach of disinformation, more so than the content itself, that does the harm. That's why social media companies may be better off controlling content's ability to spread rather than drawing fire by making judgment calls on the content itself. As Aza Raskin of the Center for Humane Technology put it, they should control "freedom of reach."

However, there may be a financial incentive not to take action in this way. The algorithms social media companies use to distribute content have tended to favor dumb, divisive, and even dangerous content, boosting it to ever greater virality by placing it high in search results or in "recommended" lists. The Wall Street Journal's Jeff Horwitz and Deepa Seetharaman reported earlier this year that Facebook knew in 2018 that its algorithms "exploit the human brain's natural attraction to divisiveness," a warning that was suppressed by the company's leadership. For years, YouTube's algorithm served as an ideal recruiting tool for extremist groups by serving up progressively more radical videos after a user had watched an initial, relatively tame one. Bloomberg's Mark Bergen reported last year that an ex-YouTube employee set up a hypothetical alt-right "channel" of YouTube videos and found that, if it were real, it would be among a handful of the site's most popular.

Facebook and others have recently made efforts to control this, but there remains a financial incentive to promote viral content because it rapidly creates more opportunities to show ads.

Developing a set of rules around what kinds of content should be throttled and what kinds can proliferate more freely may be a job that's bigger than the tech companies themselves. The handful of social platforms that play an outsized role in public discourse may come to be seen as something more like public utilities, as the phone companies were in the last century. They've come to play such a central role in private and public life that there may be too many stakeholders to allow them to regulate themselves.

“Coming up with policies and new mechanisms to address virality, and curation, are two of the most significant things platforms and the public, and likely regulators, need to do to address the most destructive facets of the current information environment,” DiResta wrote on Twitter.
