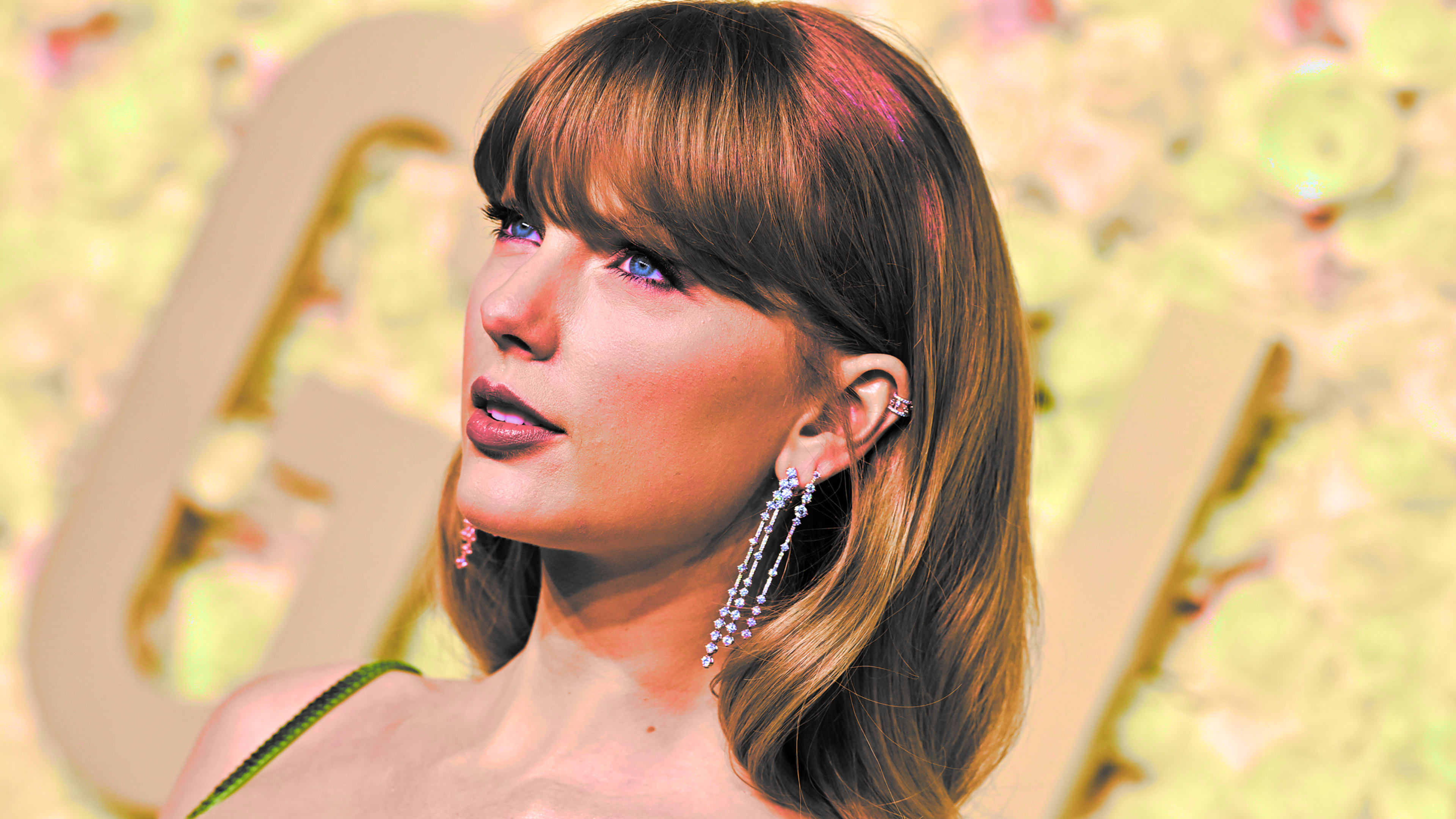

Taylor Swift has stared down record labels and fought for ownership of her music. She’s racked up Grammy Awards and led the world’s first billion-dollar tour.

But overnight, her power was punctured in the crudest, most depressingly familiar way possible for a woman: through deepfake pornography.

The explicit images, which Fast Company is choosing not to show or link to, depict various AI-generated sexual acts. One account that posted the images was live for nearly a day before being banned, by which point the images had been seen more than 45 million times. Swift's representatives did not respond to Fast Company's request for comment.

Debasing women by reducing them to nothing more than sexual objects, and creating explicit images of them, has been happening for a long, long time. Roughly a century ago, the New York Evening Graphic was using composite photographs that placed some people's heads on top of other people's torsos to present sexualized images of women. But the confluence of a global megastar like Swift and the rapid, unfettered rise of generative AI tools makes the images that went viral on social media overnight a low point, and a warning about the future of sexual abuse online if regulators and companies don't act.

While many of Swift's fans rallied together to flood social media search results with innocuous images to try to counteract the damage, the reality, all too familiar to victims of revenge porn and of the nascent spread of deepfaked images, is that these AI-created images now exist and are out there in the wild.

The controversy highlights a number of issues, says Carolina Are, a platform governance researcher at the Centre for Digital Citizens at Northumbria University. One is the attitude with which society treats women’s sexuality, and in particular the sharing of nonconsensual images, whether stolen from them or generated using AI. “It just results in this powerlessness,” she says. “It’s literally the powerlessness over your own body in digital spaces. It seems like sadly, this is just going to become par for the course.”

Platforms, including X, where the images were first shared, have been trying to stop the sharing of the images, including suspending accounts that have posted the content, but users have reposted the images on other platforms, including Reddit, Facebook, and Instagram. The photos are still being reshared on X, which did not immediately respond to a request for comment.

“What I’ve been noticing in terms of all these worrying, AI-generated content is that when it’s nonconsensual, when it’s not people that actually want their content created, or that are sharing their body consensually and willingly to work, then that content seems to thrive,” Are says. “But then the same content, when it’s consensual, it’s heavily moderated.”

Swift is far from the first person to be victimized through nonconsensual, AI-generated images. (A number of media outlets have previously reported on the existence of other platforms that enable the creation of deepfake pornography; Fast Company is not linking to those platforms.) In October, a Spanish town was torn apart thanks to a scandal that saw deepfake images created of more than 20 women and girls, the youngest of whom was just 11 years old. Deepfake pornography has also been used as a means to extort money out of victims.

But Swift is perhaps the highest-profile victim, and the one most likely to have the resources to fight back against the tech platforms themselves, which may push some generative AI tools to change tack.

One nonacademic analysis of nearly 100,000 deepfaked videos published online last year found that 98% of all such videos were pornographic, and 99% of the people featured in them were women. Of those in the videos, 94% worked in the entertainment industry. A linked survey of more than 1,500 American men found that three in four of those who consumed AI-generated deepfake porn didn't feel guilty about it. About a third said that because they knew it wasn't really the person, it wasn't harmful, and a similar share said it doesn't hurt anyone as long as it's only for their personal interest.

However, it is harmful, and people ought to feel guilty: a New York law makes sharing nonconsensual deepfaked sexual images illegal, with a one-year jail sentence for doing so, and provisions within the U.K.'s Online Safety Act make the sharing of such images illegal.

“It’s clear that an ongoing and systemic disregard for bodily sovereignty is fuelling the harm posed by deepfakes of this kind, which are absolutely sexual abuse and sexual harassment,” says Seyi Akiwowo, founder and CEO of Glitch, a charity campaigning for more digital rights for marginalized people online.

“We need tech companies to take definitive action to protect women and marginalized communities from the potential harms of deepfakes,” says Akiwowo.