Halfway through the Epic Games presentation at the 2023 Game Developers Conference in San Francisco, a German actor named Melina Juergens scowled, sending ripples through the assembled crowd of developers in the Blue Shield of California Theater at Yerba Buena Center—and across the internet, as millions of people encountered the clip in their social media feeds.

The fascination with her expression had everything to do with the technology that made it possible. Juergens, known for portraying Senua in the Hellblade video game series, was helping Epic Games demonstrate a new technology, currently available in private beta and due for public release this summer. Called MetaHuman Animator, it can take raw video from an iPhone and map a fully three-dimensional model of a face that, in a game world, can not only laugh and cower and, yes, growl, but also mimic all the subject’s other facial expressions with disturbing accuracy. Those expressions could come to life in an avatar being controlled by a human game player or in the face of an AI-powered NPC, or non-player character. Or two NPCs. Or 200 of them.

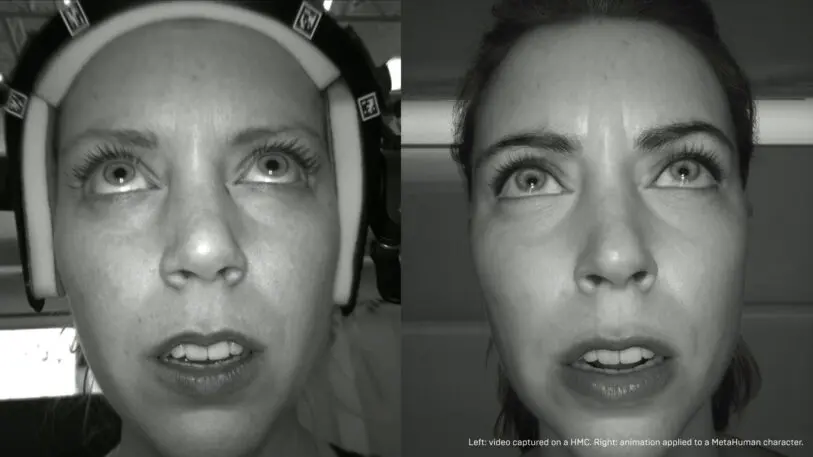

On the stage, Juergens scowled and mugged in front of a barebones setup, an iPhone 12 mounted to a tripod, which beamed the video to a PC on stage. Epic’s MetaHuman app recorded it and, within seconds, spit back a 3D computer-generated model of the moment: a photorealistic cartoon that was uncanny (too digital, yet not).

The primary news of the event was the release of Unreal Engine 5.2, an iteration of the software tools that developers use to create the 3D worlds of video games, and so much more. These days, Unreal is an integral part of entertainment media. Some 43% of game developers use Unreal, either as their primary game engine or a secondary tool. The software tool kit also now powers action films, automobile designs, and architectural models. Epic formally launched Unreal Engine 5 in the spring of 2022. A year later, the newest version of it was already under the hood of everything from Epic’s massively popular Fortnite video game to the computer-generated effects in HBO Max’s House of the Dragon and The Mandalorian on Disney+.

At the Game Developers Conference, Epic unveiled a slew of advancements, including a new shading system, Substrate, which helps developers enhance the interplay of light and shadow in their games. It also announced new tools for Procedural Content Generation, whereby developers define general rules and parameters ahead of time for how a game’s world should look and behave, and then let the computer spin out new spaces and terrain on its own, in close to real time.

The event was appropriately eye-popping. Epic executives showed off Substrate with a computer-generated animation of a Rivian electric truck bouncing through puddles and glens, kicking up wet mud and clouds of dust as it drove along, light glimmering off its glossy teal-painted exterior.

But the showstopper was MetaHuman Animator. To show it off, executives welcomed Juergens to the stage, where she scrunched her face to change her expressions—first fear, then that angry scowl (“Raaawr!”), and, finally, a bemused and wistful little look askance. On a massive screen above, the audience watched a projection of the app’s interface on the iPhone as it recorded her expressions.

Within seconds, the app converted Juergens’s face into a gray mask of polygons. After some clicking and dragging around on the app by an assistant, Vladimir Mastilovic, Epic Games vice president of digital humans technology (what a job title!), unveiled the app’s finished work—a copy of Juergens, neatly coiffed in gentle studio portraiture lighting. Epic then demonstrated how it could apply her animated expressions to a variety of different characters—not just one modeled after her.

The crowd erupted in applause.

“Broooo!” said an off-camera voice in a live stream by Ninja, who was following the news from the home PC where he broadcasts his gameplay to 18.5 million followers on Twitch. The professional gamer reeled back in amazement. “They did that in three seconds, using an iPhone!”

The technology will be a boon for indie filmmakers and game developers, or anyone else whose imagination outpaces their ability to hire actors in motion-capture suits. Currently, making CGI characters quickly and cheaply means settling for characters with dead eyes set in rigid polygon-heads. On stage, Juergens’s digital doppelgänger had eyes that looked alive, and facial muscles that moved. These AI characters can give performances, in other words, bringing creators with small budgets closer to levels of realism previously reserved for blockbusters.

But the demo also triggered a lurch-in-the-stomach feeling among some viewers. It wasn’t the hyperrealism of the actress’s image. Juergens’s doppelgänger resembled the characters from any contemporary premium console game or CGI-heavy Hollywood blockbuster—so many of them were also created using Unreal Engine tools, after all. It was the manner of its creation, the speed and ease with which it appeared. Behold! A lifelike human, formed in less than a minute using a three-year-old iPhone model!

This movie feels familiar.

Tech lords concoct a fabulous new magic trick, make it fast and free to use, and loose it on the world. Users leverage that magic to invent entirely new forms of havoc. Algorithms crank that havoc to previously unimaginable intensities. Hey look: That new version of Friendster with the “poke” button? Ten years later, it’s stoking riots in Myanmar. That fun video-streaming site where we all watched Lazy Sunday? Its automated video suggestions are prodding teenagers toward fascism and ISIS. The time-to-perdition is getting exponentially shorter. We all started playing with the AI chatbots in November; we goaded one into an existential crisis by February.

So, even as Epic’s new human animation tool will undoubtedly unlock new forms of creativity, there’s also the very real possibility that AI-powered, free-to-use game engines and the metaworlds they power will soon experience their own TIWWCHNC (“this is why we can’t have nice things”) moment.

Maybe in the future, users of apps like MetaHuman will scrunch their faces at their iPhone screens and deploy different versions of these fake humans across the metaverse, creating a spammy NPC army offering online betting credits or neo-fascist lies. Maybe in the future, only hip teenagers will be able to discern real humans from the fake. The rest of us won’t know whether we’re actually FaceTiming with our grandkids or whether they’ve handed us over to lookalike chatbots. (Back in our day, we used our real faces to ignore our elders, thank you very much.) Maybe this makes you furrow your brow? Never fear: Soon enough, your metahuman will be able to smile big enough for the both of you.
