Facebook says it’s designing a pair of augmented reality glasses that can add digital content to the world in front of us. They might be years away from shipping. And to be useful to us—to walk us through a pizza recipe or help us find the car keys—they need to offer a built-in assistant with some serious AI smarts. The challenge is getting enough video footage—shot from the perspective of the user—to train the assistant to make inferences about the world as seen through the lenses of the glasses.

That kind of first-person training video is scarce. So Facebook partnered with 13 universities to create a large new data set of “egocentric” training video called Ego4D. The universities recruited a total of 855 people in nine countries to strap on GoPro cameras and collect the video. In all, participants captured 3,025 hours of first-person video from their everyday lives.

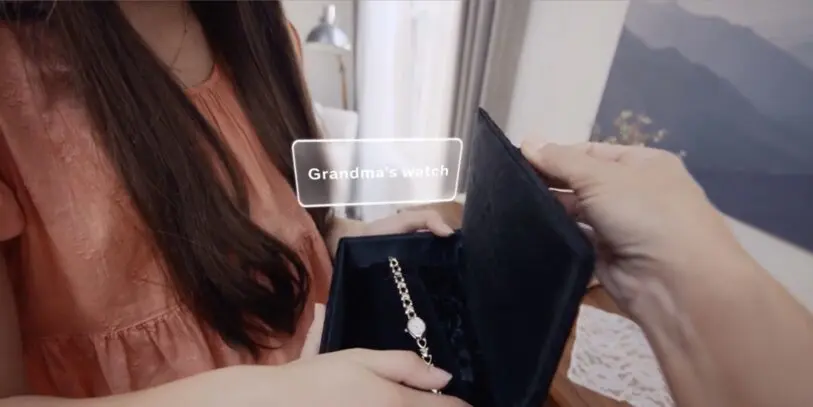

The new data set will help Facebook researchers begin the process of creating and training an AI assistant to understand how users interact with other people, objects, and the environment around them. The AI, Facebook says, will be trained to recall things a user has seen or heard in the past to help with present activities, and to anticipate things the user might need in the future.

Facebook has boiled those general concepts down into five more-specific AI tasks, which hint at how the company sees its future AR glasses being useful. Facebook’s lead researcher on the Ego4D project, Kristen Grauman, told me the tasks were chosen based on how well they “span the fundamentals needed to build any or many applications.”

“Audio-visual conversation transcription” listens to the user’s social conversations and records or transcribes them into text that could be recalled later. If you’re following a recipe, for example, you might call up a secret cooking tip your grandmother once shared.

“Social interaction” adds a layer on top of the audio-visual conversation transcription task, Grauman says, by detecting “who is looking at me and when, who’s paying attention to me, and who’s talking to me.”

Grauman says that the data set created by Facebook and its university partners contains anywhere from 50 to 800 hours of video footage for each of the use cases. Figuring out what it showed involved plenty of human labor: “Someone watched the video and every time something happened, [they] paused and wrote a sentence about it,” she says. The process yielded about 13 sentences per minute.

In all, the annotation job took a quarter of a million hours of work by professional labelers. But these annotations are vital for teaching the AI models to make inferences and recall things. “It’s really cool because it gives us the language-vision connection and it gives us a way to index the data from the get-go,” Grauman says.

The data set will lay the groundwork from which researchers can push the AI to understand a variety of everyday tasks the user might need help with. But training an AI model to classify and predict the universe of things, people, and situations a user might encounter during their day is a very big challenge, and Facebook has a long way to go toward producing a helpful and versatile assistant.

“The first real barrier is the data, so we’re taking a good crack at that through this contribution,” Grauman says. “But even with the data, now the fun begins in earnest as far as the core research challenges.”
