This story is part of Doubting the Dose, a series that examines anti-vaccine sentiment and the role of misinformation in supercharging it.

It takes about three taps on Instagram to find numerous sources of misinformation about the COVID-19 vaccines.

The problem has been well reported. And Facebook, which owns Instagram, has made several rounds of changes to discourage the spread of vaccine misinformation on its platforms. Facebook says it’s already removed millions of Facebook and Instagram posts containing false information about COVID-19 and the vaccines. But anti-vaccine content remains a pervasive presence on one of the most popular social networks.

Vaccine misinformation that spreads on social platforms like Instagram is one component of the ongoing “infodemic,” a dimension of the crisis that’s impacted how people think about the pandemic and the public health initiatives combating it. Currently almost a third of Americans do not plan to get vaccinated, as a Pew Research study from early March shows. And in order to reach herd immunity—when 80% to 85% of the population carries antibodies—a significant segment of the fearful, doubtful, and paranoid will need to be convinced to get their shot, for the good of everybody.

“The more people who remain unvaccinated, the more opportunity the virus has to take hold in a community and create an outbreak,” says Summer Johnson McGee, dean of the School of Health Sciences at the University of New Haven. “As populations reach herd immunity, less social distancing, greater social mixing of groups, and larger-capacity events should be possible without fear about major outbreaks and lockdowns.”

As the numbers of willing-yet-unvaccinated people go down in the next few months, a new phase in the information war may begin. If curbing misinformation’s spread has been the focus so far, then actively changing the minds of vaccine doubters may soon become a pressing priority. The health system, public health agencies, and Big Pharma might have to adopt new tactics for changing vaccine hesitancy to vaccine willingness—and the Biden administration is launching a vaccine confidence drive to do just that.

One focus of this effort should be Instagram, which has become a microcosm of the broader infodemic. The photo-sharing app saw huge growth in explicitly anti-vaccine content and more insidious types of COVID-19 misinformation during the pandemic’s early days, and remains a hub for people and posts that sow doubt about the vaccine. Similar to other social networks, it also has a recommendation algorithm that feeds users more and more extreme content.

Facebook’s response has been to launch blanket measures to provide factual information and take down blatant violations. But while this is a good first step, Instagram could also take the lead in targeting hesitancy directly, becoming a first line of defense against vaccine falsehoods for users who are particularly susceptible to believing them.

Instagram’s anti-vax problem

For much of the pandemic, Instagram has proved a fertile ground for spreading paranoia about the virus and the vaccines. AdiCo, a misinformation blog, found last year that during the first months of the pandemic the Instagram accounts of some anti-vaccine influencers, such as Robert Kennedy Jr., saw rapid audience growth relative to the growth of their Facebook pages. RFK Jr.’s Instagram followers almost tripled to more than 350,000 in March and April 2020, the blog reported. Meanwhile, RFK Jr.’s Facebook page grew only 24% to 127,000 followers. (The World Health Organization declared COVID-19 a global pandemic on March 11, 2020.)

Lyric Jain, CEO of the misinformation detection platform Logically, says the peddlers of vaccine misinformation formed early connections with social media users who felt uneasy about the virus.

“They were quite good during the early stages of the pandemic at identifying [vulnerable] communities and targeting them with narratives that would likely move them along that journey toward being an anti-vaxxer,” Jain said during a panel on misinformation at the Fast Company Most Innovative Companies Summit earlier in March. “They were very good at identifying the kinds of levers and pain points that likely had the greatest resonance with individuals: nurses, doctors, mothers, early mothers, women who were pregnant.”

The insidiousness of vaccine misinformation on social media is that it doesn’t have to prove anything. It needs only to plant a seed of doubt. With enough repetition of the message, skepticism and hesitation set in—and it could be enough to keep someone from getting themselves or their family members vaccinated.

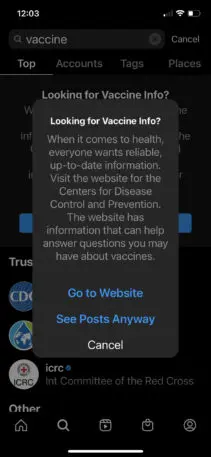

The problem persists on Instagram today. Simply typing “vaccine” into the Instagram search box brings up a number of anti-vaccine accounts. In fact, at the time of this writing, 8 of the first 10 accounts returned by the search are anti-vaccine or vaccine conspiracy theory accounts. With some, you can tell just by the name. Others have more innocuous handles, and I had to go into the actual posts to find the vaccine scare content, misinformation, and conspiracy theories.

There are also accounts that specialize in collecting reports of people getting very sick or even dying after getting their shot, the implication being that the illness or death was caused by the vaccine. But a little digging usually reveals that authorities could not establish that the vaccine caused the illness or death. In the current environment of doubt and paranoia over the vaccines, it’s not surprising that people might jump to conclusions that support what they already believe.

Deeper and deeper

There’s evidence that Instagram’s content suggestion algorithm can send users deeper and deeper into this way of thinking. The misinformation watchdog group the Center for Countering Digital Hate (CCDH) recently published a study that examined how often Instagram’s content suggestion algorithm offered posts containing misinformation.

To test the recommendation algorithm, researchers set up three groups of Instagram accounts: One group followed anti-vax, QAnon, health influencer, and white supremacy accounts; one followed only accounts that have been certified as trustworthy information sources (like the U.S. Centers for Disease Control and Prevention); and a final group followed a mix of anti-vax, QAnon, influencer, and official health information accounts. The researchers found that Instagram suggested posts and accounts containing misinformation to the test accounts that already expressed an interest in anti-vaccine and QAnon content, and did not suggest misinformation content to the accounts that followed only official sources of vaccine information. In all, Instagram suggested 104 misinformation posts during the September to November 2020 study period. Half of them contained COVID-19-related misinformation, and a fifth of them contained vaccine misinformation specifically.

The researchers found that if an Instagram account followed a health influencer with loose ties to the anti-vax movement, the algorithm might suggest posts from more hard-core and high-profile accounts. If the algorithm detected the user’s interest in anti-vax content, it might suggest other types of radicalized content, such as posts containing anti-Semitic conspiracy theories, the researchers say.

“Conspiracist content is driven by people who display epistemic anxiety, but at the same time it never satisfies them,” CCDH CEO Imran Ahmed says. “So once you believe one conspiracy theory, you start thinking and believing others. People rabbit-hole.”

There are business reasons for the way the content suggestion algorithms work. Running a free social network involves a constant process of keeping people engaged and looking at content longer, so that more ads can be displayed. Content is ordered not by factualness or relevance, but in a way that’s most likely to keep users scrolling, Ahmed says.

An Instagram spokesperson says the CCDH study ran during the fall of 2020, and so may not reflect any content moderation changes put in place after that, adding that the company has removed 12 million pieces of COVID-19 or vaccine misinformation since the start of the pandemic. The spokesperson also took issue with the sample size of the study, which she said was too small to represent the experiences of all Instagram users.

What Facebook has done

Facebook has, over the course of months, gotten more aggressive about removing, labeling, or downplaying false or misleading information about the vaccines on its platforms.

It booted high-profile anti-vaxxer Robert Kennedy Jr. from Instagram after Kennedy repeatedly posted false and debunked claims about the virus and the vaccines. But the company left up Kennedy’s Facebook page, which has more than 300,000 followers. A Facebook spokesperson said Facebook and Instagram use different systems for counting how many community guidelines violations must occur before an account is removed. Kennedy had exceeded the limit on Instagram, but not on Facebook. The company doesn’t share how many violations are needed to trigger a suspension because it fears misinformation spreaders would use that information to game the system.

Facebook finds itself in a position of balancing users’ freedom of speech against the harm to public health that can result from spreading vaccine misinformation. But, as with misinformation about the 2020 election, the platform has been forced to progressively err on the side of restricting speech, this time for the sake of public health.

In early February 2021, the company updated its policies to bar the posting of debunked false claims, like the one about the vaccines causing autism, or the one about the vaccines causing COVID-19. For posts that don’t exactly parrot lies but do express doubts about the safety or efficacy of the vaccines, Instagram has begun to add a label providing relevant information from verified sources to “contextualize” the post. But Instagram is applying these labels to any post that mentions COVID-19 or the vaccines in any context; after the labels have been seen a number of times they may be easy for users to ignore.

Facebook also said that when people search for accounts using words related to vaccine misinformation, it would return results for official sources of vaccine information—a blanket change made almost a year into the pandemic. Now, when you search for accounts on Instagram using COVID-19- or vaccine-related keywords, the results are topped by the accounts of the CDC; Gavi, the Vaccine Alliance; and the Red Cross. But the real search results—including a variety of anti-vaccine and conspiracy theory accounts—appear just below.

After 8,000 people attended an anti-vaccine rally at Dodger Stadium in Los Angeles that was organized on Facebook, the Biden administration asked the company to stop the spread of vaccine misinformation on its platforms, fearing that it could grow into a real-world movement, Reuters reports.

On March 15 Facebook announced the launch of its COVID-19 Information Center, which it said will now appear at the top of Instagram users’ feeds. When I last checked, the link to the information center did not appear at the top of my feed.

Countering vaccine lies

However, these broad measures may not ultimately effect the change that’s needed.

Logically’s Jain believes social networks should start by gaining a much better understanding of the types of users who are most susceptible to misinformation and conspiracy theories on their platforms. Facebook may be thinking along those lines already: The Washington Post’s Elizabeth Dwoskin reported that Facebook is now conducting a large internal study to understand how the proliferation of vaccine misinformation across Facebook and Instagram is perpetuating vaccine hesitancy throughout the country.

“Once we have an understanding of what they’re doing and which groups they’re targeting, we’ve got to stop the bleeding,” Jain said. “We’ve got to stop more people [from] going down that breadcrumb trail and then understand who’s least far down that journey and can we bring them back.”

But bringing people back may require much more than just reducing their exposure to misinformation. It may require more active refutation of the falsehoods that underpin some anti-vax propaganda. Facebook knows this. The company recently announced that it is providing millions in ad credits to health advocacy groups to run ads on Facebook and Instagram containing the truth about the vaccines. That’s a positive step, but it’s still Facebook outsourcing the work of fixing its own problem.

Facebook alone has the detailed information on which users have consumed anti-vaccine misinformation on Facebook and Instagram. Perhaps it should use that data to target its own users directly with information that corrects the record. Jain says this can be done using the same powerful social communication and message targeting tools used by anti-vax groups to plant the seeds of vaccine doubt in the first place.

How effective Facebook is at controlling vaccine information on its platforms could have a real impact on the kind of world we’ll all be living in this coming summer and fall. A world in which just 65% or 70% of the population reaches immunity could be more dangerous and more restricted than a world in which 85% or 90% has been vaccinated.

After all that Facebook and Instagram have done to perpetuate vaccine anxieties over the past year, profiting from the ad sales all the while, it’s time the social networks use their power to counter vaccine lies with scientific truth.
