Today is Data Privacy Day. Don’t kick yourself if you haven’t heard of it; not many people have. Yet it’s never been more important, as technology for capturing, analyzing, and categorizing virtually any imaginable piece of data about us, from our heartbeats to our most private thoughts, grows ever more advanced, invasive, and pervasive.

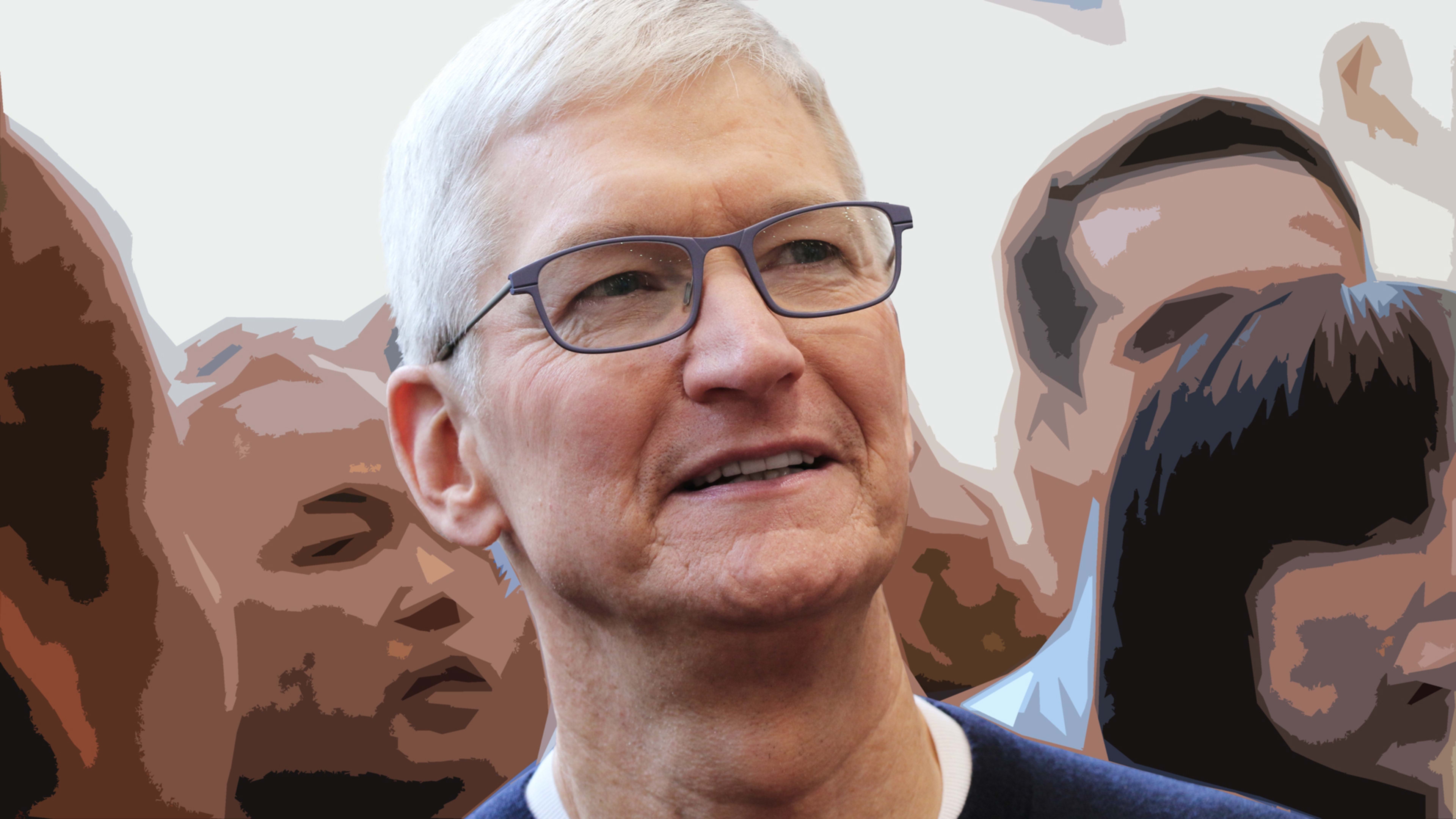

To mark the day, Apple CEO Tim Cook gave a live-streamed keynote speech opening a panel discussion on the importance of user privacy at the Brussels-based Computers, Privacy & Data Protection conference this morning. That he keynoted the panel is no surprise: Apple is the only Big Tech company that has fully embraced privacy, not just as a feature but also through the mantra that privacy is a fundamental human right.

If you care about privacy—and you should—Cook’s frank speech is worth a watch. He elaborated on his thoughts when I interviewed him moments afterward. Our talk was not the typical kind of PR-managed privacy patter some company execs give. And while I’ve heard Cook speak many times about privacy before, this time it felt like the gloves were really off.

It wasn’t just his tone on privacy. Cook also didn’t hold back as he spoke about tech-fueled extremism, his distaste for lumping “Big Tech” companies together, government regulation, self-censoring, and the chances for a federal privacy law in America.

On the biggest 21st-century threats

In a comparison that I’ve never heard anyone make before, Cook ranks the erosion of privacy alongside the disastrous man-made global warming the planet is experiencing.

“In terms of privacy—I think it is one of the top issues of the century,” he tells me. “We’ve got climate change—that is huge. We’ve got privacy—that is huge. . . . And they should be weighted like that and we should put our deep thinking into that and to decide how can we make these things better and how do we leave something for the next generation that is a lot better than the current situation.”

I brought up Elon Musk’s favorite doomsday villain, artificial intelligence, and asked where that ranks as a technological threat compared to greater privacy erosions. “I think both of those can be used for bad things and can be amplified by technology,” Cook says. “And so which one is above the other one? I don’t know. I would say we can’t let ourselves just choose one of those two to focus on. We have to have ethical AI, just like we have to have ethical data privacy and data collection. There’s an intersection of those two as well, right? Both are paramount and have to be worked on.”

On how lack of privacy changes our behaviors

At the start of our conversation, I mentioned to Cook that—as someone who sees the value in data privacy—I was excited about Apple’s upcoming App Tracking Transparency feature and its new App Store privacy labels. But while many people I know do care about such new privacy-enhancing features, I also know plenty of others who just shrug and say, “What do I care if an app collects my data? I have nothing to hide.”

I asked Cook how he’d respond to people who take a nonchalant attitude toward privacy. He pointed out that while people may say they don’t care, simply knowing that companies suck up so much information about them, such as shopping data and search queries, can eventually lead those same people to self-censor.

“I try to get somebody to think about what happens in a world where you know that you’re being surveilled all the time,” he says. “What changes do you then make in your own behavior? What do you do less of? What do you not do anymore? What are you not as curious about anymore if you know that each time you’re on the web, looking at different things, exploring different things, you’re going to wind up constricting yourself more and more and more and more? That kind of world is not a world that any of us should aspire to.

“And so I think most people, when they think of it like that . . . start thinking quickly about, ‘Well, what am I searching for? I look for this and that. I don’t really want people to know I’m looking at this and that, because I’m just curious about what it is’ or whatever. So it’s this change of behavior that happens that is one of the things that I deeply worry about, and I think that everyone should worry about it.”

On “Big Tech”

I told Cook that back in the early 2000s it seemed like most people, including many journalists, treated tech companies like white knights on the hill who were going to solve all the world’s problems. It seemed like every month a new gadget or piece of software was coming out that vastly improved our daily lives: the iPod, Wikipedia, Google Maps. But almost two decades later, tech companies, especially the biggest ones, are increasingly demonized, in part due to privacy concerns. Does Cook think that demonization is warranted?

On tech’s role in extremism

Speaking of values, Cook doesn’t shy away from confronting the role some tech companies have played in the radicalization and propagation of extremist ideologies in America. When we spoke, he was the one to point out that a lack of privacy writ large is also what enables data to be collected to build profiles about people that are then “being misused in a way to stir up extremism and other things.”

I asked him if it’s fair to blame some technology companies for the role their platforms may have played in radicalizing some of the people who stormed the U.S. Capitol earlier this month, an attack that led to five deaths.

“Technology . . . can be used to amplify, can be used to organize, and it can be used to try to manipulate people’s own thinking,” he says. “And so I think a sort of a fair view—and I hope it gets one—a fair assessment would say that technology was a part of that equation. And we shouldn’t run from that. We should try to understand it and try to figure out how are we going to not have it happen again. How are we going to improve?”

On privacy legislation

In his address at the Computers, Privacy & Data Protection conference, Cook spoke favorably about the European Union’s General Data Protection Regulation (GDPR), which provides data and privacy protections for EU citizens. It made me wonder how much responsibility he thinks governments have to step in with privacy legislation when many technology companies seem unwilling to provide greater protections themselves.

“I think GDPR has been a great foundational regulation,” he says. “And I think it should be the law around the world. And then I think we have to build on that—we have to stand on the shoulders of GDPR and go to the next level.” That next level includes looking at regulating privacy protections that Apple already takes seriously, such as its four privacy pillars of data minimization, on-device processing, transparency, and security. “I think we need to think through each one of those at a level of depth that we could write a new regulation for. Because I worry that companies are not going to do it themselves.”

That being said, Cook admits he is generally not a fan of regulation. “I think it can have a lot of unintended consequences,” he explains. “But in this particular case, I think we’ve run the experiment. It hasn’t worked very well. And I think we need governments around the world to join and hopefully come up with a standard for the world instead of having a patchwork quilt.”

On the future

I ended by asking Cook if America, with all its tech giants and their accompanying powerful lobbying efforts, will ever implement legislation along the lines of the EU’s GDPR. Considering the rather grave and frank tone of our conversation, I was heartened to hear that he’s hopeful such regulation will indeed be realized.

“I think people are seeing the results of not having it,” he says. “Not everyone, but the vast majority of people are not happy with the way things are going. And I think that as people begin to feel that way the representatives of those people begin to change their views as well. And so I’m very optimistic about this—call me naive, but I’m optimistic about it.”

It’s an optimism that I share. And its fruits can’t come soon enough.

Recognize your brand’s excellence by applying to this year’s Brands That Matter Awards before the early-rate deadline, May 3.