We’ve all heard about the gender gap, the fact that men make more money working the same jobs that women do, and that men have more of a voice in politics and in the media. Indeed, in my profession, 60% of bylines belong to men—and that figure jumps to nearly 70% if you look specifically at breaking news.

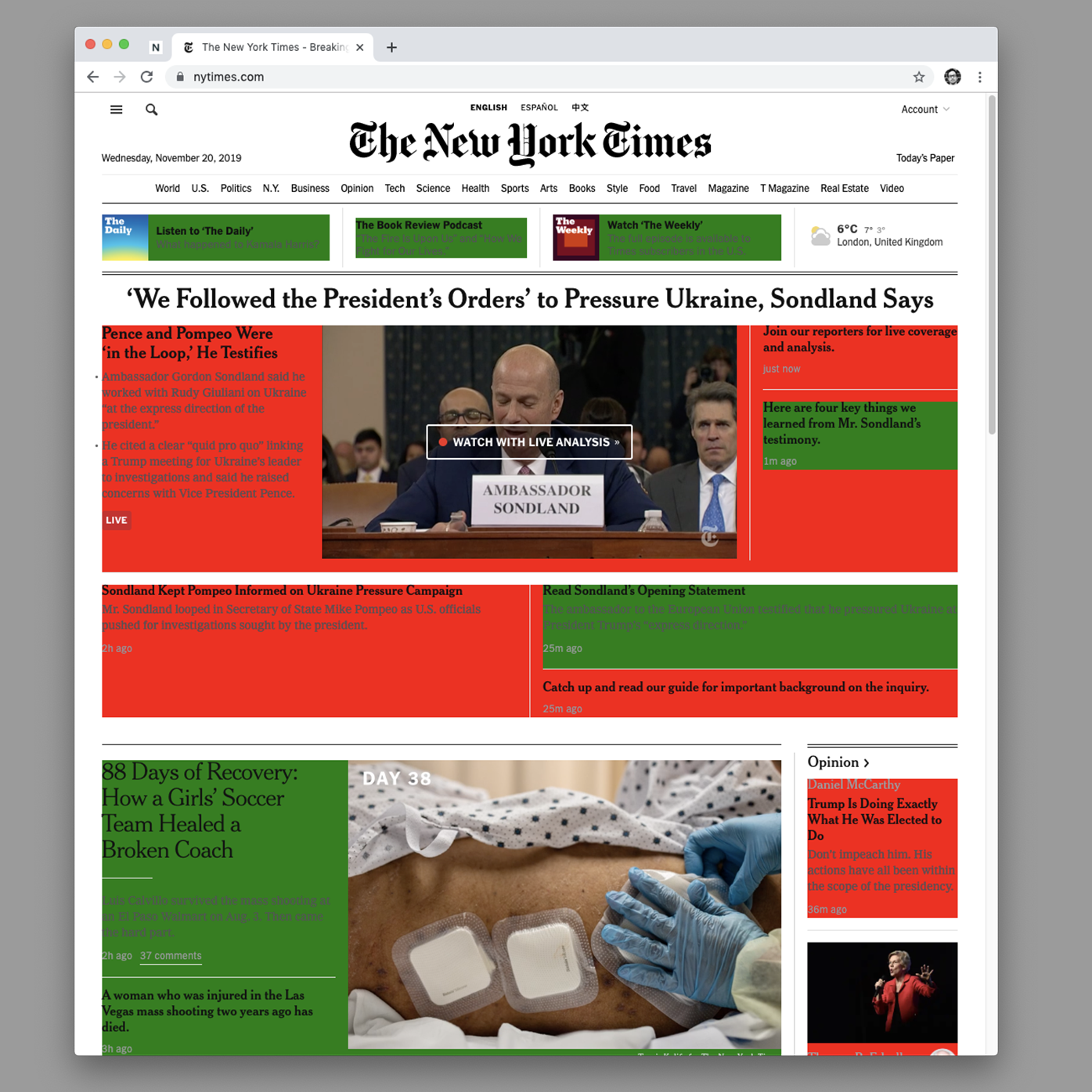

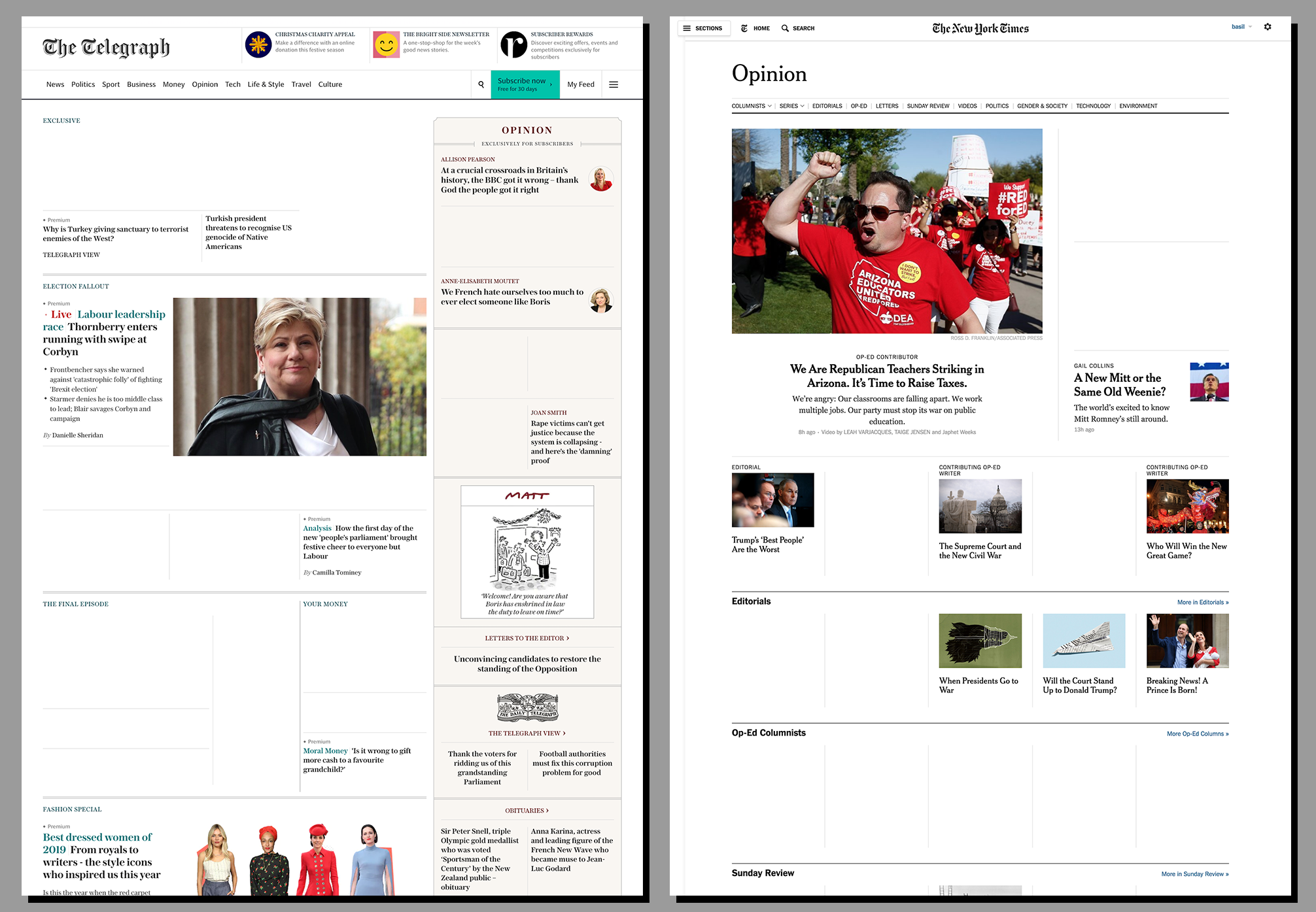

But this phenomenon is mostly invisible. It’s hard to glance at The New York Times or The Wall Street Journal and instantly know how much of the content was written by women or men, unless you’re in the mood to count bylines. Or it was, until the U.K. data and design studio Normally developed the Gendered Web plug-in, a filter for news sites that makes every story written by men disappear, so that only the voices of women remain.

“We weren’t necessarily trying to make a point [about gender] when we started out,” says Marei Wollersberger, Managing Director and Co-Founder at Normally. “What we wanted to do was see what it might feel like to experience the web in different ways.”

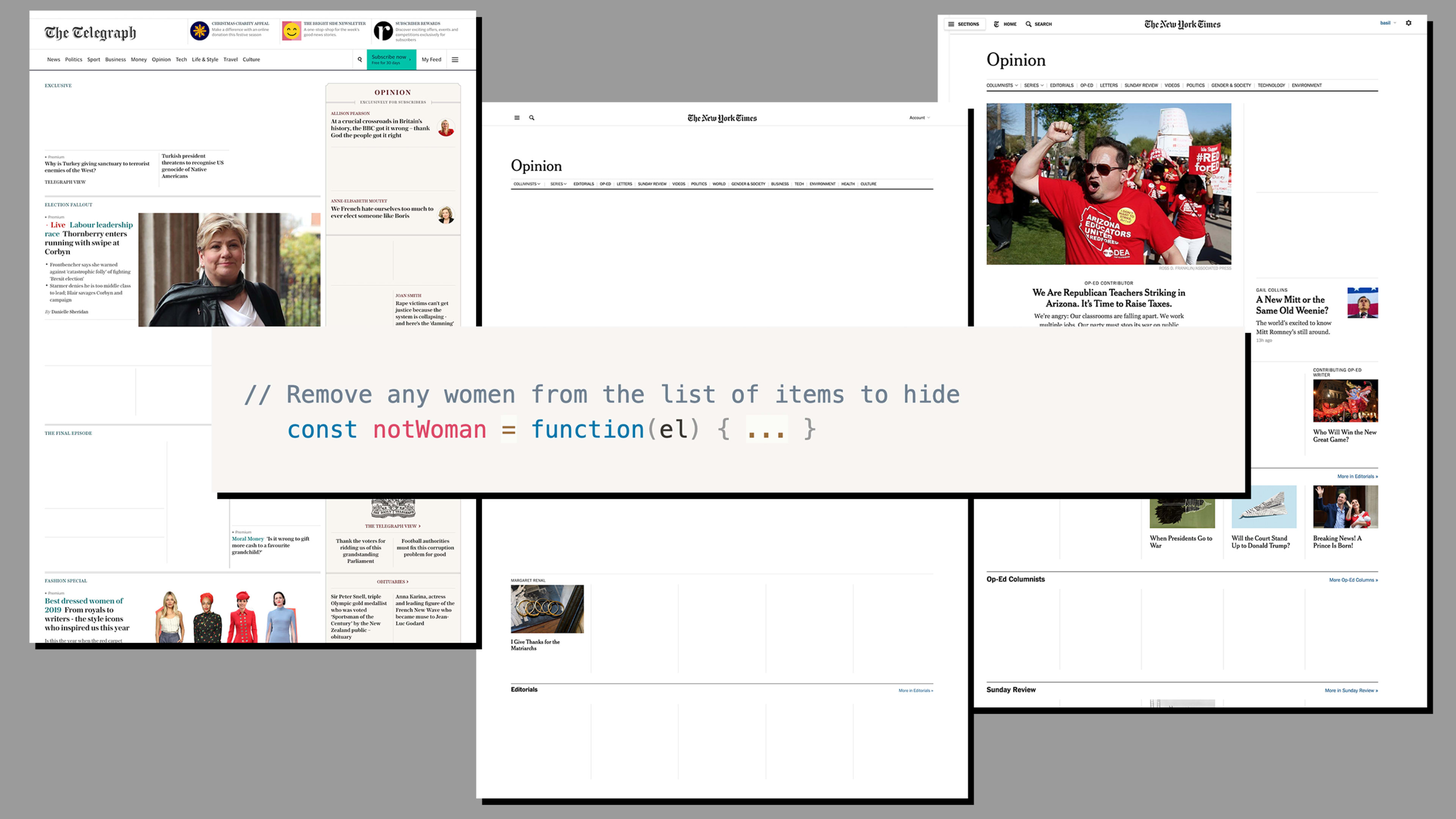

But early experiments quickly led them to explore the concept of gender and how, as we browse the web, our presence within a man-centric bubble is constantly reinforced. Using The New York Times as a first example, the team coded a filter that would scan bylines, pick out the women’s names, and allow only those writers’ stories to appear.

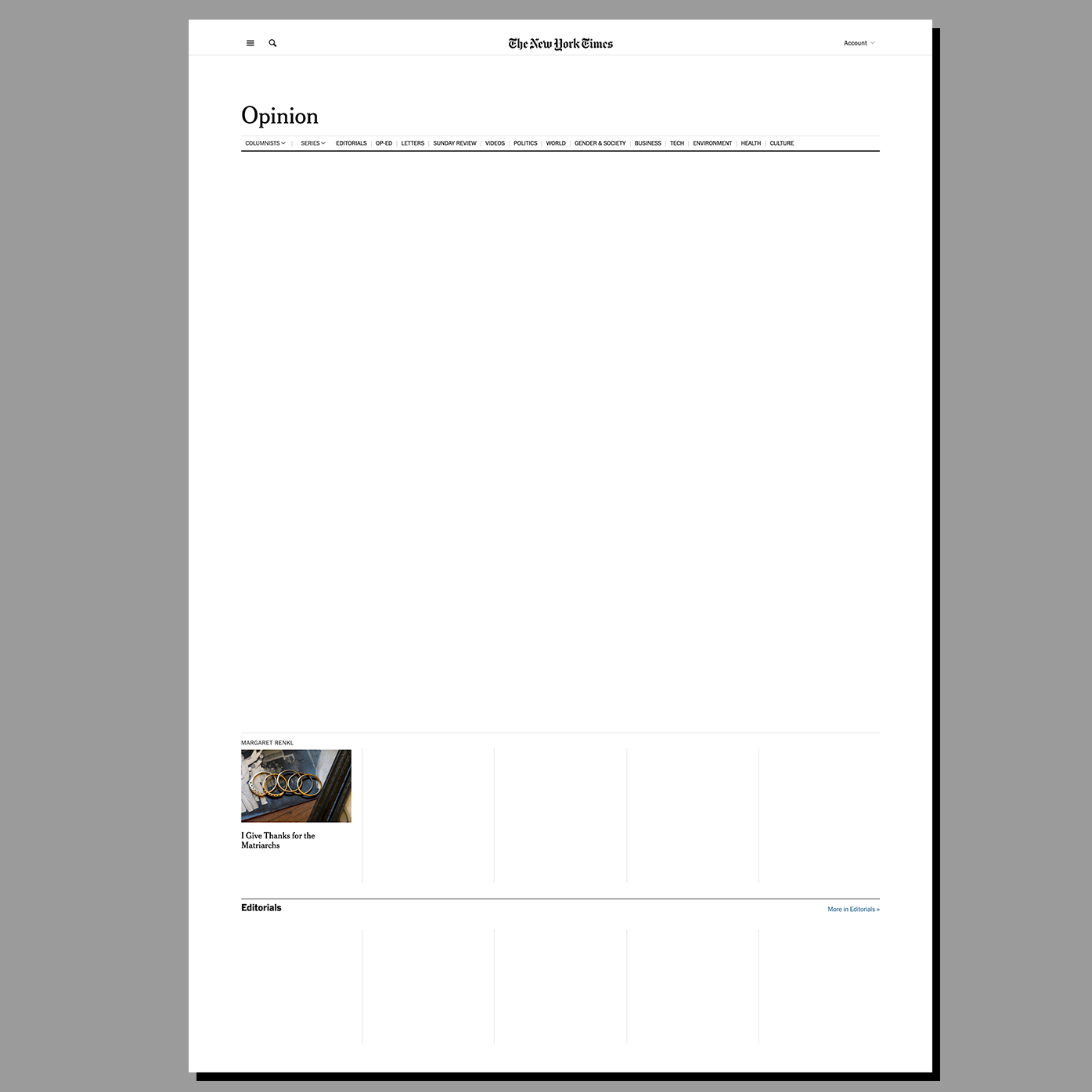

The results they shared are disquieting. At times, The New York Times and its peers become largely empty publications. In one instance, the New York Times Opinion page is entirely devoid of content, save for one tiny thumbnail in the bottom left corner. Whatever harrowing stats you’ve read about the gender gap, this single visualization hits harder.

The Normally team, however, has opted not to release the plug-in, and for a lot of good reasons. First, the way it’s coded isn’t really sustainable: It required hand-coding each person’s name and their identified gender (scraped from Twitter). Also, turnover is frequent in the industry, meaning that Normally would need a large team of people to constantly update that list for major news sites. Furthermore, the filter literally includes a list of female writers in its code, which anyone could view and perhaps even misuse.
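To make the mechanics concrete, here is a minimal sketch of a byline filter driven by a hand-maintained list, as described above. It is not Normally's code, which has not been released. The names `BYLINE_GENDER`, `Story`, and `visible_stories` are hypothetical, and the bylines are placeholders rather than real writers.

```python
from dataclasses import dataclass

# Hypothetical hand-maintained lookup of byline -> gender label.
# Placeholder names only; Normally's actual list is not public.
BYLINE_GENDER = {
    "Writer A": "woman",
    "Writer B": "man",
}


@dataclass
class Story:
    headline: str
    byline: str


def visible_stories(stories, lookup, keep="woman"):
    """Return only the stories whose byline maps to the `keep` label.

    Bylines missing from the lookup are hidden too, because the
    filter has no way to classify them.
    """
    return [s for s in stories if lookup.get(s.byline) == keep]


front_page = [
    Story("Headline one", "Writer A"),
    Story("Headline two", "Writer B"),
    Story("Headline three", "Writer C"),  # new hire, not yet in the lookup
]

shown = visible_stories(front_page, BYLINE_GENDER)
print([s.headline for s in shown])
```

Even at this scale the sketch shows both problems: any writer missing from the list silently disappears until someone updates it, and changing the single `keep` argument flips the filter to hide women instead.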

“As we know . . . there are unintended consequences of things [designers] make . . . there could be a reverse of this filter to hide women’s voices on the internet,” says Basil Safwat, design director of AI.

Finally, the plug-in probably isn’t something most people would want to use every day. The idea of a gender-filtered web is gutting when you first see it, but it’s not practical to tune out important news on a daily basis, even in the interest of a larger political purpose.

Instead, the project is meant to inform Normally’s own thinking on how we should be designing products for the internet. And the company believes it’s onto something as a result.

“What we identified as interesting is [viewing the web] according to your own criteria instead of an algorithm that’s operating on your behalf about what you want to see next,” says Safwat. “The idea is, you’re in charge of your own algorithm.”

In other words, while the gender gap affects what we read online, the algorithms at play are perhaps an even deeper-seated issue in the digital world. Normally has run other experiments, such as filtering conservative and liberal content, to see what that kind of control over news flow would feel like. That’s clearly not the right solution, either, as it would simply further ensconce us in a bubble of our own making.

And while the studio doesn’t claim to have an answer to the issue yet, their conclusion seems reasonable: We’re already living with the consequences of constantly curated voices and content, whether it’s the editors behind The New York Times or Fast Company, or the algorithms steering Twitter or Facebook. And the more automated these filters become, the more need there will be for tools that give users back some level of control.

Put another way, the levers determining what we see are already there, and someone is pulling them whether we like it or not. The solution isn’t to filter out men or to filter out certain viewpoints, but to identify those levers of the manipulation machine and, when they’re in error, break them appropriately.
