Be wary of any company that claims to be saving the world using artificial intelligence.

Last week, the New York Times published an investigation of One Concern, a platform designed to help cities and counties create disaster response plans. The company claimed to use a plethora of data from different sources to predict the way that earthquakes and floods would impact a city on a building-by-building basis with 85% accuracy, within 15 minutes of a disaster hitting a city. But the Times reports that San Francisco, one of the first cities that had signed on to use One Concern’s platform, is ending its contract with the startup due to concerns about the accuracy of its predictions.

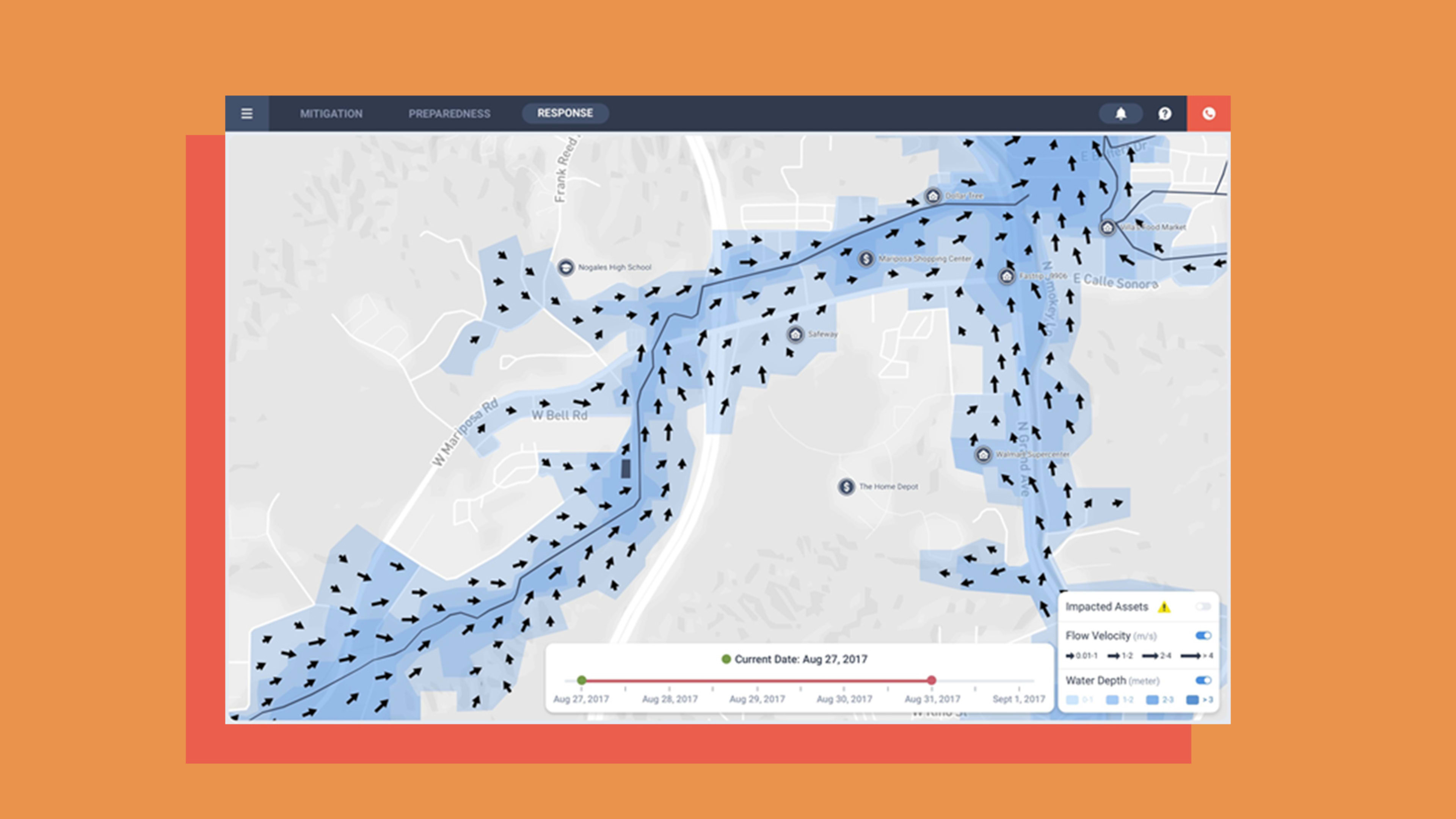

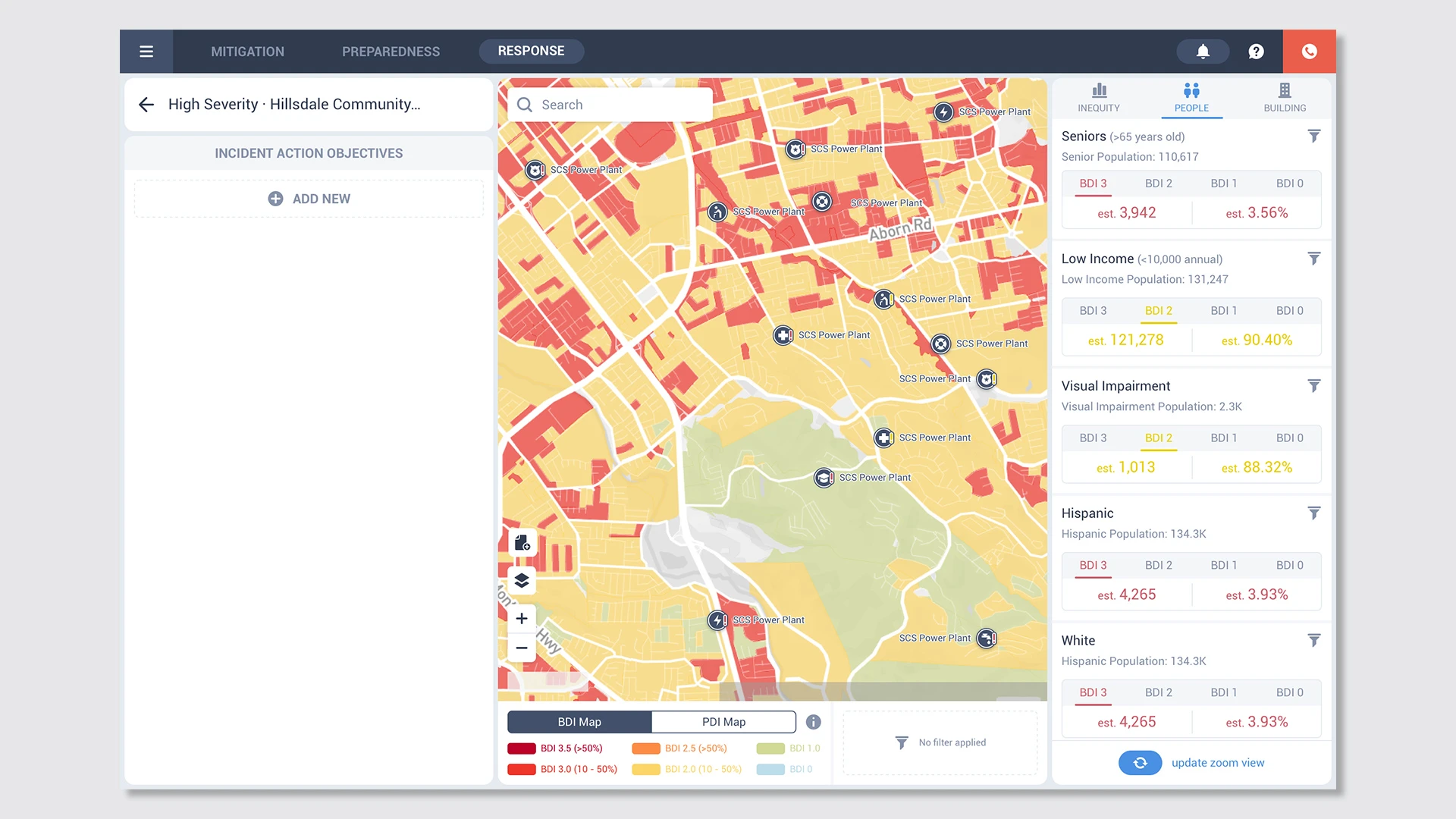

One Concern’s platform was honored in Fast Company’s 2018 Innovation by Design awards and 2019 World Changing Idea awards. The heat map-style interface is supposed to show city officials close to real-time predictions of damage after an earthquake or flood, as well as run simulations of future earthquakes and provide damage levels for each block, helping planners decide how to distribute resources to reach the people who will be most in need of help.

As I wrote back in November 2018 of One Concern’s interface:

It’s almost like playing SimCity, where planners click on a fault, watch what happens to each building, and then add icons like sandbags, shelters, or fire trucks to see how these preparation tactics influence the simulation. All of this happens within a relatively simple color-coded map interface, where users toggle on different layers like demographics and critical infrastructure to understand what the damage means in more depth.

It was this easy-to-use design that convinced San Francisco’s former emergency management director to sign on to use the platform because it was much simpler and more intuitive than a free service provided by FEMA to predict earthquake damage.

But the technical sophistication just wasn’t there, according to the report. An employee in Seattle’s emergency management department told the Times that One Concern’s earthquake simulation map had gaping holes in commercial neighborhoods, which One Concern said was because the company relies mostly on residential census data. He found the company’s assessments of future earthquake damage unrealistic. The building where the emergency management department works was designed to be earthquake safe, but One Concern’s algorithms determined that it would suffer heavy damage. The company also showed larger-than-expected numbers of at-risk structures because it had counted each apartment in a high-rise as a separate building. This employee shared all of these issues with the Times.

One Concern declined to comment publicly on the report. In the Times story, One Concern’s CEO and cofounder Ahmad Wani says that the company has repeatedly asked cities for more data to improve its predictions, and that One Concern is not trying to replace the judgment of experienced emergency management planners.

Many former employees shared misgivings about the startup’s claims, and unlike competitors such as the flood prediction startup Fathom, none of its algorithms have been vetted by independent researchers with the results published in academic journals. The Times reports that One Concern relies on widely available free public data, among other sources, while charging cities like San Francisco $148,000 to use the platform for two years. Additionally, the Times found that One Concern has started to work with insurance companies, in part because only a few cities have paid for its product so far. Insurers could use its disaster predictions to raise rates, and the move caused some former employees to feel disillusioned with the company’s mission.

As the Times investigation shows, the startup’s early success (with $55 million in venture capital funding, advisors such as the retired general and former CIA director David Petraeus, and team members like the former FEMA head Craig Fugate) was built on misleading claims and sleek design.

Excitement over how artificial intelligence could fix seemingly intractable problems certainly didn’t help. I wrote last year of One Concern’s potential: “As climate change heralds more devastating natural disasters, cities will need to rethink how they plan for and respond to disasters. Artificial intelligence, such as the platform One Concern has developed, offers a tantalizing solution. But it’s new and largely untested.”

With faulty technology that is reportedly not as accurate as the company says, One Concern could be putting people’s lives at risk.
