Facebook says it will soon expand the set of tools its content moderators use to limit the psychological harm some may experience after reviewing harmful or disturbing Facebook posts.

The news comes two days after the Verge’s Casey Newton published a damning exposé of a Facebook content moderation facility in Tampa, Florida, run by a third-party vendor, Cognizant. Newton interviewed current and former Facebook moderators who described an unsanitary and rancorous workplace where one moderator had a heart attack and died on the job, and where managers appeared to care more about pleasing Facebook than about ensuring employee welfare.

Facebook provided this response to the story:

> We know this work can be difficult and we work closely with our partners to support the people that review content for Facebook. Their well-being is incredibly important to us. We take any reports that suggest our high standards are not being met seriously and are working with Cognizant to understand and address concerns.

According to Facebook, its work to protect the mental and physical health of its content moderators, most of whom work at third-party community management centers run by companies such as Cognizant and Accenture, began long before the Verge’s story appeared.

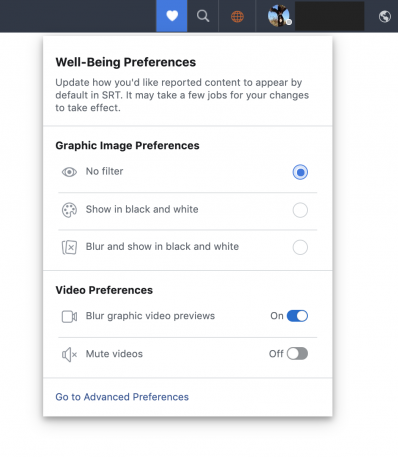

In May, the company quietly announced it would be giving moderators new controls to help shield themselves from the ill effects of continually watching disturbing content. Moderators will have a preferences pane where they can set their software tool to blur potentially disturbing images or video and mute audio. Facebook has been testing these options with moderators at its Phoenix and Essen, Germany, contract moderation sites. Moderators can also view video or images in grayscale, or stop the videos from auto-playing.

In some cases, moderators can determine whether content complies with or violates Facebook’s content policies by viewing only the text while leaving the images blurred, says Chris Harrison, a psychologist and member of Facebook’s global resiliency team. He adds that shielding moderators from harm begins with giving them more control over what they see and how they see it, so the mere existence of the new preferences helps. “The research that we have reviewed indicates pretty strongly that shifting things from color to grayscale or monochrome decreases the level of activation in the limbic system [the brain structures related to emotion],” he says.

Harrison has been talking with moderators and managers at the Phoenix and Essen sites and says he’s encouraged by the relief the preference options bring to those who use them. However, he admits that adoption has been slow, and the company is still educating moderators about the new options. (Facebook isn’t saying exactly how many moderators at the two sites have elected to use them.) Harrison says many moderators simply don’t feel they need the new options.

Facebook says it plans to refine the preferences based on feedback from the Phoenix and Essen sites, and to expand them to more moderation centers in the third quarter.

The company is also working on a new feature that blocks out the faces of the people in the images or video. As Harrison explains, humans take cues from the faces of other people around them to get a sense of their safety, an ingrained trait that people can’t turn off. “We’ve reviewed some research suggesting that if we block the faces in photos or videos we’re continuing to turn down the dial . . . in the limbic system,” he says.

The digital tools are part of a broader plan to preserve the well-being of Facebook’s 13,000 frontline content moderators around the globe. The plan also includes programs, developed by Harrison and others, that address five main areas: mindfulness, cognitive and emotional fitness, social engagement and support, physical health, and meaning and purpose.
