It wasn’t supposed to turn out this way.

On the eve of a big announcement, Adobe wrote me to offer an exclusive first look at Characterizer. It’s a new feature in the company’s Character Animator app, and it allows you to take any portrait drawn in any style, then apply it like a filter to your own face in real-time video.

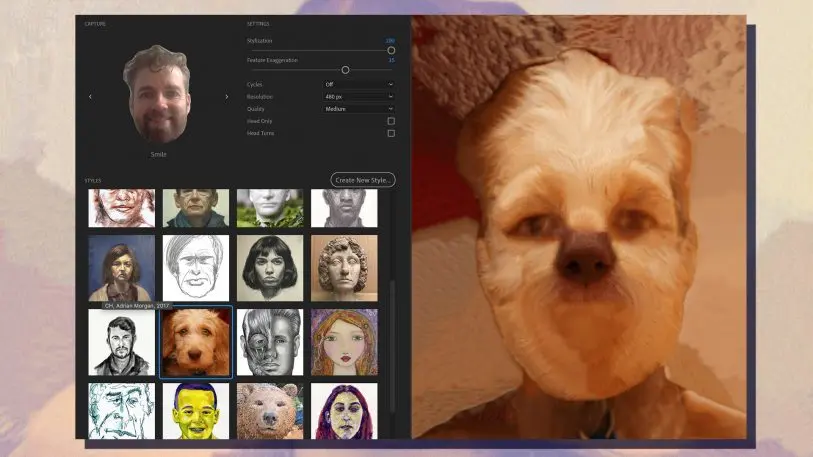

Design and AI nerds know this trick as “style transfer,” and it already exists in apps like Prisma. Adobe is trying to take the medium further with options for deep customization, moving this nascent tech toward ubiquitous professional use. The company showed off a sneak peek last year under the code name “Project Puppetron.” In an incredible onstage demo, the technology rendered someone as a bronze statue in real time. It even synced a stylized person’s lips to their voice, enabling one to broadcast as, say, a Rembrandt painting on Twitch.

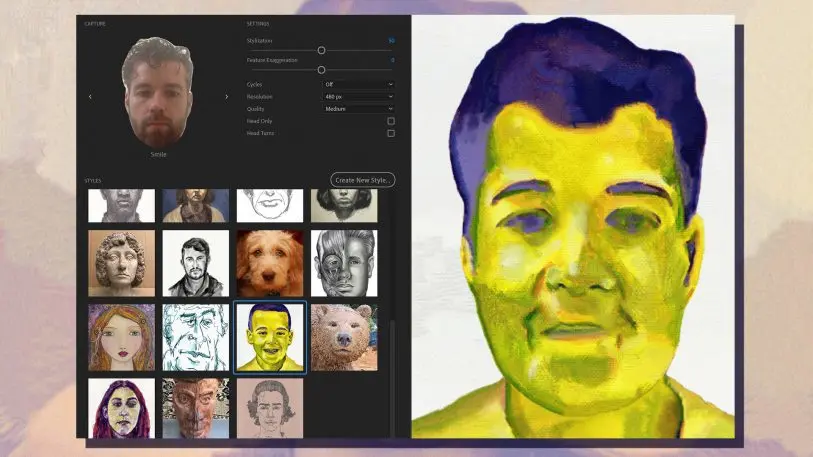

Unfortunately, after testing Characterizer for myself, I can report that results may vary: the app turned me into a grotesque monster. At best, I looked something like the late President Ronald Reagan. And at worst . . . Conan O’Brien meets an inflatable sex doll.

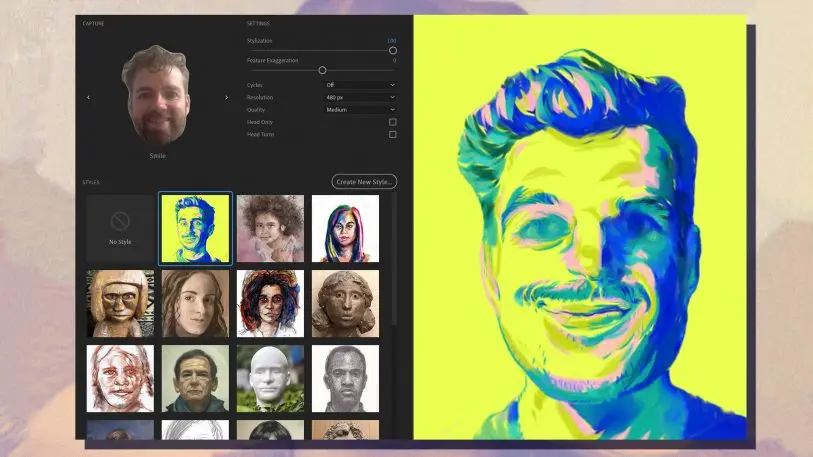

I really wanted to like Characterizer. I was downright excited to try it. In a video conference with Adobe’s Sirr Less, who is senior product manager for Character Animator, Characterizer worked superbly. Throughout the call, he presented to me as a teenage witch with purple hair who accentuated points with a flourish of her wand. He showed me how, while running the app on a stock 13-inch MacBook, the software cued him to take a few photos of his face smiling, then frowning. It asked him to read a few words aloud: “Photoshop.” “Lightroom.” All the while, Characterizer was using artificial intelligence to understand how his mouth moved to create certain phonemes.

After just a couple of minutes of setup, Less was off, turning his face into a comic book character one moment and a wooden sculpture the next. In each instance, the intricacy of the original art shone through. It’s just the sort of machine learning trick we’ve seen online before, but now it’s coming to Adobe’s flagship animation product. On September 12, it will be available for anything creators want to do, and it will be interoperable with all other Adobe products, too. The significance of this platform-wide interoperability can’t be overstated. Characterizer is really Adobe’s first attempt at making a viral AI phenomenon a regular part of a creative’s routine.

“There’s always going to be someone with the next Animoji, or Snap filter or lens. Whatever the thing of the moment, there will always be someone who does that and well,” says Less. “But the real beautiful advantage to our animation products is not just they work, but how they work with each other.”

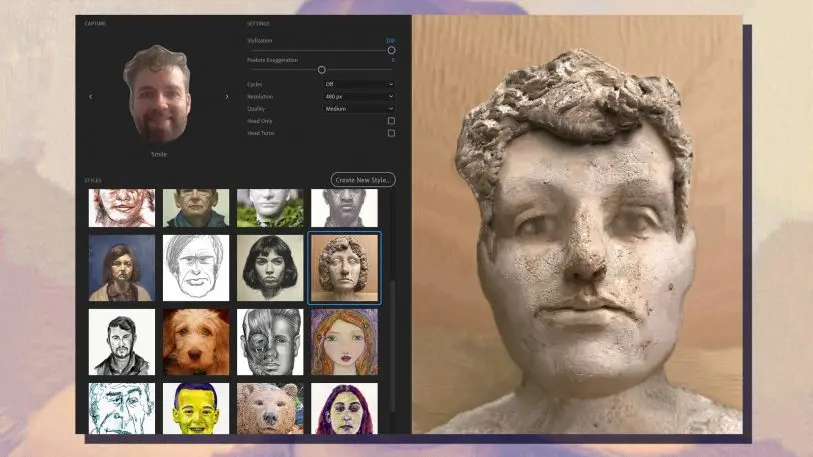

As I install my seven-day trial of Character Animator to test Characterizer for myself, I find the setup to be just as easy as promised. With my MacBook on my lap, I make faces at my screen, following the audio cues of a tutorial. I smile. I say woo-hoo. I do it all! Characterizer seems to see me fine, and it cuts my head out into a dozen permutations. But when I try to apply a filter?

Reagan.

A personalized bust that I WOULD NOT PAY FOR!

What happened to my beard? What happened to my hair? What happened to me?

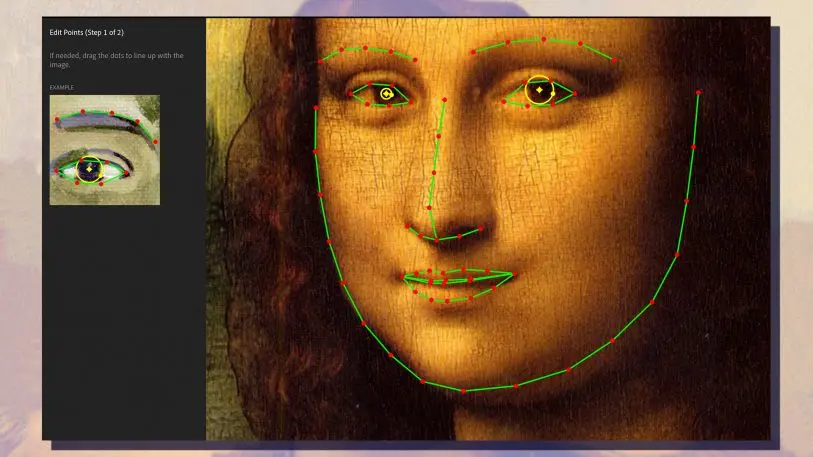

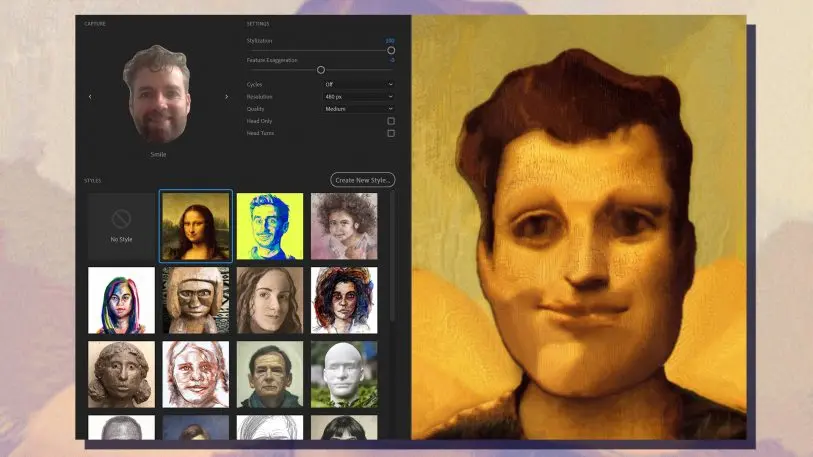

Since Characterizer lets you import any portrait you like for style transfer, I decide to throw it a lob: the Mona Lisa, the most famous portrait of all time. Surely this will be superb. And indeed, as I set her up, Adobe’s “Sensei” AI finds her mouth and eyes perfectly. I help paint in her hair, face, and neck. This will allow the software to puppet her face onto mine.

But once it’s done? I see a very scary, NSFW Conan. And pretty much anything I say results in him making an O-face. So I try training the software on my face again, twice, in different lighting, and I get more or less identical results. Characterizer might work great for some people, but it doesn’t work very well for me.

When I ask Adobe if my magnificent beard might have been confusing the model, I’m assured by a spokesperson that, “This is the very early part of an ongoing development that will include more specifics like the machine learning responding to diversity in facial hair and other idiosyncrasies.” Did Adobe just throw shade? WHAT OTHER PERSONAL “IDIOSYNCRASIES” ON MY FACE ARE YOU REFERRING TO, ADOBE?!?

In all seriousness, Adobe isn’t betting the company on Characterizer. It’s a new feature that is itself still technically in beta. From speaking with Less, I gathered that the development team sees the feature mostly as a user-friendly toe dip into the full world of Character Animator, a relatively young app for Adobe that’s less than a year out of beta.

AI and machine learning are revolutionizing the digital imaging industry. We’ve already seen how a single person dubbed “Deepfakes” has gone so far as to beat Hollywood mainstays like ILM at their own game. For now, it’s funny when this technology doesn’t work as anticipated. But give it just five years, and these AI tricks may need to be as core to Adobe’s experience as magic wands and clone stamps.
