An Iowa Uber driver who is transgender was required to travel two hours to a local Uber office after a facial-recognition security feature was unable to confirm her identity, CNBC reports. Uber says such issues can often be resolved with a simple phone call, but for Janey Webb, the driver in question, the issue meant she missed out on about three days of work, including a potentially lucrative July 4.

And, according to CNBC, the problem isn’t limited to her. “I don’t think Uber is this evil company,” said Lindsay, another driver who was suspended for photo inconsistencies. “Yeah, their focus is on profit, and one of those things is automating as much as possible, and when you have a system that is so overly automated, people like myself fall within the cracks.”

An Uber spokesperson defended the company’s policies in an email to Fast Company. “We want Uber to be a welcoming, safe, and respectful experience for all who use the app,” the spokesperson wrote. “That’s why we maintain clear Community Guidelines and a non-discrimination policy for riders and drivers, in addition to many safety features. We continue to focus on ways to advance our tech and constantly improve our app experience.”

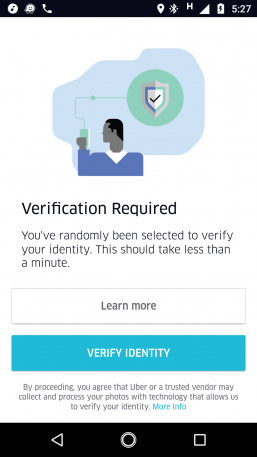

The feature in question, called Real-Time ID Check, is designed to reduce fraud by periodically prompting drivers to submit selfies to Uber before they begin a driving session. The company uses Microsoft Azure’s Cognitive Services technology to match the selfie to photos on file, it says.

“During our pilot of Real-Time ID Check over the past few months, the majority of mismatches were due to unclear profile photos,” the company said when the feature launched, in 2016. “And more than 99% of drivers were ultimately verified. Given that verification takes only a few seconds to complete, this feature proactively and efficiently builds more security into the app.”

Uber didn’t immediately provide more up-to-date statistics on how often or in what situations the tool has failed. But this apparent failure raises broader questions about how far facial recognition can be relied on when people’s appearances change over time. Webb began her gender transition last year, around the time she started driving for Uber, and her appearance is now quite different, according to CNBC.

Face-recognition and so-called automatic gender-recognition (AGR) systems have already provoked concerns among people with diverse gender identities, according to researchers at the University of Maryland. Other researchers have found that people who transition gender can present a challenge for facial-recognition programs.

To better train such algorithms, Karl Ricanek, a professor of computer science at the University of North Carolina at Wilmington, had begun compiling a set of links to YouTube videos showing people’s physical evolutions during the transition process. The Verge reported last year, however, that he has since stopped sharing the list because of privacy concerns.

“What kind of harm can a terrorist do if they understand that taking this hormone can increase their chances of crossing over into a border that’s protected by face recognition? That was the problem that I was really investigating,” Ricanek told The Verge about his research, which has been funded in part by the FBI and the Army.

Separate research has shown that other facial changes, such as those caused by weight changes or plastic surgery, can also throw off facial-recognition systems. Researchers have also warned that leading commercial face-recognition algorithms have lower rates of accuracy for dark-skinned faces, echoing a trend in which algorithms can amplify human bias. As these systems are increasingly used to verify identity, or to identify criminal suspects, researchers and the companies that rely on them will have to pay close attention to exactly when and why they fail.
