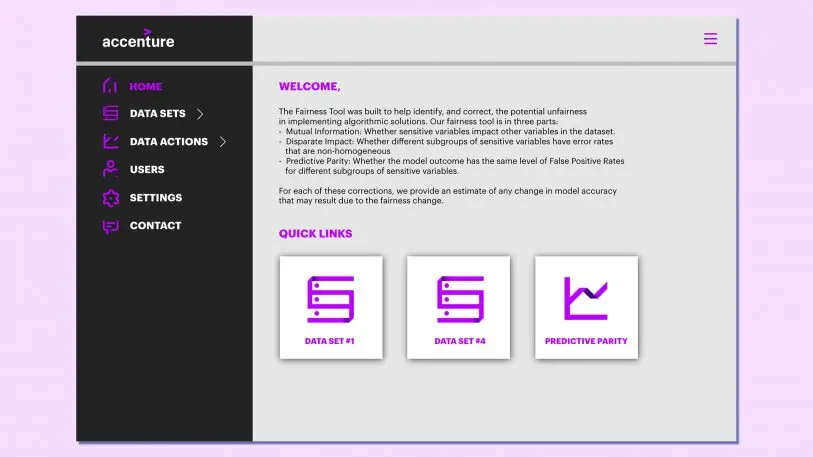

Algorithms might help decide the terms of your next loan, pull data from your online shopping history to help determine your credit score, assess whether you should be offered a job, or decide whether someone is likely to commit a crime in the future. In theory, AI can eliminate some bias on the part of people making decisions–but since algorithms are designed and fed data by humans, the results often still aren’t fair. A new tool from Accenture, called the Fairness Tool, is designed to quickly identify and then help fix problems in algorithms.

“[What] I’m hoping is that this is something that we can make accessible and available and easy to use for non-tech companies–for some of our Fortune 500 clients who are looking to expand their use of AI, but they’re very aware and very concerned about unintended consequences,” says Rumman Chowdhury, Accenture’s global responsible AI lead.

The tool uses statistical methods to identify when groups of people are treated unfairly by an algorithm–defining fairness as predictive parity, meaning that the algorithm is equally likely to be correct or incorrect for each group. “In the past, we have found models that are highly accurate overall, but when you look at how that error breaks down over subgroups, you’ll see a huge difference between how correct the model is for, say, a white man versus a black woman,” she says.
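To make the idea of errors breaking down unevenly across subgroups concrete, here is a minimal Python sketch. It is not Accenture's code: the function names `error_rate_by_group` and `largest_gap` and the toy data are hypothetical, and it checks only the simple notion described above, whether a model is wrong equally often for each group.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: share of wrong predictions} for each group label."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def largest_gap(rates):
    """Difference between the worst and best per-group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: true outcomes, model predictions, group labels.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = error_rate_by_group(y_true, y_pred, groups)
overall = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall, rates, largest_gap(rates))
```

In this made-up example the model is right 75% of the time overall, yet it makes no mistakes for group "a" and is wrong half the time for group "b", the kind of gap an overall accuracy figure hides.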

The tool also looks for variables that are related to other sensitive variables. An algorithm might not explicitly consider gender, for example, but if it looks at income, it could easily have different outcomes for women versus men. (The tool calls this relationship “mutual information.”) It also looks at error rates for each variable, and whether errors are higher for one group than for another. After this analysis, the tool can fix the algorithm–but since making this type of correction can make the algorithm less accurate, it shows what the trade-off would be, and lets the user decide how much to change.
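How the Fairness Tool computes this relationship isn't described, but "mutual information" is also a standard measure from information theory of how much knowing one variable tells you about another. The sketch below assumes that textbook definition for categorical data; the function name `mutual_information` and the sample columns are hypothetical.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """Mutual information, in bits, between two equal-length categorical columns."""
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts of each (x, y) pair
    px = Counter(xs)              # counts of each x value
    py = Counter(ys)              # counts of each y value
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Hypothetical columns: income band tracks gender exactly, region does not.
gender = ["f", "f", "m", "m"]
income_band = ["low", "low", "high", "high"]
region = ["north", "south", "north", "south"]

proxy_score = mutual_information(gender, income_band)
unrelated_score = mutual_information(gender, region)
print(proxy_score, unrelated_score)
```

A score near zero means the variable carries no information about the sensitive attribute; a high score, as with the income band here, flags a variable that could act as a stand-in for it even when the attribute itself is left out of the model.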

There are multiple ways to define fairness–one recent talk at a conference on fairness considered 21 definitions. Since it’s difficult to agree on what “fair” means, there are statistical limitations in modeling, and adjusting for bias in every variable sacrifices accuracy, Chowdhury says that “there’s no way to push a button and settle for algorithmic fairness.” But the Fairness Tool is a way to begin fixing some important problems.

Accenture is currently fielding partners for testing the tool, which it has prototyped so far on a model for credit risk, and is preparing for a soft launch. The tool will be part of a larger program called AI Launchpad, which will help companies create frameworks for accountability and train employees in ethics so they know what to think about and what questions to ask.

“Your company also needs to have a culture of ethics,” she says. “If I’m just a data scientist and I’m working on a project–and like all projects I’m behind on time and I’m way over budget and I’m trying to get this thing done–and I run it through this Fairness Tool and I find that actually there’s some serious flaws in my data . . . if I don’t have a company culture where I can go to my boss [and] say, ‘Hey, you know what, we need to get better data,’ then selling all the tools in the world wouldn’t help that problem.”

Others, like Microsoft and Facebook, are working on similar tools. Eventually, Chowdhury says, this type of analysis needs to become standard. “I sometimes jokingly say that my goal in my job is to make the term ‘responsible AI’ redundant. It needs to be how we create artificial intelligence.”
