Last week, YourNewsWire.com, a website that traffics in wild conspiracy theories, published a blog post falsely claiming that the gunman in the recent Sutherland Springs, Texas, church shooting was a member of an anti-fascist group “targeting white conservative churches.” The post spread rapidly online, and reputable fact-checking sites, including Snopes and PolitiFact, debunked the story within 24 hours, but not before it had been shared more than 250,000 times on Facebook.

The dynamic highlights a fundamental problem with fact-checking in an era of misinformation and disinformation spread virally on social media: Even when erroneous reporting is caught and corrected quickly, there’s no system in place to ensure the true story ever reaches the people who consumed or shared the false “news” in the first place.

Facebook’s efforts to resolve the issue have lately come under fire. Critics argue that the social network’s solution, which relies on third-party fact-checkers like PolitiFact and the Associated Press to manually tag questionable articles in News Feed with a “disputed” label, is superficial and slow, a days-long process that’s no match for the scale and speed of fake news. It also doesn’t reach the users who read the articles before they were flagged. That has left some, including Senators Mark Warner and Jack Reed, wondering whether Facebook and other tech companies like Twitter and Google ought to implement a more far-reaching fact-checking service that could retroactively notify users who had fallen prey to disinformation. Will it ever happen?

Senator Reed proposed the idea earlier this month at the Senate Intelligence Committee hearing on Russia’s manipulation of the tech platforms during the 2016 election. Pressing Facebook general counsel Colin Stretch on whether the company feels “an obligation to notify” the 126 million (or more) people reached by ads and content from Kremlin-linked operatives and troll farms on Facebook, Reed asked if they had the “technical skill” to implement such a system. Senator Warner later compared the concept to a hospital that realizes it inadvertently exposed patients to a disease. “The medical facility would have to tell the folks who were exposed,” he said. (A public petition launched on Change.org last month also calls on Facebook to notify its American users if they were exposed to Russian propaganda; so far it has received more than 88,000 signatures.)

The queries hinted at a larger idea: Could Facebook, in addition to labeling “disputed” stories, also alert readers if a story they clicked was later found to be inaccurate or intentionally misleading? Call it targeted fact-checking: Facebook already delivers tailored ads to users based on the content they consume; could it do the same with fact-checked news? It would be, Reed suggested, the social network equivalent of a newspaper correction, only one that, with the tech companies’ expansive data, could actually reach its intended audience, such as the 250,000-plus Facebook users who shared the debunked YourNewsWire.com story.

At first glance, it seems like an obvious, impactful band-aid for viral disinformation and misinformation. But as a number of experts in the field tell Fast Company, nothing about this issue is simple. At Facebook’s scale, any technical solution, even one as seemingly uncomplicated as a fact-check notifier, carries sweeping implications, a reality that shows just how intractable the “fake news” problem is for Silicon Valley.

“It would be great for people to know when they are exposed to false or misleading information,” says PolitiFact’s executive director Aaron Sharockman. “Is it feasible? Sure, in fact, I’d imagine it’s pretty easy. Will it happen? I wouldn’t hold my breath.”

Technically Challenging?

In the Senate hearing, Facebook’s Stretch indicated there would be substantial “technical challenges associated” with notifying users that they’ve been subjected to disinformation. (The company has struggled to offer a definitive estimate of users impacted by Russian propaganda campaigns, and has been accused of deleting data useful to researchers trying to understand those efforts.) But generally speaking, “it’s absolutely not technically difficult,” says Krishna Bharat, a former research scientist at Google who led the search giant’s early News product efforts. Facebook, Twitter, and Google, after all, have built their businesses around tracking exactly what their users click.

“They are already telling users before they share [a “disputed” story]; they could equally tell you after it’s shared,” Bharat continues. “Frankly, it’s probably a little easier to do so after you shared it because [fact-checkers] would have more time to say it was ‘disputed.'”

When asked about Senator Reed’s idea, a Facebook spokesperson declined to speculate on whether this type of fact-checking system would be technically feasible, even though Stretch had already pointed to technical challenges in his testimony. “We’re aware that there is still a lot of work to do and we are continuing to iterate with our fact-checking partners on multiple techniques,” the spokesperson says. “There’s no ‘one-size-fits-all’ approach that works everywhere, which is why we’ve been working on a diverse range of potential solutions.”


In any case, it’s likely the company will have to leverage its technology and distribution channels more to scale its fact-checking efforts. “Senator Reed was asking the right questions,” says Arjun Hassard, a product head at Factmata, a London-based startup focused on developing AI-driven tools to reduce online misinformation. In his view, third-party fact-checking is only a part of the solution. To accelerate the process (Bloomberg recently reported Facebook’s partners only have the resources to debunk a handful of stories per day), the big tech players will need to rely more on automation and machine learning to detect and dispute false news. “I think manual fact-checkers are extremely well-intentioned and important, but they are dealing with a robust, complex, and evolving enemy,” Hassard says.

Ministry Of Truth

Many observers, though, doubt the tech companies will ever go so far as to implement a retroactive notification system or build similarly forceful fact-checking tools. One reason they’ve outsourced the fact-checking process is that they’re wary of being perceived as publishers; Facebook, Google, and Twitter claim they’re mere tech platforms, and thus are not liable for the content propagated on their services. “The [tech] platforms are very hesitant to do anything manually because they know it doesn’t scale–there is a cultural problem inside these companies in terms of doing any one-off fixes,” says Bharat. “It’s a slippery slope where they would be forced to editorialize everything, and they don’t like to editorialize anything. Once they start doing something manually in one context, they are going to be asked why they don’t do more: ‘Oh, what about this other context?’ They also worry about tinkering with things where they may be accused of political bias.”

In other words, they don’t want to be seen as a 1984-style “Ministry of Truth,” dictating to the public what’s real and what’s not. If a company starts notifying users when they’ve consumed an article later found to be foreign government propaganda, should it do the same for celebrity gossip that’s later disputed? What about reporting on hot-button political topics that are inevitably contested by various sources, or even news about one of the tech companies that the company itself denies? It’s not hard to imagine any number of fraught scenarios in which Big Facebook might appear to be acting as a less-than-impartial arbiter of truth. PolitiFact’s Sharockman says he likes the idea of being able to reach affected users directly with facts but cautions that it would represent “a pretty aggressive response from platforms like Twitter and Facebook and one that I think would be met with a lot of backlash from users.”

Others argue there’s low-hanging fruit Twitter, Facebook, and Google could go after without causing too much hand-wringing. To seem less intrusive, Facebook could experiment with an opt-in notification system that lets users choose from a list of fact-checking partners, who could send them, say, a monthly list of stories they clicked that the partners later disputed. Google could warn users only about the most egregiously false reporting: content that crosses PolitiFact’s “Pants On Fire” threshold, like a recent “news” story on YouTube about former President Barack Obama being arrested for smuggling drugs in Japan, which went viral and has since racked up nearly 300,000 views. Or Twitter could devise a tool that lets a select group of reputable news organizations push corrections to readers who clicked, retweeted, or favorited reports that were later retracted.

It’s a long-standing problem on Twitter, where the original post often garners thousands of retweets while the correction receives a fraction of the attention. Only recently, for example, reporters sent a story rocketing across Twitter that implied President Donald Trump committed a faux pas at a koi pond; when that turned out to be misleading, however, there was no way for the journalists to issue a correction that could reach all the users who continued to spread the now-debunked story across the network. (Spokespeople for Google and Twitter declined to comment for this story.)

Bharat recommends another measure: find ways to buy fact-checkers more time to check the facts. Before a misleading story spreads too far across the social networks, Bharat suggests the tech companies could put triggers in place to flag a questionable story for human review, say, when it hits 10,000 shares online. If machines find warning signals in the content–like evidence of hate speech or hyper-partisanship, or that the link is being shared by users or bots who have a history of spreading false news–“at that point, I think they should take the foot off the gas pedal and slow this [sharing] down while it’s being checked,” he says.

In that way, ideally, PolitiFact could “dispute” the reporting long before a false story like YourNewsWire.com’s on the Texas shooter ever reaches hundreds of thousands of shares. (Facebook has said this label cuts a link’s future impressions by 80%.) “Slow down the spread and”–if it turns out to be false–“hit the brakes completely to make this thing disappear,” Bharat says. “The tech companies can do that.”

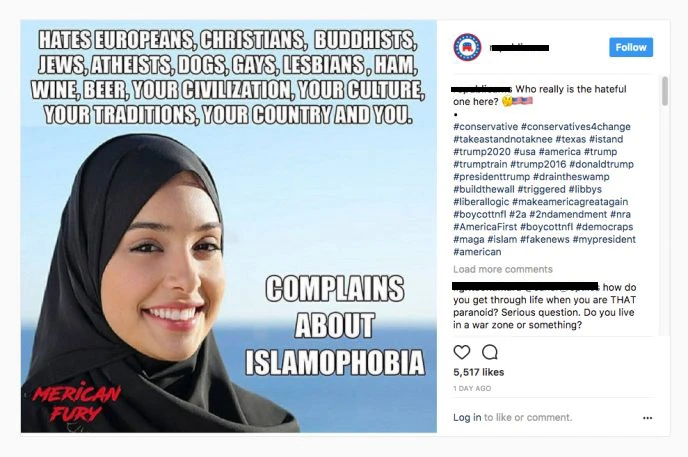

Badge Of Honor

Even if some of these ideas were implemented, there’s a larger concern for big platforms that expand their fact-checking efforts: Rather than convincing users that a story is debunked, labeling it false might in some cases give the article more credibility. A “disputed” label from Facebook or PolitiFact could become what’s called a “badge of honor” among readers predisposed to believe conspiracy theories like the Hillary Clinton “Pizzagate” story.

“More academic research is finding that what appear to be effective methods for combating mis- or disinformation may not be so–that calling it out as ‘fake news’ very often cements it further and tends to give it more distribution among those inclined to buy into it due to personal biases,” says a former Twitter executive familiar with the company’s efforts on this front. Pushing a notification to users about disputed reporting, this exec adds, may only enhance “this backfire effect.”

To counter this issue, researchers have recommended more consumer education about what “fake news” looks like, as well as more analysis of who the most effective messengers are for delivering fact-checked content, what one academic study calls “ideologically compatible sources.” That is, rather than Facebook promoting a fact-check label or notification, a clarification on “Pizzagate” might resonate more with some people if it came from Fox News, just as a correction on the Trump-koi pond faux-controversy would likely be more digestible for others if it came from MSNBC. Better yet might be receiving either bit of information from a trusted friend. “What’s been found again and again,” says the former Twitter exec, “is that peer shame is a really effective way of getting someone to back off from engaging with or redistributing misinformation or fake news.”


Indeed, among some groups inside Twitter, the hope (or fantasy) is that users will self-police. As Twitter acting general counsel Sean Edgett said in response to Senator Reed’s questioning on this topic, the real-time dialogue happening on the social network can combat “a lot of this false and fake information right away, so when you’re seeing the tweets [with misinformation or disinformation], you’re also seeing a number of replies showing people where to go, where other information is that’s accurate.” Facebook, too, has explored ways to promote user-generated content that expresses disbelief about news stories, albeit with mixed results.

Still, regardless of whether these types of solutions are rolled out more aggressively, a larger question remains: will any of them actually sway user sentiment around false news? Some observers believe it’s important for the tech companies to keep testing new ideas transparently, including versions of the one Senator Reed proposed, both to learn what works and to keep studying why and how certain news stories manipulate public opinion.

“Most of the solutions that the tech companies would readily leap to may actually make the problem worse than better,” the former Twitter exec says. Still, this source adds, better for them to keep pushing ahead with experiments, even if not all of their solutions prove sustainable or scalable. “It’s a start,” this person says. “Take the low-hanging fruit first.”
