In the six months since OpenAI let ChatGPT loose on the world, “the discourse” around generative AI has become omnipresent, and borderline incoherent. Forget whether artificial intelligence will remake the economy or kill us all; no one can agree on what it even is: an alien intelligence, a calculator, that Plinko game from The Price Is Right? But while experts joust over metaphors, three-quarters of Americans are fretting over something much more practical: Can we trust AI?

For Artefact, a Seattle-based studio founded to “define the future through responsible design,” this is the question that actually matters. “Am I understanding what’s actually in front of me? Is it a real person, is it a machine?” says Matthew Jordan, Artefact’s chief creative officer.

Visual stunts like the swagged-out Pope have already introduced a new vernacular of images “cocreated” with AI. But what’s funny in a viral meme seems less so in political attack ads. If the hypesters are right, and AI is coming for every kind of information we encounter—from our work emails and Slack messages to the ads we see on our commutes and the entertainment we consume when we get home—how important is it that we know exactly how much of that information was created with AI? And how can designers make it easier for us to trust the information we’re getting?

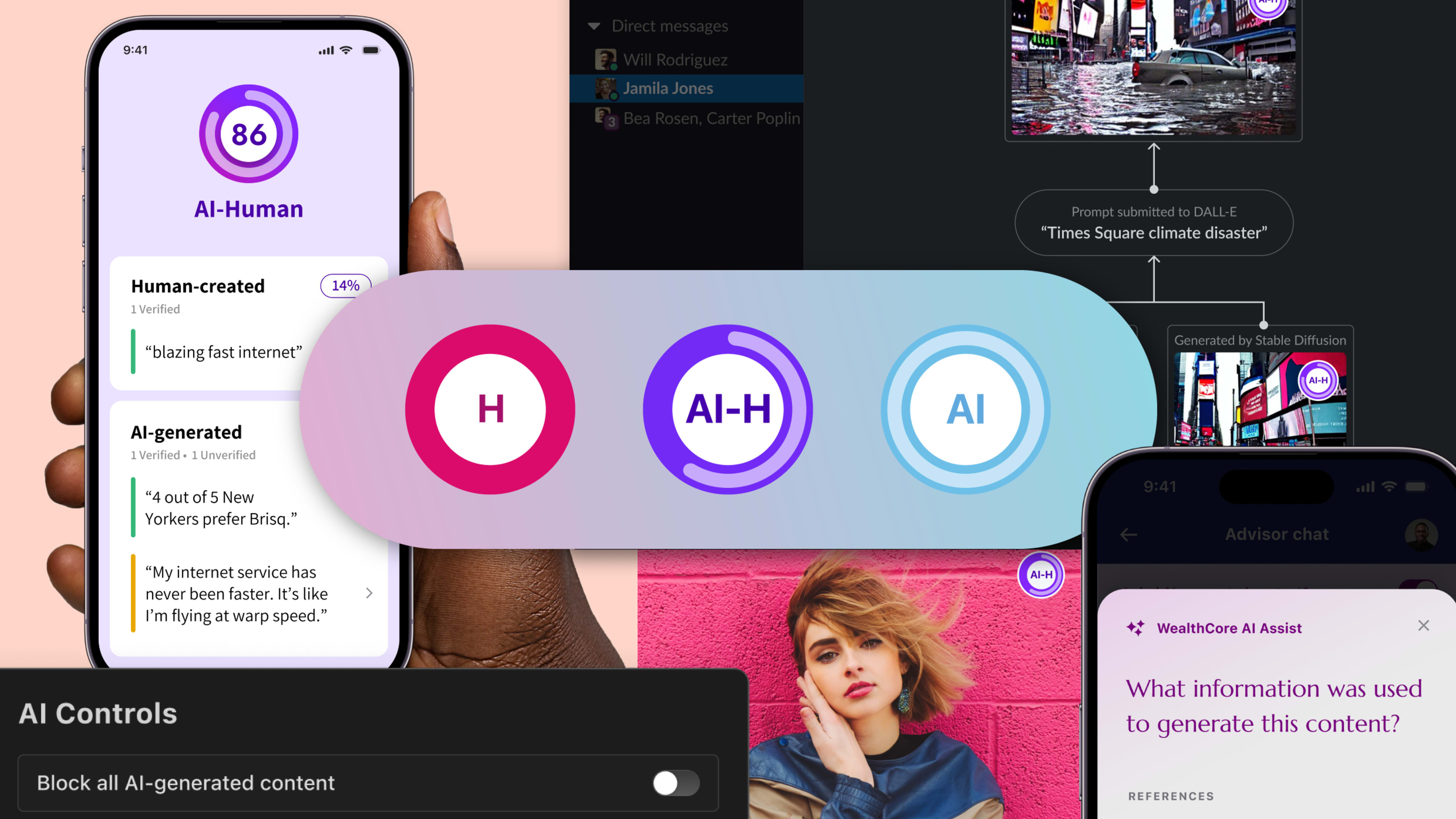

Artefact believes that building trust around generative AI boils down to three principles: transparency, integrity, and agency. The first two—showing who you are (transparency) and meaning what you say (integrity)—are familiar enough concepts from human relationships and institutions. But agency is important precisely because AI isn’t human.

Even though generative AI (especially chatbots powered by the latest large language models) can convincingly mimic some of the psychological cues that human beings consider trustworthy, that same mimicry can quickly become unsettling if there’s no way to predict or steer the system’s behavior. Agency is about making clear to users how they can shape what the system does. “They want to maintain control over their experience,” says Maximillian West, Artefact’s design director.

At Co.Design’s request, Artefact translated these principles into three scenarios that envision what more approachable encounters with AI could look like. “We didn’t want to make the judgment that AI[-generated] content is bad,” West says. “We were interested in the idea of giving users more affordances to have that sense of control, because that feeling of trust is not being garnered in the same way it is with a human.”
