OpenAI is No. 1 on Fast Company’s list of the World’s 50 Most Innovative Companies of 2023.

On a bright Monday morning at the end of January, the six-person leadership team behind ChatGPT—the most advanced AI chatbot ever to be deployed to a mass audience—gathers for its weekly meeting.

It’s taking place in OpenAI’s San Francisco headquarters, a former factory that’s now home to 375 researchers, engineers, and policy specialists—the people responsible for creating the breakthrough technology. Past the building’s imposing gate, through a courtyard edged by palm trees, and down the hall from a pebble fountain that emits a spalike burble, the team members arrive at a snug conference room, laptops in hand.

OpenAI chief technology officer Mira Murati takes a seat at the center of the table. Winter sunshine filters through a row of casement windows and a cluster of hanging plants. Murati, her hair drawn up into a haphazard ponytail, sips a glass of green tea and turns to a fresh page of a yellow legal pad, signaling for the ChatGPT team to launch into its update. There’s a lot to talk about.

Beyond these walls, the conversation around ChatGPT has crescendoed from buzz to roar in the 55 days since its launch. Members of Congress, journalists, tech insiders, and early adopters have expressed awe, anxiety, and even existential dread about the potential of the service, which allows users to plug in keywords or phrases (“summarize Supreme Court rulings,” “in the style of Dr. Seuss”) and receive often mind-blowingly good results. The largest school district in the country has banned ChatGPT. Podcast hosts turned armchair AI quarterbacks have likened its significance to the iPhone. That very morning, Microsoft, which previously invested more than $1 billion in the almost 8-year-old startup, announced that it will further integrate OpenAI’s tools into its products while pumping another reported $10 billion into the company. Meanwhile, ChatGPT’s leaders, having rushed to release the product last fall, are grappling with its runaway success. Designed for research purposes, ChatGPT has now reached 100 million people, according to analytics firm Similarweb—and is showing some of its limitations. (OpenAI doesn’t disclose user numbers.)

First on the team’s meeting agenda is bullshit—or, to be precise, what the team is discovering about how ChatGPT balances accuracy and creativity in its responses. Rather than retrieve predetermined answers, ChatGPT produces a fresh reply to every user prompt—this is why it’s known as generative AI and what makes it clever. But technology designed to generate stuff on the fly can end up “hallucinating,” as AI developers call it. In layman’s terms: “bullshitting.”

Murati speaks for the first time, asking the group what it’s learned about any correlation between ChatGPT’s accuracy and its imagination. “Do we know the relationship between the factuality balance and the creativity evals?” she asks. Dressed in head-to-toe black, she’s deliberate and professorial as she probes the chatbot’s reliability issues—a concern that, beyond this room, is inspiring hair-on-fire op-eds and Twitter threads.

Researcher Liam Fedus, a former particle physicist and Google Brain scientist who would look at home on a surfboard, cocks an eyebrow and admits that he doesn’t fully understand the relationship between fact and fiction in ChatGPT, telling Murati, “There might be, like, some weird tension, possibly.”

“I think there will definitely be tension,” Murati says, encouragingly. ChatGPT is OpenAI’s own creation, an innovative refinement to what AI researchers call a large language model, but the team is still discovering and shaping its capabilities. She nods for him to continue.

“We’re seeing more and more evidence that our base models have this sort of brilliance in creative writing,” he says, before noting that they’ve been honing them to put more emphasis on delivering a correct answer. But as OpenAI has “sort of steered toward a more usable model,” he notes, “maybe we’ve lost some ability for, you know, just really brilliant writing.”

“Mm-hmm, mm-hmm,” Murati continues nodding as she scribbles on her legal pad, having provided a gentle nudge that will unfurl new experiments designed to test accuracy versus creativity in the coming weeks. Billions of dollars and millions of jobs are potentially riding on this line of inquiry; failure to prove ChatGPT’s trustworthiness could jeopardize OpenAI’s position as the poster child for Silicon Valley’s most promising technology in decades and relegate the company’s tools to novelty status. Yet Murati has the sangfroid of someone leading a graduate-level seminar.

Less than a year ago, OpenAI was a well-regarded, if rather quiet, organization with a stated mission to “ensure that AGI (artificial general intelligence)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity.” Many companies use AI, and consumers interact with it every time they swipe up on TikTok or share on Facebook. OpenAI has been building toward something far grander: a system with general knowledge of the world that can be directed to solve an almost infinite number of problems. Though half of the company’s own employees guess that achieving AGI is 15 years away, according to MIT Technology Review, OpenAI’s existing models have remarkably broad capabilities. With the public launches of its generative-image program Dall-E 2 last September and ChatGPT in November, OpenAI awakened the world to the realization that its technology can deliver extraordinary economic value today.

Murati, who was promoted to CTO in May 2022, has been at the helm of OpenAI’s strategy to test its tools in public. Although researchers have been making remarkable strides in AI for a decade, particularly when it comes to understanding text (natural language processing) and images (computer vision), most of the action remained locked away in the skunk works of tech giants like Google, which spent years more focused on having AI researchers produce academic papers than on shipping groundbreaking commercial products.

OpenAI is taking a different approach. It’s attracted top academic talent, but it’s putting their work into slick products and mass-market deployments. Imagine a room of PhDs with the energy of an enterprise sales force, and you’ll have some sense of why OpenAI has been the first to unlock broad public interest in AI. Beyond the high-profile ChatGPT, millions of people have tried out Dall-E, which conjures images based on user prompts (e.g., “a medieval painting of people playing pickleball”). Products like Whisper, an audio transcription tool, and Codex, which turns natural language prompts into code, are already being used by companies. Many pay to incorporate OpenAI’s APIs into their software and have signed on to send it user feedback. That feedback is fodder for improving the foundational models (i.e., the “brains”) at the core of the organization’s research.

It’s hard to overstate the transformative potential of those models. The innovation tied to past technology platforms, like mobile, has “kind of capped out,” says John Borthwick, founder and CEO of Betaworks, an early-stage venture firm that, since 2016, has been operating a series of AI-focused accelerator programs. “What we’re seeing is the next big wave.”

Although the technology industry is prone to seeing waves that often turn out to be ripples—Web3, anyone? Anyone?—AI is different. OpenAI already has hundreds of enterprise customers using its technology, from Jasper, the 2-year-old AI-content platform that emerged out of nowhere to make $90 million a year selling copywriting assistance, to Florida’s Salvador Dalí Museum, where visitors can visualize their dreams in an interactive exhibit powered by Dall-E. Then there’s Microsoft, of course. After first incorporating OpenAI technology into products such as GitHub Copilot, Designer, and Teams Premium, it’s now infusing Bing with ChatGPT-like capabilities—CEO Satya Nadella’s bid to give Microsoft relevance and revenue in search, where Google controls 84% of the market.

Nearly every aspect of economic activity could be affected by OpenAI’s tools. According to the McKinsey Global Institute, relatively narrow applications of AI, such as customer service automation, will be more valuable to the global economy this decade than the steam engine was in the late 1800s. AGI will be worth many, many trillions of dollars more, or so say its most ardent believers.

It might take a while to get there. After making $30 million last year, according to reporting from Reuters, OpenAI expects 2023 revenue to hit $200 million. (In January, OpenAI introduced a paid tier for ChatGPT, for $20 per month; it is also looking to grow its revenue with a ChatGPT-specific API.) OpenAI and its backers—including Elon Musk, Peter Thiel, and Reid Hoffman—are so confident in a mammoth outcome that they have structured a profit ceiling for themselves, as a gesture toward their mission to benefit humanity. (Microsoft, OpenAI’s largest backer, could be entitled to make as much as $92 billion, Fortune reports. Microsoft’s most recent check values the startup at $29 billion.)

Unsurprisingly, OpenAI has its detractors. ChatGPT is “nothing revolutionary,” sniffed Meta chief AI scientist Yann LeCun at a conference in January. Open-source evangelists decry OpenAI as a black box. Other critics complain that it is cavalier in its public testing, using its research-lab aura to escape stricter scrutiny. Still others say that it has been exploitative in its pursuit of safety, by relying on subcontractors in Kenya to label toxic content.

Plus, competitors are starting to challenge OpenAI. Last August, Stability AI launched its AI image generator, Stable Diffusion, making it immediately available to consumers. In the wake of ChatGPT, Google fast-tracked the release of a chatbot, dubbed Bard, and China’s Baidu announced plans to unveil its chatbot, Ernie. Google’s flubbed showcase of Bard cost it a stunning $100 billion in market capitalization in a single day; Baidu’s announcement, in February, sent its stock price soaring by 15%. That’s how game-changing OpenAI’s models can be, provided the people behind them can teach them to distinguish fact from fantasy.

Murati, who was born in Albania and left at age 16 to attend Pearson United World College in Victoria, British Columbia, first caught AI fever while she was leading development of the Model X at Tesla, which she joined in 2013. At the time, Tesla was releasing early versions of Autopilot, its AI-enabled driver-assistance software with autonomous aspirations (arguably, delusions), and developing AI-enabled robots for its factories. Murati began considering other real-world applications for AI.

In 2016, she became VP of product and engineering at Leap Motion, which was working on an augmented reality system to replace keyboards and mice with hand gestures. Murati wanted to make the experience of interacting with a computer “as intuitive as playing with a ball.” But she soon realized that the technology, which relied on a VR headset, was too early. “Even a very small gap in accuracy can make you feel nauseous,” she notes.

As she considered what to do next, she concluded that a “massive advancement in technology” would have to play a role in solving the world’s biggest challenges. At OpenAI, which she joined in 2018, she found kindred spirits who shared her growing conviction that AGI would be that technology.

Murati also found an organization in flux. The world’s best AI labs were harnessing internet-scale quantities of data and custom-built supercomputers to train their models, and their brute-force approach was yielding results. OpenAI’s small but growing team realized that it, too, would require increasing (and increasingly expensive) computing power; commercialization became both an economic necessity and a pathway for its technology to learn about the world. In 2019, the nonprofit restructured itself as a for-profit startup with a nonprofit parent, installing former Y Combinator president Sam Altman as CEO. He quickly raised $1 billion from Microsoft.

As OpenAI embarked on a new course, with Murati as VP of applied AI and partnerships, it confronted the central problem of large language models, the neural-network-based algorithms designed to process and generate text: They are akin to a sort of “hermetically sealed genius,” says Patrick Hebron, VP of R&D at rival Stability AI. They require probing in order to unmask their talents; Mozart wasn’t Mozart, you might say, until someone handed him an instrument. For a system like GPT, OpenAI’s foundational language model, which has many possible talents, that exposure can happen far faster with the help of outside partners.

Other AI labs developing large language models were producing fancy demos and one-trick toys, such as Google Duplex, a voice-robot version of Google Assistant capable of booking appointments over the phone. OpenAI, however, began assembling the infrastructure and the relationships to finesse its models to better align with human intent. Murati, who was later promoted to oversee research as well, found herself at the heart of the effort.

To unlock ChatGPT, for example, OpenAI needed GPT to understand human conversational values. So much of language is subjective: What is a “cool” song? Or a “fancy” restaurant? Without human feedback, language models struggle to understand such concepts, let alone banter about them. OpenAI started to assemble the pieces required to teach GPT these values. It paid contractors to evaluate GPT outputs and write better ones, alongside employees doing the same. It found partners willing to share data from their own experiments. It built out a safety operation, trying to anticipate the ways that users might break the system. Then it fed the data from all those efforts back into a layer of algorithms that could sit on top of OpenAI’s language models.

The final step, slapping a search-bar interface on the system and calling it ChatGPT, was the easy part. The result is a chatbot that’s “more likely to do the thing that you want it to do,” Murati says.

ChatGPT is just the start. OpenAI has a diverse portfolio of applications. That breadth is by design. “One of the reasons that we wanted to pursue Dall-E was to get to a more robust understanding of the world, to have these models understand the world the way that we do,” Murati says. She sees AI models that have been trained through multimodal learning—that is, learning that encompasses text, images, audio, video, and even robotics—as the pathway to longer-term breakthroughs.

The trick is tying those future plans to products in the here and now.

Some 2,260 miles from OpenAI’s headquarters, entrepreneur Andrew Wyatt glances around his Pittsburgh coworking space. The cofounder and CEO of Cala, a design and manufacturing platform for fashion brands, Wyatt incorporated OpenAI’s Dall-E functionality into his product several months ago. With the help of Dall-E’s API, designers using Cala can now generate a sketch or a photorealistic image based on a text prompt. They can also use Dall-E to generate variations based on a starter image—in other words, to riff on an idea.

“Maybe I’m inspired by this pattern on the carpet here,” Wyatt says, taking a quick photo of it with Cala’s mobile app. He feeds the image into Dall-E; even though the original was badly lit and included part of a chair, the tool offers an array of similar patterns in seconds. “It’ll work a lot better,” he says, “if you’re taking high-res product photography, which every brand has.”

Wyatt pulls a higher-quality image of a button-down blouse into Cala’s design tool. The blouse’s fabric has a pattern: blocky, twisting chains in a deep green. “It’s almost like Inception, where you just keep peeling the onion,” he says, as he generates one array of variations, then another. “You can hit the button as many times as you want,” he adds. Each time he does, the chains morph into new patterns. “You can see that we’re now departing from the original vibe and getting something unique.”

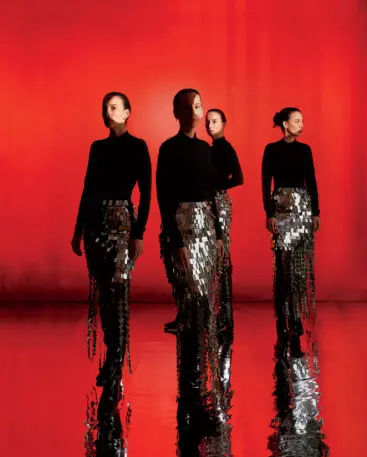

Designers from top brands are quietly experimenting with Cala’s Dall-E tool, sometimes pushing the boundaries with their wildest inputs. Wyatt reports that new users have been signing up for Cala at six times the prior rate. And rather than usurping designers, Cala’s tool requires them to come up with compelling prompts to get the best results, which is a skill like any other. When Cala conducted an internal competition to design a bag using Dall-E, the most experienced fashion designer on the team won handily; the rendering for her sleek chrome duffle could have been mistaken for a human-designed product.

At the moment, in fashion, generative AI is most obviously useful during the inspiration phase. But Wyatt is already connecting the dots to later stages of design and manufacturing. Cala could help even small brands nimbly produce garments on demand—a more sustainable inversion of the mass-produced fast-fashion archetype. In Wyatt’s vision, brands would be able to get their ideas into customers’ hands more quickly by eliminating physical samples from the design process.

“Where we see this potentially fully changing the game is [when brands can go] straight from text prompt to 3D render,” he says. “And then the holy grail is going from 3D render to 2D pattern that can be cut out of fabric and is ready to go into production.”

Last spring, Natalie Summers, who joined OpenAI from Apple in 2021 to help with community building, hopped onto Instagram to DM a handful of artists. “Hey, want to try out a cool new thing and tell us what you think?” she asked. The “thing” was Dall-E, which was still in research preview.

Summers, a fast-talking former reporter, arranged video calls with potters and set designers, landscape architects, and digital artists, first in groups of 10 or 20, and later, by the hundreds. The OpenAI research team wanted to understand what artists would find valuable in a generative art program—and what would draw their ire. So she offered them a chance to play around with Dall-E prompts while also issuing a warning: “Yes, this is going to change things,” she recalls saying. “You should help make [AI] tools that will be useful to you.”

The concept of aligning AI with human values can sound fraught: Whose values, exactly, form the benchmark? But figuring out what people value commercially puts a more practical (and profitable) frame around potentially paralyzing ethical questions. For the artists involved in Dall-E’s beta, at least, the most pressing question was how they could monetize their AI creations. They also wondered how generative technology would affect their jobs. (Another set of artists, whose work was included in the training data for similar products from Stability AI and Midjourney, is more focused on protecting their existing income: They are suing for copyright infringement.) “You put the technology in contact with reality; you see how people use it, what the limitations are; you learn from that; and you can feed it back into the technology development,” Murati says. “The other dimension is that you can actually see how much [the technology is] moving the needle in solving real-world problems or whether it’s a novelty.”

OpenAI’s role requires striking a careful balance: It must make incremental improvements for customers while laying the groundwork for future leaps in knowledge. With its most prized customers, OpenAI is a generous (if self-interested) implementation partner, as well as a data sponge. It teams up with them to preemptively break or abuse their applications in anticipation of how users might do the same, a practice called “red teaming.” (This process has helped it establish guardrails to deter spammers.) But pursuing safety is complicated: Giving AI the ability to describe a violent crime may not be appropriate for an elementary school lesson plan, but in a legal filing, it is essential. To retain GPT’s flexibility, OpenAI has so far preferred to apply sometimes heavy-handed filters that control what users see, rather than tinker with the system’s underlying brains.

“Anything you bake in, it’s baked in for all users,” Sandhini Agarwal, an OpenAI policy researcher, says of changes to the foundational model. “You really have to think and decide what should go in at that level.”

Customer data, in turn, gives OpenAI an edge in training its models. (Enterprise customers can opt out of data sharing, but risk diminished performance in their applications.) Microsoft, for its part, shares user insights with OpenAI—the takeaway from an A/B test, for example—but not necessarily the data. Microsoft may have invested billions in OpenAI and offered up its cloud-computing platform to the upstart at a steep discount, but it’s not giving up the store. Over time, other partners may arrive at a similar conclusion and look for ways to assert control, especially if OpenAI keeps releasing consumer-facing products alongside its APIs.

“I think less hype would be good,” says Murati, with an understated smile, when I ask her about GPT-4, OpenAI’s next large language model. As much as Dall-E and ChatGPT have captivated public imagination, GPT-4 could be even more consequential. In the lead-up to GPT-4’s launch, memes began circulating on Twitter hypothesizing that the model would contain 100 trillion parameters, the adjustable numerical weights a model tunes during training. That would be roughly 570 times the 175 billion parameters in GPT-3. Perhaps not coincidentally, the human brain contains roughly 100 trillion synapses.

Since the launch of ChatGPT, much of the hype cycle and hand-wringing related to OpenAI has come from the education sector. ChatGPT had a near-overnight effect on education, starting with teens posting their chatbot homework hacks to TikTok and culminating in the chatbot earning a passing grade in the final exams for University of Minnesota law school courses. With GPT-4, Murati sees a chance to “broaden opportunities for people.” From her perspective, it’s a quick hop from angry teachers failing students for cheating with ChatGPT to happy teachers writing lesson plans with an assist from the upgraded bot and students learning in brand-new ways.

“With ChatGPT, you can have this infinite interaction and have it teach you about complex topics in a way that’s based on your context,” Murati says. “It’s kind of like a personal tutor.” Education, to her, is a two-way street: In parallel, she wants OpenAI’s systems to be learning from humans. “You could make technological progress in a vacuum without real-world contacts,” she says. “But then the question is, Are you actually moving in the right direction?”

It’s unclear, from a commercial perspective, how important a foundational model the size of GPT-4 will be to the AI field. “Most companies use rather small models, like 1 to 10 billion parameters, because they specialize them for their use cases, and [the smaller size] makes them cheaper and faster to run,” says Clement Delangue, cofounder and CEO of Hugging Face, a GitHub-esque platform for the AI community.

Microsoft Bing’s integration with ChatGPT, on the other hand, makes a case for going big, with scale-dependent features like recency and annotation that could go a long way toward curtailing hallucination. An AI product that has up-to-date knowledge and can show the logic behind its outputs is both more reliable and easier to correct. Whether these foundational models will be as lucrative for OpenAI as search advertising has been for Google and the App Store has been for Apple remains to be seen.

However the industry takes shape, Murati argues that customers will value versatility. Besides, AGI isn’t going to emerge from thousands of bespoke mini-models. “Even if it takes a really long time to get to AGI,” she says, “the technology that we will build along the way will be incredibly useful to humans in solving very hard problems.”

She’s sitting in her cozily lit office, tortoiseshell glasses on, describing her team’s work as they press toward new discoveries. It’s easy to imagine a Dall-E prompt that would illustrate the point: Ragtag team of explorers on a windswept summit, new dawn rising, laptops in backpacks, Hudson River School, oil paint.

Editor’s Note: A previous version of this story incorrectly stated that OpenAI has access to presentations created by users of AI-powered storytelling company Tome.
