As a painter would use pigment, Refik Anadol uses data to create his art. After looking at his body of work, which has pioneered the use of artificial intelligence for years, you might also say that neural networks are his brushes. His latest exhibit at MoMA, Unsupervised, is the pinnacle of this philosophy and then some.

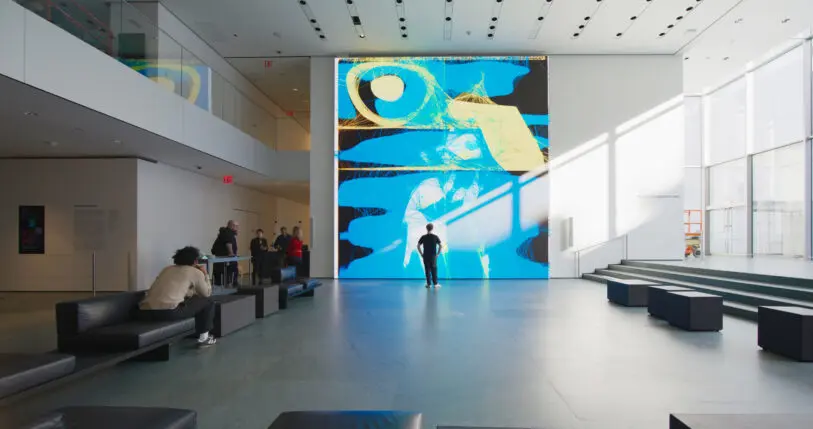

The colossal installation—a stunning 24- by 24-foot digital display that fills the entire MoMA lobby—renders an infinite animated flow of images, each of them dreamed up as you watch by an AI model fed by the museum’s entire collection of artwork. This flow is controlled by what happens around it, making the piece feel like it’s alive.

It’s hard to describe the awe-inspiring effect of Unsupervised unless you see it in action. Anadol gave me a video tour using his phone as he was putting the finishing touches on the work in preparation for today’s opening. Even through the phone’s low-resolution camera, I was completely blown away by what I saw. It wasn’t only Unsupervised’s titanic size but its content that was so compelling: the stream of fantastic images materializing and morphing into each other felt like watching an interdimensional being, breathing and constantly evolving on the other side of a portal.

For Anadol, that is what Unsupervised feels like: a portal to a new alternate universe created by some AI god that acts of its own volition (hence the name of the exhibit). To understand what Unsupervised means, you have to understand the two main methods with which current AIs learn to do their magic: Supervised AIs, like OpenAI’s DALL-E, are trained using data tagged with keywords. In broad strokes, these keywords (like “bald men with glasses” or “fluffy white dogs”) allow the AI to organize clusters of similar images. Then when you enter a prompt for an AI image generator (like “fluffy dog with glasses”), the AI creates noise and refines it until it reaches a sharp image, based on what it learned from all those fluffy dog clusters.

But Anadol’s unsupervised AI works differently. It had to make sense of the entire MoMA art collection on its own, without labels. Over the course of six months, the software created by Anadol and his team, with the assistance of Nvidia engineers, fed on 380,000 extremely high-resolution images taken from more than 180,000 artworks stored in MoMA’s galleries, including pieces by Pablo Picasso, Umberto Boccioni, and Gertrudes Altschul. The team created and tested different AI models to see which one produced the best results, then picked one and trained it for three weeks using an Nvidia DGX Station A100 (which is essentially an AI supercomputer in a desktop box that’s about 1,000 times more powerful than a typical laptop).
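To make "without labels" concrete, here is a minimal sketch of unsupervised grouping. It is not the model behind Unsupervised (which builds on StyleGAN); it is a toy one-dimensional k-means, and the "brightness" values and starting centers are invented purely for illustration. The point is only that the groups emerge from the numbers themselves, with no keywords attached.

```python
def kmeans_1d(values, centers, iterations=10):
    """Group unlabeled numbers around the nearest of the given starting centers."""
    centers = list(centers)
    groups = [[] for _ in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for v in values:
            # Assign each value to the index of its closest center.
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Hypothetical "average brightness" of six images; no tags, no keywords.
brightness = [0.10, 0.15, 0.20, 0.80, 0.85, 0.90]
centers, groups = kmeans_1d(brightness, centers=[0.0, 1.0])
print(groups)  # the dark images and the bright images end up in separate groups
```

A supervised system would have been told which images were "dark" and which were "bright." Here nobody says so; the split falls out of the data.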

“The [MoMA] curators were loving this idea of unsupervised learning,” Anadol tells me, so the plan was to put the entire collection into the training set and let the AI make up its own mind, or as Anadol says, let it “look at it and create a new universe.”

The results were stunning to everyone involved. “That part is so inspiring for curators,” says Anadol. “Because, when they see this 200-year artwork archive, when they see that the AI can create new worlds [from it] . . . you can see the [ensuing] amazement in their faces.”

Even though he is the Wizard of Oz behind the curtain, Anadol finds the results awesome. “It’s like its own entity,” he says. “We don’t know what kind of forms it can create.”

It’s alive!

Crafting the neural network and building the training model to create Unsupervised is only half of the story. The other half is how it breathes and creates its images in real time, a technological achievement in its own right.

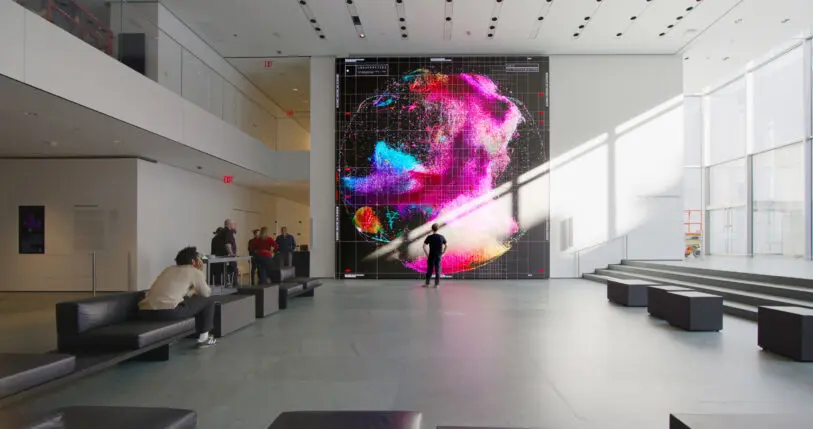

To start with, the resolution is stunning by today’s standards. At 3840 by 3960 pixels, it’s likely the highest resolution image synthesis neural network ever created. To compare, a typical Stable Diffusion image resolution is 512 by 512 pixels. Nvidia’s StyleGAN, the AI that forms the basis of Anadol and his team’s work, typically runs at 1024 by 1024.
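To put those figures side by side, a quick back-of-the-envelope calculation helps. The sketch below uses only the resolutions quoted above; the function names are mine, and pixel count is just one rough measure of how much more work each frame demands.

```python
# Resolutions quoted in the article, as (width, height) in pixels.
RESOLUTIONS = {
    "Unsupervised": (3840, 3960),
    "StyleGAN (typical)": (1024, 1024),
    "Stable Diffusion (typical)": (512, 512),
}


def pixel_count(width, height):
    """Total pixels in one frame."""
    return width * height


def ratio_to(name, baseline):
    """How many times more pixels `name` has than `baseline`."""
    return pixel_count(*RESOLUTIONS[name]) / pixel_count(*RESOLUTIONS[baseline])


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(w, h):,} pixels per frame")

print(f"vs. StyleGAN: {ratio_to('Unsupervised', 'StyleGAN (typical)'):.1f}x")
print(f"vs. Stable Diffusion: {ratio_to('Unsupervised', 'Stable Diffusion (typical)'):.1f}x")
```

By this measure, each Unsupervised frame holds roughly 14.5 times as many pixels as a typical StyleGAN image and about 58 times as many as a typical Stable Diffusion image.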

But the truly shocking thing is that Unsupervised produces these images in real time, flowing in constant motion. When you introduce a prompt into Stable Diffusion, it takes seconds or even minutes to output an image. But this AI does it on the fly with the fluidity you expect from an Industrial Light & Magic special effect.

What Anadol and his team have achieved defies belief, as David Luebke—vice president of graphics research at Nvidia and one of the people behind StyleGAN—tells me over video conference: “I feel like Refik is very humble. He is very quick to express gratitude [toward Nvidia’s StyleGAN work and their assistance] but I don’t want to understate the technological savvy of Refik and the team he put together. They do amazing work.”

Another layer of complexity in Unsupervised is how the AI decides to change the images in real time. To generate each image, the computer constantly weighs two inputs from its environment. First, it references the motion of the visitors, captured by a camera set in the lobby’s ceiling. Then, it plugs into Manhattan’s weather data, obtained from a weather station in a nearby building. Like a joystick in a video game, these inputs exert forces on different software levers, which in turn affect how Unsupervised creates the images.
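How might a "joystick" like that work in software? The following is a rough sketch under loud assumptions, not a description of Anadol's actual system. It assumes the generator is steered by a latent vector (a list of numbers that determines the output image, as in StyleGAN-style models) and that each sensor reading nudges that vector along its own direction. Every name, range, and reading here is hypothetical.

```python
import random

LATENT_DIM = 8  # tiny for illustration; real generators use hundreds of dimensions


def normalize(value, low, high):
    """Clamp a raw sensor reading to [low, high] and scale it to 0..1."""
    clamped = max(low, min(high, value))
    return (clamped - low) / (high - low)


def step_latent(latent, motion, wind, directions, step_size=0.1):
    """Nudge the latent vector along two fixed directions, one per input."""
    motion_dir, wind_dir = directions
    return [
        z + step_size * (motion * m + wind * w)
        for z, m, w in zip(latent, motion_dir, wind_dir)
    ]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
latent = [rng.gauss(0, 1) for _ in range(LATENT_DIM)]
directions = (
    [rng.gauss(0, 1) for _ in range(LATENT_DIM)],  # hypothetical "visitor motion" lever
    [rng.gauss(0, 1) for _ in range(LATENT_DIM)],  # hypothetical "weather" lever
)

motion = normalize(12.0, 0.0, 50.0)  # made-up amount of movement seen by the camera
wind = normalize(18.0, 0.0, 60.0)    # made-up wind speed from the weather station
new_latent = step_latent(latent, motion, wind, directions)
```

In a real pipeline, the updated vector would be handed to the image generator on every frame, so a busier lobby or a gustier afternoon would push the imagery somewhere slightly different each time.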

This constant self-tuning makes the exhibit even more like a real being, a wonderful monster that reacts to its environment by constantly shapeshifting into new art. For the next five months, this being will keep creating a new universe that will be constantly recorded both as video and as snapshots of its state of mind, as the machine’s decisions are saved in parallel to the film.

And, who knows, maybe this artificial art beast will live forever. Anadol says that Unsupervised may become a permanent part of the MoMA collection, which seems to me like something the institution must do. After all, it’s a synthesis of all the art that lives in the museum, the embodiment of MoMA itself with a life of its own. As Luebke points out, Unsupervised uses data as pigment to create new art, but the data feeding it was always art unto itself. In other words, the art that lives in the museum feeds this artificial artist living beside it, adding something new to the museum’s collection every moment. The process is such a mind-bending loop, it may actually open a portal to a new universe.
