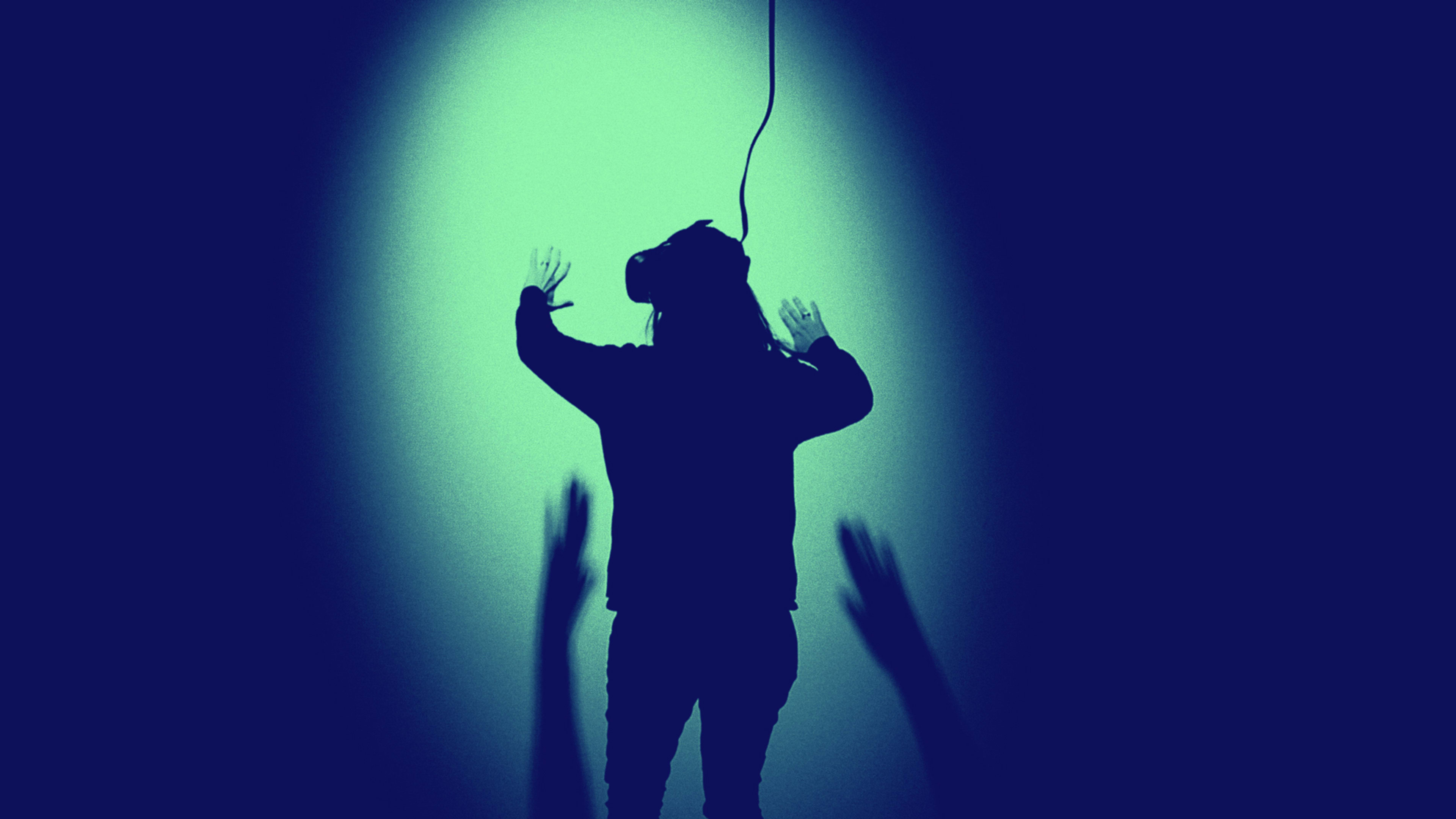

It’s just over seven months since Mark Zuckerberg declared Facebook’s parent company would be renamed Meta. For many people, the whole concept of the metaverse is still a bit hazy. But as companies and early adopters take part in virtual land grabs, and virtual reality (VR) and augmented reality (AR) companies rush to grow their customer base, the virtual world already appears to be adopting many of the worst behaviors of the real one.

A report from corporate accountability group SumOfUs says Meta’s VR platforms (Horizon Worlds and Horizon Venues) are rife with many of the same problems facing the more traditional social media outlets. Among them: misogynistic, homophobic, and racist comments; a reporting system that falls short of needs; inaction against offenders; and an open door for children to use the platform and encounter harms. On top of that, it says there’s a metaworld-specific issue that’s perhaps even more disturbing: virtual groping and rape.

“With just 300,000 users, it is remarkable how quickly Horizon Worlds has become a breeding ground for harmful content,” the report reads. “Without urgent action, this will only get worse. Until regulators hold Meta to account for the harms found on its platforms, weaken its grip over technology industries, and rein in its ruthless data harvesting practices, the metaverse is very likely to spiral into a darker, more toxic environment.”

Meta did not immediately respond to a request for comment.

Meta, of course, is just one player in the metaverse space. Epic Games, Roblox, and others have been building their own virtual worlds, which (for now) are notably bigger. But Meta has cash, and lots of it. Last year, it announced plans to spend $10 billion on what it called Facebook Reality Labs. It’s also the biggest maker of VR headsets, on which it can preload its own software.

As it does on the Facebook site, Meta plans to harvest user data as its chief revenue source in the metaverse, but the SumOfUs report notes that the amount of data it will receive is far greater than many people realize.

“In a virtual world, where users wear VR headsets that [could potentially] track bodily information like eye movements, facial expressions, and body temperature, we can expect a more dystopian version of surveillance capitalism,” it says.

Both Horizon Worlds and Horizon Venues have minimal moderation, the report says, which allows toxic behavior to occur. One beta tester who filed a complaint after being groped virtually by a stranger, the report says, was blamed by Meta for inadequately using the personal safety features. Another user says their female avatar was “virtually gang raped” within 60 seconds by a group of three to four male-appearing avatars.

Because interacting in the metaverse is done via cartoonish avatar torsos, some might shrug off the virtual assault allegations on the grounds that a virtual assault is not considered the same as a real-world one. However, when another hand touches your avatar in Horizon Worlds, the VR controller in your hand vibrates, which one SumOfUs researcher who was assaulted virtually described as “a very disorienting and even disturbing physical experience.”

(Such incidents are not limited to Meta’s platforms, the report notes.)

SumOfUs was not the first to report this sort of unwanted interaction. In February, Meta introduced the “personal boundary” feature, which is designed to keep others from violating your avatar’s personal space, creating a roughly 4-foot perimeter.

“Personal Boundary creates more personal space for people and makes it easier to avoid unwanted interactions,” Meta said when introducing the feature. “We’ll continue to iterate and make improvements as we learn more about how Personal Boundary impacts people’s experiences in VR.”

Conspiracy theories, hate speech, and virtual gun violence were also observed as part of the study. And the report warns that Meta seems as ill-prepared to deal with these metaverse problems as it was with the disinformation that impacted the 2016 U.S. presidential election and further divided the country during the pandemic.

“Instead of learning from its previous mistakes, Meta is pushing ahead with the Metaverse with no clear plan for how it will curb harmful content and behavior, disinformation, and hate speech,” it reads. “Andrew Bosworth, Meta’s chief tech officer, admitted in an internal memo that moderation in the metaverse ‘at any meaningful scale is practically impossible.’”

The problem, of course, is not exclusive to Meta. The Center for Countering Digital Hate found last December that users, including minors, are exposed to abusive behavior every seven minutes in VRChat, ranging from graphic sexual content to extremist talking points and racist slurs.

While the SumOfUs report focuses mainly on Meta and its poor history of monitoring and responding to harmful content, it’s also a warning for other metaverse companies. The toxicity, misinformation, and divisiveness that are omnipresent on today’s social media platforms are already gaining a foothold in the virtual world. And if they’re not dealt with soon, the future of the metaverse could be less Ready Player One and more Black Mirror.
