It’s a beautifully sunny day in San Francisco, and I’m roaming the roof deck above an apartment in the city’s North Beach neighborhood. There’s a lot going on up here: In quick succession, I explore a model of the solar system, attempt to outrun a zombie, paint in 3D space, and learn about various types of flowers as I arrange them in a vase.

From the celestial to the down-to-earth, all of these experiences are digital. They’re moments of augmented reality that have been enabled by the newest version of Snap’s Spectacles high-tech sunglasses, which I’m wearing instead of my own glasses. The Spectacles are layering 3D imagery onto my rooftop environs, pumping audio into my ears, and allowing me to interact with what I see through a temple-mounted touchpad or by gesturing with my hands.

That’s a way more ambitious set of capabilities than was offered by earlier versions of Spectacles, including the 2016 originals and their successors from 2018 and 2019. Those models were mostly about letting Snapchat fans shoot still photos and mini-videos without bothering to retrieve and aim a smartphone. (The 2019 Spectacles remain available, and compete with a similar pair of Ray-Bans powered by technology from Meta.)

Last May, Snap unveiled the new, AR-enabled version of Spectacles, which are indeed known simply as “the new Spectacles.” Rather than selling them as it did previous versions, Snap acknowledges that they’re currently an experiment, not a product. The company has doled them out for free to around 200 creators in 30 countries, choosing lucky recipients from applicants who fill out an online form.

“The main focus of that program is to make sure that anybody who receives this device is actively engaging with our product team,” says Sophia Dominguez, Snap’s head of AR platform partnerships. “Building, pushing the limits, and pushing the platform as much as possible.”

Given their limited release, the new Spectacles are a willfully small-scale foray into AR. But Snap is also experimenting with the medium on one of the biggest tapestries imaginable: Snapchat itself.

“We’re very, very proud of that number,” says Dominguez. But then she points out that there’s enormous room for further growth over the next few years: According to a Deloitte study commissioned by Snap, almost all smartphone users will be frequent AR users by 2025. Snapchat, which opens up to a view of whatever your smartphone’s camera is seeing each time you launch the app, is well-positioned to capture some of that additional attention.

In other words, beyond all those exuberantly silly Snapchat filters, something serious is going on.

Work in progress

For now, the new Spectacles are an intriguing milestone in the quest to create AR headwear that could be a category-defining hit. In order to incorporate the necessary electronics and battery, they’re chunky and angular, with a bit of a brutalist look. Their bulbous charging case isn’t something you’re going to slip into a pants pocket. Even so, these Spectacles look more like hip sunglasses than a headset, and weigh less than a third of a pound. They’re much closer to being something you might cheerfully wear while out and about than a Magic Leap or Microsoft HoloLens. (They even fit comfortably on my extremely wide head.)

None of the AR experiences I tried while wearing the Spectacles were the sort of killer app that would sell a fully commercialized version of these glasses on its own. But they were all reasonably slick and fun to try. And one of them—a restaurant menu I could peruse with a mid-air swiping gesture—hinted at purely practical applications for the technology well outside Snap’s traditional wheelhouse in entertainment.

It isn’t tough to understand why Snap is giving these Spectacles to a few hundred developers rather than asking consumers to pay for them. The company isn’t claiming that it’s on the cusp of shipping the definitive AR hardware: Dominguez stresses that Snap CEO Evan Spiegel “has said this could be a 10-year journey.” (That tracks with Meta’s 10-to-15-year estimate for its own AR/metaverse vision.)

Matthew Hallberg’s SketchFlow lens for Spectacles lets you paint with virtual 3D brushes. [Animation: courtesy of Snap]

Still, Dominguez says that the progress reflected in the new Spectacles is meaningful. The ultimate goal is to create AR glasses that people will happily wear wherever they go. “Developers are still people,” she says. “And developers have to wear them outside… If we can get them to wear them outside, and building things and excited about things, then we’re on the right path forward.”

Making Snapchat’s camera smarter

While Snap continues to experiment with Spectacles, it’s also expanding the AR capabilities available in Snapchat. That effort doesn’t require the company to engineer any hardware breakthroughs on its own. But for Snapchat to offer more meaty, immersive AR than now-classic effects such as the ability to puke rainbows, there’s plenty of work to do on the software side.

“My team is basically trying to make the Snapchat camera smarter, to understand everything in the world around it,” says Qi Pan, Snap’s director of computer vision engineering. Snap’s approach to AR, he explains, is to “iterate, learn, make the next generation a little bit better.” That work has been going on for years. Back in 2018, for example, London’s iconic Big Ben clock tower was obscured by scaffolding as part of a massive renovation program that’s only now wrapping up. Pan’s team built an AR filter that virtually peeled away the scaffolding, revealing the tower in all its stately glory. (This being Snapchat, the filter also put Big Ben inside a giant simulated snow dome.)

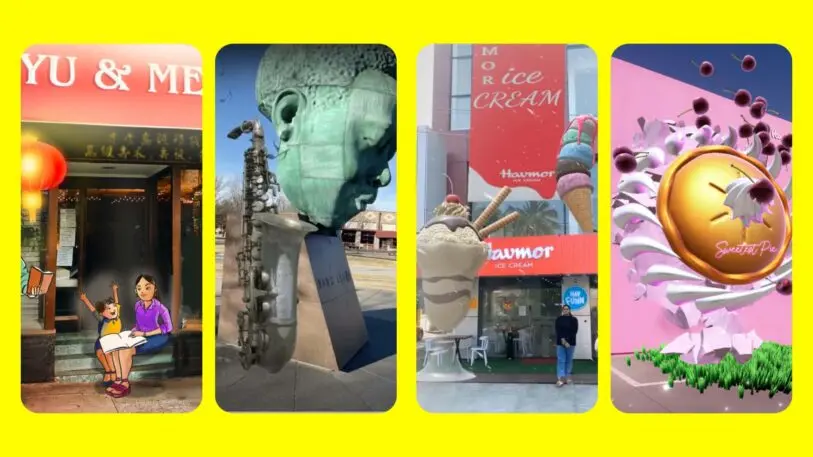

Custom landmarkers cater to Snap’s booming developer community, which the company has been courting through investments such as fellowships for AR creators. (AR will also be in the spotlight at its Partner Summit next week.) Over 250,000 developers have published a total of 2.5 million lenses, which have been viewed over 3.5 trillion times in aggregate; even if most of those stats relate to Snapchat lenses of the traditional, chat-oriented sort, it’s a big base on which to build.

In the past, Novaby had done much of its AR work in Unity, the powerful 3D platform that began as a game engine and has more recently broadened its horizons to encompass AR and VR. Snap’s Lens Studio “is really surpassing a lot of what we could even do in Unity,” says Novaby CEO Julia Beabout. “But more importantly, it’s enabling us not to have to create an app from scratch to do what we’re doing.” That’s why she recommends it to clients as a way to reach a large audience in a speedy, cost-effective manner.

All of these place-based AR extravaganzas live in Snapchat rather than Spectacles. But “a lot of what we’re building is for Spectacles’ future,” says Pan. With a smartphone app such as Snapchat, “there’s still quite a lot of friction—you may walk past the most amazing AR activation anyone has ever built, but your phone’s in your pocket and you’ll never know about it.”

Even if the day when your AR glasses alert you to that amazing activation is years off, Snap wants to be ready—and both the hardware and software it’s building now are about getting there.
