Growing up in suburban Potomac, Maryland, in the 1980s, Tim Sweeney, the founder and CEO of Epic Games (one of the most successful gaming companies in history), wasn’t much of a gamer. His interest lay in how the games themselves worked. The software. The internal logic humming along in the background. The stuff that made everything work.

Sweeney spent much of his time back then teaching himself to program on an Apple II, eventually using that skill to first create his own games and later his own gaming engine.

In the mid-90s, Sweeney began building the code that would power Epic’s breakout 1998 hit, a first-person shooter called Unreal. After seeing teaser screenshots of Unreal, other developers began asking to use the engine, and Sweeney decided to oblige. Epic, which began developing Unreal Engine in 1995, first licensed it in 1996 and kept building new games on the evolving toolset, including Gears of War, Infinity Blade, and Fortnite.

“This new generation [of Unreal Engine] brings the ability to have objects that are as detailed as your eye can see, not just with the shading on them, but on the geometry itself,” Sweeney tells me, as we sit at a patio table at his company’s Cary, North Carolina, headquarters. “And it brings in the lighting from the real world, which is freeing also. The more the technology can just simulate reality, the more all of your intuitions and experience in reality helps to guide it.”

The new fifth-generation engine, buoyed by refreshed software tools and a marketing tie-in with the team behind The Matrix franchise, will certainly enable more lifelike games in the future, but it could go beyond that. At a time when lots of people in the tech world are talking about immersive “spatial” computing within something called the metaverse, Unreal Engine’s ability to simulate reality has some interesting implications. The new features seem aimed at letting digital creators in many different industries build their own immersive virtual experiences.

“Convergence is happening because you’re able to use the same sort of high-fidelity graphics on a movie set and in a video game,” Sweeney says. “And in architectural visualization and automotive design, you can actually build all of these 3D objects–both a virtual twin to every object in the world, or every object in your company or in your movie.”

And all these experiences, if the original internet is any guide, aren’t likely to stay fragmented forever. At some point, Sweeney believes, the advantages of connecting them, for companies and consumers alike, will become too obvious to ignore. Then we’ll have something like a real metaverse.

A reality simulation engine

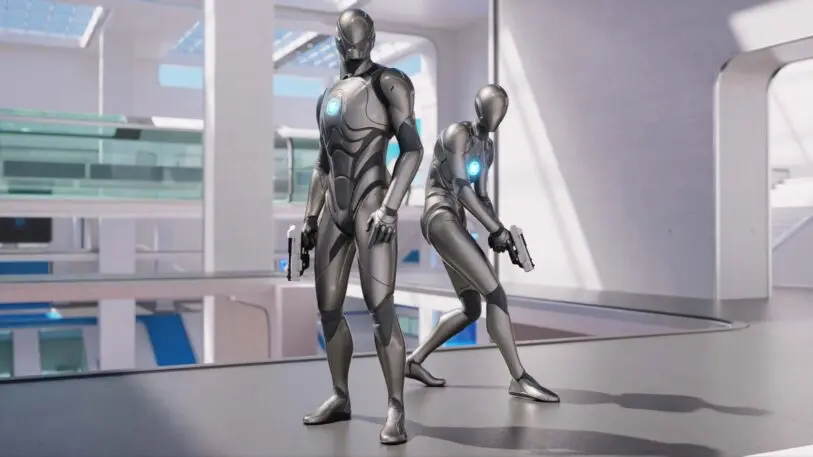

“We wondered . . . What would reality mean when a world we can build feels as real as our own,” actor Carrie-Anne Moss says in the first part of The Matrix Awakens, a tie-in with Warner Brothers that serves as Epic’s sizzle reel for Unreal Engine 5’s new features. It’s part marketing video, part action sequence, and part game. (PlayStation 5 and Xbox Series X/S users can control the movements of a new character named IO created for the project by Epic and Matrix Resurrections director Lana Wachowski.)

It’s no coincidence that Epic chose The Matrix to demo its new tools. Epic is pushing Unreal Engine toward the goal of creating digital experiences of such high quality that they’re indistinguishable from motion pictures. Epic’s ranks are peppered with people who once worked in the film industry. In fact, some of the people who created the computer-generated imagery (CGI) for The Matrix movies now work either on Epic’s games or on special projects.

Epic’s CTO Kim Libreri, for example, goes way back with The Matrix franchise and the Wachowskis who created it. Libreri worked in movies and was recruited to join the visual effects team for the first film, where he helped supervise its famous “bullet time” shots, he told me.

In the films, the Matrix appears to be a perfect simulation of the real world, except that occasionally people jump 50 feet straight up in the air. Watching The Matrix, you imagine some machine-controlled supercomputer somewhere constantly generating that simulation in real time. What if a software tool existed that was capable of simulating worlds as convincingly as the system that created the Matrix?

UE5 and ‘The Matrix’

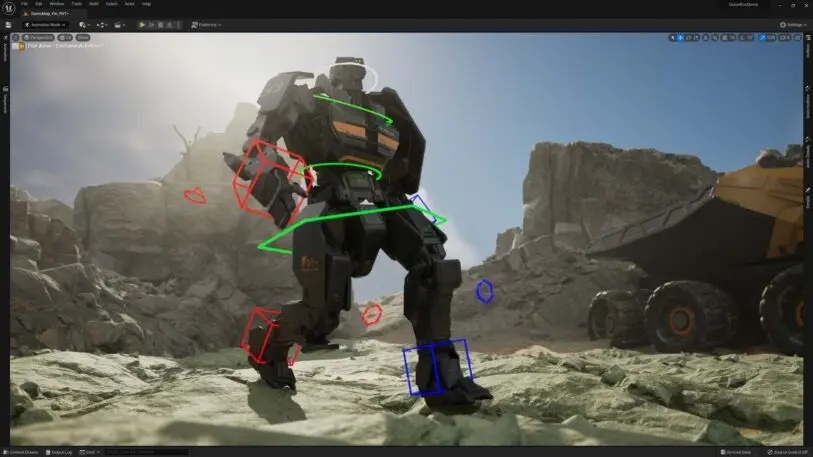

After talking to Sweeney, I went back to the Epic campus, this time to take a deeper look at the new features and tools added to Unreal Engine 5 and to understand how those developments show up onscreen. I sat down in a boardroom at Epic to watch The Matrix Awakens with the person who oversees the development of the engine, Nick Penwarden, Epic’s VP of engineering for Unreal Engine. Rendering detailed, photorealistic digital objects is a major theme in Unreal Engine 5, he told me as the demo started.

Penwarden skipped past the first part of the demo, in which Keanu Reeves and Carrie-Anne Moss talk about Matrixes old and new, to the second part, where the action starts. We watched a wild car chase-slash-shootout through the streets and on the freeways of a large fictional city that’s partly based on San Francisco. Familiar-looking San Francisco buildings and realistic-looking cars flew past the windows of the 1970s muscle car driven by Moss’s character, Trinity.

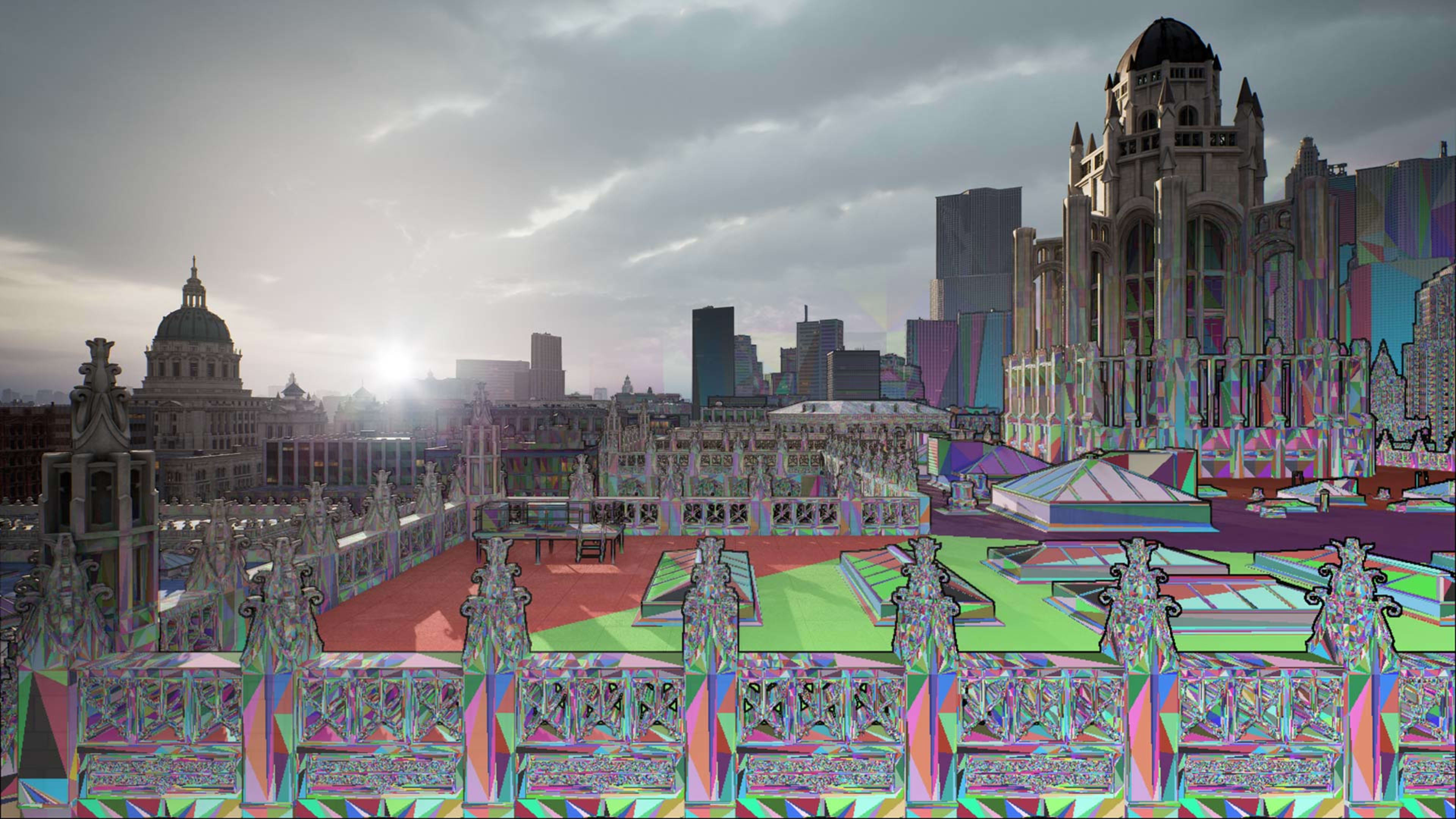

Architecture render before and after Nanite. [Images: courtesy of Epic Games]

The centerpiece of UE5 is a graphics rendering technology Epic calls “Nanite,” which intelligently adds more or less detail to objects depending on their importance to the scene and their proximity to the audience’s point of view. In the “game” portion of the demo, for example, we watched from behind IO’s shoulder and controlled her gunfire.

“It’s about being able to spend our [graphics chip] memory on things that are actually going to affect the things you see,” Penwarden told me as we took in a wide view of the city’s skyline from Trinity’s car as she sped down a freeway. I noticed that some of the buildings in the distance had a blurred quality. The engine was fudging the geometry of those far-off shapes and spending its power on rendering fine details at the focal point of the shot. “Especially with a city this large and with this much detail, it would be impossible to have all that data rendering.”
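The general idea Penwarden describes, spending rendering budget only where the viewer will notice it, is an old graphics technique known as level of detail (LOD). The sketch below is a hypothetical, generic illustration of that idea, not Nanite’s actual algorithm, which is considerably more sophisticated and operates on fine-grained clusters of triangles rather than whole meshes. It assumes each object has several pre-simplified versions (the `LodLevel` type, the `choose_lod` function, the 60-degree field of view, the 2160-pixel screen height, and the one-pixel error budget are all illustrative assumptions). For each object, it picks the cheapest version whose simplification error would cover no more than about one pixel on screen.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class LodLevel:
    """One pre-simplified version of a mesh (hypothetical structure)."""
    triangles: int          # triangle count of this version
    geometric_error: float  # max deviation from the full-detail mesh, in meters


def projected_error_px(error_m: float, distance_m: float,
                       fov_y_rad: float, screen_height_px: int) -> float:
    """Approximate how many pixels a world-space error spans at a given distance."""
    # Height of the visible slice of the view frustum at this distance, in meters.
    view_height_m = 2.0 * distance_m * math.tan(fov_y_rad / 2.0)
    return error_m * screen_height_px / view_height_m


def choose_lod(levels: list[LodLevel], distance_m: float,
               fov_y_rad: float = math.radians(60),
               screen_height_px: int = 2160,
               max_error_px: float = 1.0) -> LodLevel:
    """Return the cheapest level whose on-screen error stays within max_error_px.

    `levels` must be ordered from most detailed (smallest error) to least detailed.
    If even the most detailed level exceeds the budget, it is returned anyway.
    """
    best = levels[0]
    for level in levels:
        err = projected_error_px(level.geometric_error, distance_m,
                                 fov_y_rad, screen_height_px)
        if err <= max_error_px:
            best = level  # coarser, cheaper, and still visually indistinguishable
        else:
            break  # errors only grow from here, so stop searching
    return best


# A hypothetical building with four pre-built detail levels.
building = [
    LodLevel(triangles=2_000_000, geometric_error=0.0),
    LodLevel(triangles=200_000, geometric_error=0.01),
    LodLevel(triangles=20_000, geometric_error=0.1),
    LodLevel(triangles=2_000, geometric_error=1.0),
]

for distance in (5.0, 50.0, 500.0, 5000.0):
    # Prints 2000000, 200000, 20000, then 2000 triangles as the building recedes.
    print(distance, choose_lod(building, distance).triangles)
```

The effect mirrors what was visible in the demo: a nearby building gets its full geometry, while a skyline tower a few kilometers away is drawn with a small fraction of the triangles, freeing memory and processing for the focal point of the shot.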

In another wide angle shot of the city we saw sunlight reflecting naturally off hundreds of surfaces, some of them reflective like office windows, some of them less reflective like stone walls. Those lighting effects are the work of a new intelligent scene illumination system called Lumen, which automatically takes care of the lighting and reflections in a scene so that the designer doesn’t have to fuss over them as much.

‘Creators’

Unreal Engine is known among developers as a high-end tool that’s commonly used to create high-quality PC and console games. Unreal may not be the least expensive gaming engine license you can get, but the new features in UE5 are aimed at improving the economics of game making as a whole. They do this mainly by cutting down on the time and person power needed to make a high-quality experience, which Sweeney tells me is “by far” the most expensive part of making a game.

“It’s all aimed at making game development much more accessible and making high-quality and photorealistic gaming and creation more accessible to far more developers,” Sweeney tells me.

“I’d like to make it possible for a ten-person team to build a photorealistic game that’s incredibly high quality,” he says. “Whereas right now, if you’re building everything by hand, it might be a 100-person team.”

But it’s about more than games. Epic hopes the efficiencies in UE5 might also open the door to developers in other industries who might not otherwise have given Epic’s engine a serious look. In fact, Sweeney and his team now often refer to Unreal Engine users not as developers but as “creators,” a broader term that encompasses developers big and small, inside and outside the gaming world.

Filmmakers began combining CGI and live action back in the 1980s. But the CGI often took lots of time and computer power to render (sometimes days for a single frame of film), so it had to be added to the live action in post-production, says Miles Perkins, who leads Epic’s media and entertainment industry business. The actors, acting in front of a green screen, couldn’t react to the CGI in real time, and the producer had to have faith that the CGI and live action would gel into a cohesive scene at the end of the process, Perkins says.

Instead of green screens, many producers now use large LED screens that display scenes and special effects created in Unreal Engine, running in real time behind and around the physical set and the actors. This lets the actors react more naturally to the special effects, and it lets the producer see whether and how the scene’s digital and physical components are working together.

The fashion industry has begun using Unreal Engine to create “digital twins” of real-world clothing and accessories. Sweeney tells me that fashion brands are excited about the prospect of selling clothing and accessories in the metaverse. “When you’re in the metaverse [you’ll] see some cool item of clothing and buy it and own it both digitally and physically, and it will be a way better way to find new clothing.” Shoppers will be able to put (digital) clothing on their avatars to see how it looks. It’s a very different experience from buying something in a 2D marketplace like Amazon, Sweeney says, where you must have faith that an article of clothing will fit right and look good.


Toward the metaverse

Despite all the tech industry hype over the past year or so, the concept of the metaverse–an immersive digital space where people (via their avatars) can socialize, play, or do business–is far from being fully realized.

Sweeney and Epic saw the concept coming years ago. Sometime after the 2017 release of Epic’s smash-hit survival/battle royale/sandbox game Fortnite, people began to linger in the Fortnite world after gameplay ended just to hang out with friends. They began coming to Fortnite to see concerts (Travis Scott’s Astronomical event, for example) or movie industry events (such as the premiere of a clip from Star Wars: The Rise of Skywalker). Epic calls these “tie-ins” or “crossover events.” On one level, they’re marketing events, but they also demonstrate that people are getting more comfortable doing things within virtual space. Such immersive digital experiences may characterize the next big paradigm in personal computing and the internet.

Bigger and better virtual worlds are probably coming, with more lifelike and believable digital spaces and digital humans. Building the various features of the metaverse will require lots of different tools, but tools like Unreal Engine that are already used to create immersive gaming environments are likely to play key roles.

Some of the new features in Unreal Engine 5 seem to suggest this. After seeing The Matrix Awakens demo, GamesBeat’s Dean Takahashi reflected: “It’s…a pretty good sign that Epic Games is serious about building its own metaverse, or enabling the customers of its game engine to build their version of the metaverse, the universe of virtual worlds that are all interconnected, like in novels such as Snow Crash and Ready Player One.”

In such an “open” universe, individual developers wouldn’t so much “build a metaverse” as “build for the metaverse.” A social network might build its own virtual island. A gaming company might hold scheduled gaming events at pre-announced places in-world. A retailer might build a large digital storefront with an interior for shopping. Sweeney believes such an open world would require in-world companies and other organizations to use a set of open standards so that people’s avatars can move between “worlds.”

“I think we can build this open version of the Metaverse over the next decade on the foundation of open systems, open standards and companies being willing to work together on the basis of respecting their mutual customer relationships,” he says. “You can come in with an account from one ecosystem and play in another and everybody just respects those relationships. And there’s a healthy competition for every facet of the ecosystem.”

Eventually, citizens of the metaverse will likely demand true-to-life environments, just as gamers have consistently demanded more lifelike experiences from games. Epic is trying to push Unreal Engine toward satisfying that demand. In some respects, the engine is getting creepily close; in others, the need for more work is clear. Most importantly, though, the improvement from UE4 to UE5 suggests that the holy grail of movie-quality digital experiences is within reach.

Of course, the development of the metaverse depends on more than lifelike graphics. Sweeney sees it as a totally new medium with new forms of commerce and new rules for trust, privacy, and identity.

“Ultimately, these are still the early days of this new medium,” Sweeney says, “and I think we’ll see a vast amount of innovation as more and more companies and people try different things and see what works.”

There was something weirdly incongruous about discussing such expansive ideas with Sweeney in such an ordinary setting. We sat at a patio table just outside Epic’s big, nondescript office building on a gray and gusty March day, imagining the likely future of the internet: a metaverse that could cause a seismic shift as big as the arrival of Internet 1.0. It could get really wild, and it could become a place that credibly competes with reality for people’s time and attention.

After our interview, Sweeney wandered off toward his car, one of the few parked in the lot, since most employees were still working remotely. Back inside the Epic building, I passed a darkened office where someone was staring into the light of a computer monitor, working.

This quiet place is already at the epicenter of gaming, I thought to myself, and it could become one of the birthplaces of a new digital reality, the metaverse.
