Facebook is changing how it reaches people who have encountered misinformation on its platform. The company will now send notifications to anyone who has liked, commented on, or shared a piece of COVID-19 misinformation that’s been taken down for violating the platform’s terms of service. It will then connect users with trustworthy sources in an effort to correct the record. While researchers think the additional context could help people better understand their news consumption habits, it may be too little, too late to stem the tide of COVID-19 misinformation.

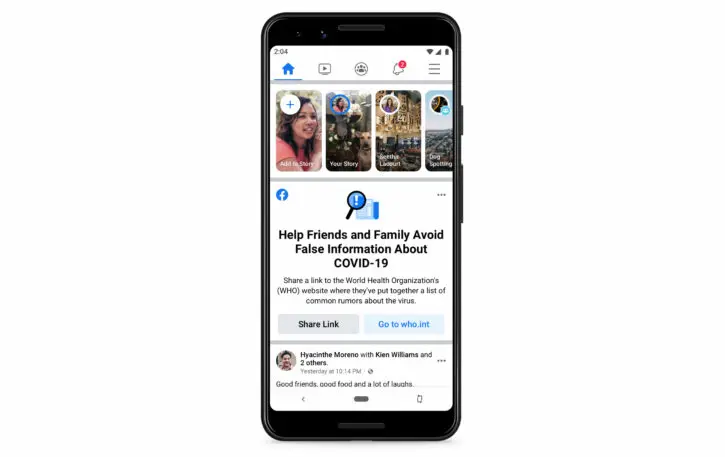

These proactive misinformation notifications are Facebook’s latest attempt to let platform users know they’ve come into contact with misinformation that was removed from the site. The company first launched this concept in April through a news feed post that pointed users who’d engaged with misinformation to a landing page on the World Health Organization’s website with debunked COVID-19 myths.

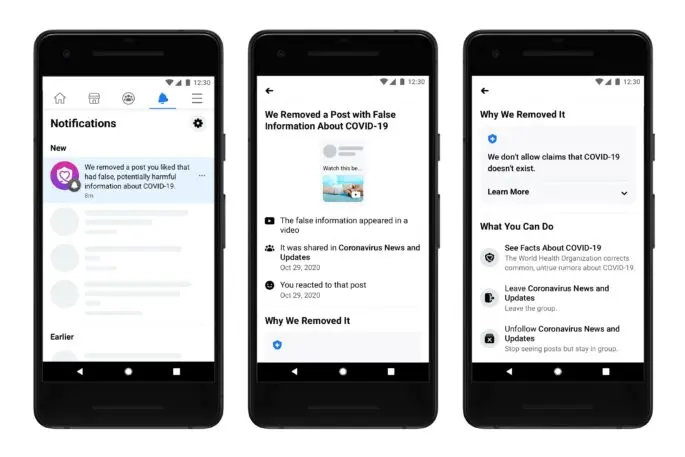

Now, Facebook is reaching out directly with notifications that read: “We removed a post you liked that had false, potentially harmful information about COVID-19.”

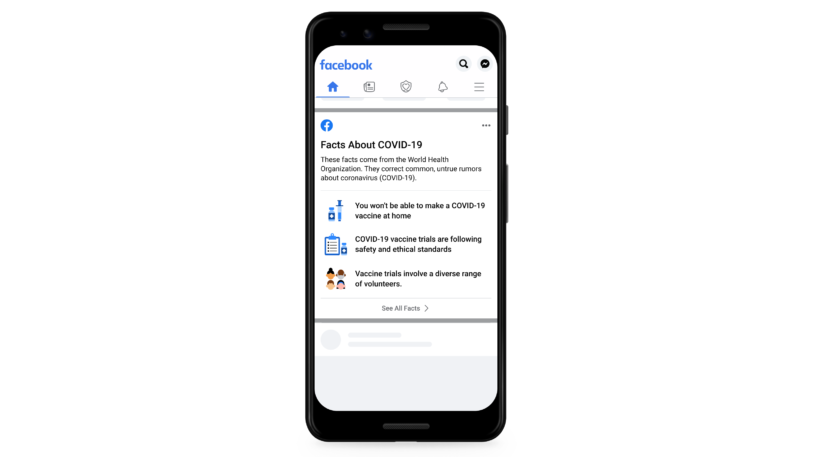

Clicking on the notification will take users to a landing page where they can see a thumbnail image of the offending content, a description of whether they liked, shared, or commented on the post, and why it was removed from Facebook. It also offers follow-up actions, like the option to unsubscribe from the group that originally posted the false information or to “see facts” about COVID-19.

What wasn’t working

There’s a good reason for the change in execution: Facebook found that it wasn’t clear to users why posts in their news feed were urging them to get the facts about COVID-19.

“People didn’t really understand why they were seeing this message,” says Valerio Magliulo, a product manager at Facebook who worked on the new notification system. “There wasn’t a clear link between what they were reading on Facebook from that message and the content they interacted with.”

Facebook redesigned the experience so that the person would understand what exact information they came into contact with that was false—important context it didn’t include in its original launch format.

“The challenge we were facing and the fine balance we’re trying to strike is how do we provide enough information to give the user context about [the interaction] we’re talking about without reexposing them to misinformation,” says Magliulo. The concern that Magliulo highlights is that the platform could unintentionally reinforce the misinformation it’s trying to debunk.

But according to Alex Leavitt, a misinformation product researcher at Facebook, the backfire effect, or the possibility that correcting misinformation might lead people to cling to misinformation more, is minimal.

That’s why the change appears to be a half-step measure. While the new notification is much more specific than the original feature, which gave users no information about the false information they interacted with at all, it still may not be explicit enough. The feature doesn’t actually debunk any false narratives. While Facebook does connect users to a list dispelling the most common myths surrounding COVID-19, it doesn’t address the particular misinformation that a person engaged with.

So why not provide a more direct rebuttal to the definitively false information a person has experienced?

Facebook says that’s because it can’t show users a post that’s already been taken down. In addition, the company doesn’t want to shame the person who posted the misinformation in the first place, as it may have been unintentional.

Leavitt also says one-size-fits-all experiences are easier to validate. When a debunking message is tailored to a small group of people who have all interacted with the same piece of misinformation, “it’s more difficult to try and find really strong effect sizes in experiments or surveys to make sure that it’s actually working,” he says.

An overwhelming tide

While this change might be a half-step in the right direction, researchers remain concerned that Facebook’s efforts to fight misinformation are too little, too late.

Meanwhile, COVID-19 misinformation, particularly about vaccines, continues to spread rapidly. In May 2020, Nature published a study (based on data collected from February to October 2019, before the pandemic began) that found that while anti-vaccination groups contain fewer members than pro-vaccination groups, they have a larger number of pages, experience more growth, and are more connected to users who haven’t made up their minds about vaccines. An October report from the U.K.-based nonprofit Center for Countering Digital Hate (CCDH) finds that some 31 million people follow anti-vaccination groups on Facebook.

“I think it’s great that Facebook is conscientiously thinking about misinformation on the platform specifically related to COVID-19 vaccines and they are trying to redirect people to more trustworthy sources,” says Kolina Koltai, a post-doctoral researcher who works at the University of Washington’s Center for an Informed Public. “But they’ve got a lot of work cut out for them just because of the quick moving nature of misinformation.”

Koltai says that purveyors of disinformation, or intentionally false information, have gotten really good at creating content that narrowly evades violating Facebook’s terms of service. One way they do this is by using true information to make false assertions. For example, during the initial rollout of Pfizer’s COVID-19 vaccine in the U.K., two recipients with a history of severe allergies had an adverse reaction after receiving the vaccine. Some groups used this story to incorrectly assert that the vaccine is unsafe. All scientific evidence points to the contrary. So far, studies show the vaccine to be very safe.

What makes COVID-19 misinformation especially difficult to debunk and therefore justify taking down, she says, is that it’s so new. “There’s decades of research showing the MMR vaccine is safe,” she says. “We don’t have that with the COVID-19 [vaccine]. How do you debunk something that’s so in its infancy?”

In practice, this means that some COVID-19 misinformation stays up on the platform, though just how much is unclear. The CCDH says it reported 334 instances of COVID-19 misinformation and Facebook only removed a quarter of the content. Facebook says it has removed more than 12 million pieces of COVID-19 misinformation that could lead to physical harm across Facebook and Instagram.

In addition to removal, which Facebook reserves for content it deems harmful, Facebook also appends fact-checking labels to COVID-19 misinformation. However, it doesn’t necessarily notify someone who came into contact with that misinformation before the label was applied. Right now, it’s only informing people when they came into contact with misinformation that was taken down.

That could change over time. Facebook’s Leavitt says the company is working to direct users to the most up-to-date and credible information that it can.

“This is just part of the iteration that we’ve been going through,” says Leavitt. For now, he says, his group wants to make sure this feature is positively impacting people who are liking, commenting, and sharing misinformation. “In the future we might consider expanding it beyond that.”

Those changes may not come quickly enough. As Koltai pointed out, the time it takes to create misinformation is minuscule compared with the complex labor required to take it down. Speed is necessary to beat it back. Facebook’s plodding approach for an issue of such importance is ironic for a company once known for moving fast and breaking things.

This story has been updated with clarifications from Facebook.
