As someone who has seen a lot of awful, often fatal police encounters, Rick Smith has a few ideas for how to fix American law enforcement. In the past decade, those ideas have turned his company, Axon, into a policing juggernaut. Take the Taser, its best-selling energy weapon, intended as an answer to deadly encounters, as Smith described last year in his book, The End of Killing. “Gun violence is not something people think of as a tech problem,” he says. “They think about gun control, or some other politics, is the way to deal with it. We think, let’s just make the bullet obsolete.”

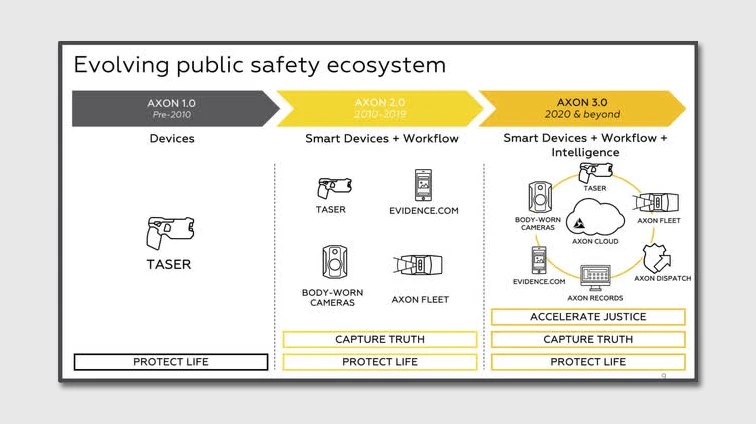

The body camera was a solution to another set of big problems. In the wake of Michael Brown’s death in Ferguson, Axon began pitching the devices as a way to document otherwise unseen encounters, or to supplement—or counterbalance—growing piles of citizen footage, from the VHS tape of Rodney King to the Facebook Live stream of Alton Sterling. While the impact of body cameras on policing remains ambiguous, lawmakers across the country have spent millions on the devices and evidence-management software, encouraged by such things as an Axon camera giveaway. In the process, Smith’s firm, which changed its name from Taser three years ago, has begun to look more like a tech company, with the profits and compensation packages to match.

“Look, we are a for-profit business,” says Smith, “but if we solve really big problems, I’m sure we can come up with financial models that make it make sense.”

“If you think that ultimately, we want to change policing behavior, well we have all these videos of incidents in policing, and that seems like that’s a pretty valuable resource,” says Smith. “How can agencies put those videos to use?”

One solution is live body-camera video. A new Axon product, Respond, integrates real-time camera data with information from 911 and police dispatch centers, completing a software suite aimed at digitizing police departments’ often antiquated workflows. (The department in Maricopa, Arizona, is Axon’s first customer for the platform.) This could, for example, allow mental health professionals to remotely “call in” to police encounters and help defuse potentially fatal situations. The company is also offering a set of VR training videos focused on encounters with people in mental health crises.

Another idea for identifying potentially abusive behavior is automated transcription and other AI tools. Axon’s new video player generates text from hours of body-camera video in minutes. Eventually, Smith hopes to save officers’ time by automatically writing up their police reports. But in the meantime, the software could offer a superhuman power: the ability to search police video for a specific incident—or type of incident.

In 2018, Axon received a patent for software that can identify faces, objects, and sounds in body-camera video in real time. In a patent awarded last month, Axon engineers describe software for finding words, locations, clothing, weapons, buildings, and other objects. AI could also identify “the characteristics” of speech, including volume, tone (“e.g., menacing, threatening, helpful, kind”), frequency range, or emotions (“e.g., anger, elation”), according to the patent filings.

Using machines to scan video for suspicious language, objects, or behavior is not completely new; it’s already being done with stationary surveillance cameras and oceans of YouTube and Facebook videos. But using AI to tag body-camera footage, either after the fact or in real time, would give the police dramatic new surveillance powers. And ethical or legal problems aside, interpreting body-camera footage can be a heavy lift for AI.

“Replacing the level and complexity and depth of a report generated by a human is crazy hard,” says Genevieve Patterson, a computer vision researcher and cofounder of Trash, a social video app. “What is difficult and scary for people about this is that, in the law enforcement context, the stakes could be life or death.”

Smith says the keyword search feature is not yet active. Last year he announced Axon was pressing pause on the use of face recognition, citing the concerns of its AI ethics advisory board. (Amazon, which had also quietly hyped face recognition for body cameras, put sales of its own software on hold in June, with Microsoft and IBM also halting usage of the technology.) Instead, Axon is focusing on software for transcribing footage and license plate reading.

• Read more about the Future of Policing

Smith also faces a more low-tech challenge: making his ideas acceptable to often intransigent police unions. Police officers aren’t exactly clamoring for more scrutiny, especially if it’s being done by a computer. In Minneapolis, the Police Department has partnered with a company called Benchmark Analytics to implement an early-warning system for problematic cops, but the program has suffered from delays and funding issues.

Meanwhile, many communities aren’t calling for more technology for their police but for deep reform, if not deep budget cuts. “It’s incumbent upon the technology companies involved in policing to think about how their products can help improve accountability,” says Barry Friedman, a constitutional law professor who runs the Policing Project at NYU and sits on the Axon ethics board. “We have been encouraging Axon to think about their customer as the community, not just as a policing agency.”

Smith recently spoke with me from home in Scottsdale, Arizona, about that idea, and how he sees technology helping police at a moment of crisis—one that he thinks “has a much greater chance of actually driving lasting change.” This interview has been edited and condensed for clarity.

Better cops through data

Fast Company: Your cameras have been witness to countless incidents of police violence, even if the public often doesn’t get to see the footage. Meanwhile, there are growing calls to defund the police, which could drain the budgets that pay for your technology. How has the push for police reform changed things for Axon?

Rick Smith: We’ve seen that there have been calls to defund the police, but I think those are really translating into calls to reform police. Ultimately, there’s an acknowledgment that reform is going to need technology tools. So we’re careful to say, “Look, technology is not going to go solve all these problems for us.” However, we can’t solve problems very well without technology. We need information systems that track key metrics that we’re identifying as important. And ultimately we believe it is shifting some of the things on our road map around.

FC: Most of the videos of police abuse that we get to see come from civilians rather than police. The body-camera videos from the George Floyd incident still have not been released to the public. I wonder how you see body cameras in particular playing a role in police reform.

RS: I try to be somewhat impartial, and I guess this might be because I’m in the body-camera business, but I think body cameras made a difference [in the case of George Floyd]. If you didn’t have body cameras there, I think what could have happened was, yes, you would have had some videos from cell phones, but that’s only of a few snippets of the incident, and those only started after things were already going pretty badly. The body cameras bring views from multiple officers of the entire event.

The [Minneapolis] park police did release their body camera footage [showing some of the initial encounter at a distance]. And I think there was enough that you just got a chance to see how the event was unfolding in a way such that there was no unbroken moment—without that, I think there could have been the response “Well, you know, right before these other videos, George Floyd was violently fighting with police” or something like that. I think these videos just sort of foreclosed any repositioning of what happened. Or to be more colorful, you might say the truth had nowhere to hide.

And what happened? There were police chiefs within hours across the country who were coming out and saying, “This was wrong, they murdered George Floyd, and things have to change.” I’ve never seen that happen. I’ve never seen cops, police leaders, come out and criticize each other.

When you think about transparent and accountable policing, there’s a big role for policy. But we think body cameras are a technology that can have a huge impact. So when we think about racism and racial equity, we are now challenging ourselves to say, Okay, how do we make that a technology problem? How might we use keyword search to surface videos with racial epithets?

And how might we introduce new VR training that either pushes officer intervention, or where we could do racial bias training in a way that is more impactful? Impactful such that, when the subject takes that headset off, we want them to feel physically ill. What we’re showing them, we need to pick something that’s emotionally powerful, not just a reason to check a checkbox.

Coming out of the George Floyd incident, one of the big areas for improvement is officer intervention. Could we get to a world where there are no aggressive cops who are going to cross the line? Probably not. However, could we get to a world where four other officers would not stand around while one officer blatantly crosses the line? Now, that’s going to take some real work.

But there’s a lot of acceptance because of George Floyd—as I’m talking to police chiefs, they’re like, “Yeah, we absolutely need to do a better job of breaking that part of police culture and getting to a point where officers, no matter how junior, are given a way to safely intervene.” We’re doing two VR scenarios exactly on this officer intervention issue. We’re going to put cops in VR—not in the George Floyd incident, but in other scenarios where an officer starts crossing the line—and then we’re going to be taking them through and training them effectively such that you need to intervene. Because it’s not just about general public safety: it is your career that can be on the line if you don’t do it right.

Body-cam footage as game tapes

FC: You mentioned the ability to search for keywords in body-camera video. What does that mean for police accountability?

RS: Recently there was a case in North Carolina where a random video review found two officers sitting in a car having a conversation that was very racially charged, about how there was a coming race war and they were ready to go out and kill—basically they were using the N-word and other racist slurs. The officers were fired, but that was a case where the department found the video by just pure luck.

We have a tool called Performance that helps police departments do random video selection and review. But one of the things we’re discussing with policing agencies right now is, How do we use AI to make you more efficient than just picking random videos? With random videos, it’s going to be pretty rare that you find something that went wrong. And with this new transcription product, we can now do word searches to help surface videos.

Six months ago, if I mentioned that concept, pretty much every agency I talked to would have said—or did say—“Nope, we only want random video review, because that’s kind of what’s acceptable to the unions and to other parties.” But now we’re hearing a very different tune from police chiefs: “No, we actually need better tools, so that for those videos, we need to find them and review them. We can’t have them sitting around surreptitiously in our evidence files.”

We’ve not yet launched a video search tool to search across videos with keywords, but we are having active conversations about that as a potential next step in how we would use these AI tools.

FC: Face-recognizing police cameras are considered unpalatable for many communities. I imagine some officers would feel more surveilled by this kind of AI too. How do you surmount that hurdle?

RS: We could use a variety of technical approaches, or change business processes. The simplest one is—and I’m having a number of calls with police chiefs right now about it—what could we change in policing culture and policy to where individual officers might nominate difficult incidents for coaching and review?

Historically that really doesn’t happen, because policing has a very rigid, discipline-focused culture. If you’re a cop on the street—especially now that the world is in a pretty negative orientation towards policing—and if you are in a difficult situation, the last thing in the world that you would want is for that incident to go into some sort of review process. Because ultimately only bad things will happen to you: You might lose pay, you might get days off without pay. You might get fired.

And so, one idea that’s been interesting as I’ve been talking to policing leaders is that in pro sports, athletes review their game tapes rigorously because they’re trying to improve their performance in the next game. That is not something that culturally happens in law enforcement. But these things are happening in a couple of different places. The punchline is, to make policing better, we probably don’t need more punitive measures on police; we actually need to find ways to incentivize [officers to nominate themselves for] positive self-review.

What we’re hearing from our actual customers is, right now, they wouldn’t use software for this, because the policies out there wouldn’t be compatible with it. But my next call is with an agency that we are in discussions with about giving this a try. And what we can do is, I’m now challenging our team to go and build the software systems to enable this sort of review.

https://vimeo.com/333871354

FC: Axon has shifted from weapons maker to essentially a tech company. You’ve bought a few machine vision startups and hired a couple of former higher-ups at Amazon Alexa to run software and AI. Yours was also one of the first public companies to announce a pause on face recognition. What role does AI play in the future of law enforcement?

RS: The aspects of AI are certainly important, but there are so many low-hanging user interface issues that we think can make a big difference. We don’t want to be out over our skis. I do think with our AI ethics board, I think we’ve got a lot of perspectives about the risks of getting AI wrong. We should use it carefully. And first, in places where we can do no harm. So things like doing post-incident transcription, as long as there’s a preservation of the audio-video record, that’s pretty low-risk.

I’d say right now in the world of Silicon Valley, we’re not on the bleeding edge of pushing for real-time AI. We’re solving for pedestrian user-interface problems that to our customers are still really impactful. We’re building AI systems primarily focusing on automating post-incident efficiency issues that are very valuable and have clear ROI to our customers, more so than trying to do real-time AI that brings some real risks.

The payoff is not there yet to take those risks, when we can probably have a bigger impact by just fixing the way the user interacts with the technology first. And we think that’s setting us up for a world where we can begin to use more AI in real time.

FC: There are few other companies that have potential access to so much data about how policing works. It relates to another question that is at the forefront in terms of policing, especially around body cameras: Who should control that video, and who gets to see it?

RS: First and foremost, it should not be us to control that footage. We are self-aware that we are a for-profit corporation, and our role is building the systems to manage this data on behalf of our agency customers. As of today, the way that’s built, there are system admins that are within the police agencies themselves that basically manage the policies around how that data is managed.

I could envision some time when cities might ultimately decide that they want to have some other agency within the city that might have some authority over how that data is being managed. Ultimately, police departments still defer to mayors, city managers, and city councils.

One thing that we’re actively looking at right now: We have a new use-of-force reporting system called Axon Standards, which basically is a system agencies can use to document their use-of-force incidents. It makes it pretty easy to include video and photos and also the Taser logs, all into one system.

We are building a system that’s really optimized for gathering all that information and moving it through a workflow that includes giving access to the key reviewers that might be on citizen oversight committees. As part of that work, we’re also looking at how we might be able to help agencies be able to share their data in some sort of de-identified way for academic studies. For obvious reasons, it’s just really hard for academics to get good access to the data because you have all the privacy concerns.

FC: For a company like Axon, what is the right role to play in police reform?

RS: I think we’re in this unique position in that we are not police or an agency—we’re technologists who work a lot with police. But that gives us the ability to be a thought partner in ways. If you’re a police chief right now, you are just trying to survive and get through this time. It’s really hard to step outside and be objective about your agency. And so, for example, one of the things that we’ve done recently, we created a new position, a vice president of community impact, Regina Holloway, [an attorney and Atlantic Fellow for Racial Equity] who comes from the police reform community in Chicago. Basically, her job is to help us engage better with community members.

FC: How did that come about?

RS: We talk to police all the time. That’s our job. When we formed our AI ethics board, part of their critical feedback was, Hey, wait a minute: You know, your ultimate customers are the taxpayers in these communities. Not just the police.

There was a lot of pressure for a time there, on me in particular personally, and on the company, like, What are you going to do, to understand the concerns of the community that are feeling like they’re being overpoliced? And so we hired Regina, and what’s been interesting about this is, when you get these different voices in the room, to me, it’s quite uplifting about the solution orientation that becomes possible.

FC: For example? How does Axon engage community members in planning some of these new products?

RS: If you watch the news right now, you see a lot of anger about policing issues. You see Black Lives Matter and Blue Lives Matter, representing these two poles, where on one pole it’s almost like the police can do no wrong and these protesters are bad folks. And on the other side, it’s the exact opposite view: The police are thugs.

But ultimately we get in the room together. And more people from the community who are sitting around the table are seeing it too. They’re saying, “Yeah, you know, this isn’t going to get better by just punitive measures on police. We actually need to rethink the way police agencies are managed.”

And so for me, it’s a really exciting thing to be involved with. That we can help bring these two viewpoints together. And now ultimately, to incentivize officers to do this, we’re going to need this change in the policy that we would negotiate together with community leaders in law enforcement.

And what’s sort of unique when you write software is that it becomes tangible, instead of this amorphous idea of “How would we do officer review?” I can show them screen mock-ups. Like, “Here’s a camera. Here’s how a cop would mark that this was a challenging incident.” We can kind of make it real to where, when they’re working on their policy, it’s not some ill-formed idea, but the software can give the idea real structure as to how it works.
