In late April, an investigation by The Guardian tied widespread 5G and coronavirus conspiracy theories to an evangelical pastor in England.

The pastor, Jonathan James, baselessly claimed in an audio recording that 5G wireless networking would allow Bill Gates to track people who’d been vaccinated for the coronavirus, and that the virus itself was a cover-up for 5G’s poisonous effects. On YouTube and private messaging apps such as WhatsApp, anti-5G groups pushed the sermon-like recording as coming from a “former Vodafone boss,” lending it an air of credibility.

In fact, The Guardian reported that the pastor had only worked in a sales role at Vodafone for less than a year in 2014, long before networks even started deploying 5G. But the publication wasn’t working alone in debunking the story. It had help from a startup called Logically, which combines AI, automation, and human fact-checkers to root out fake news.

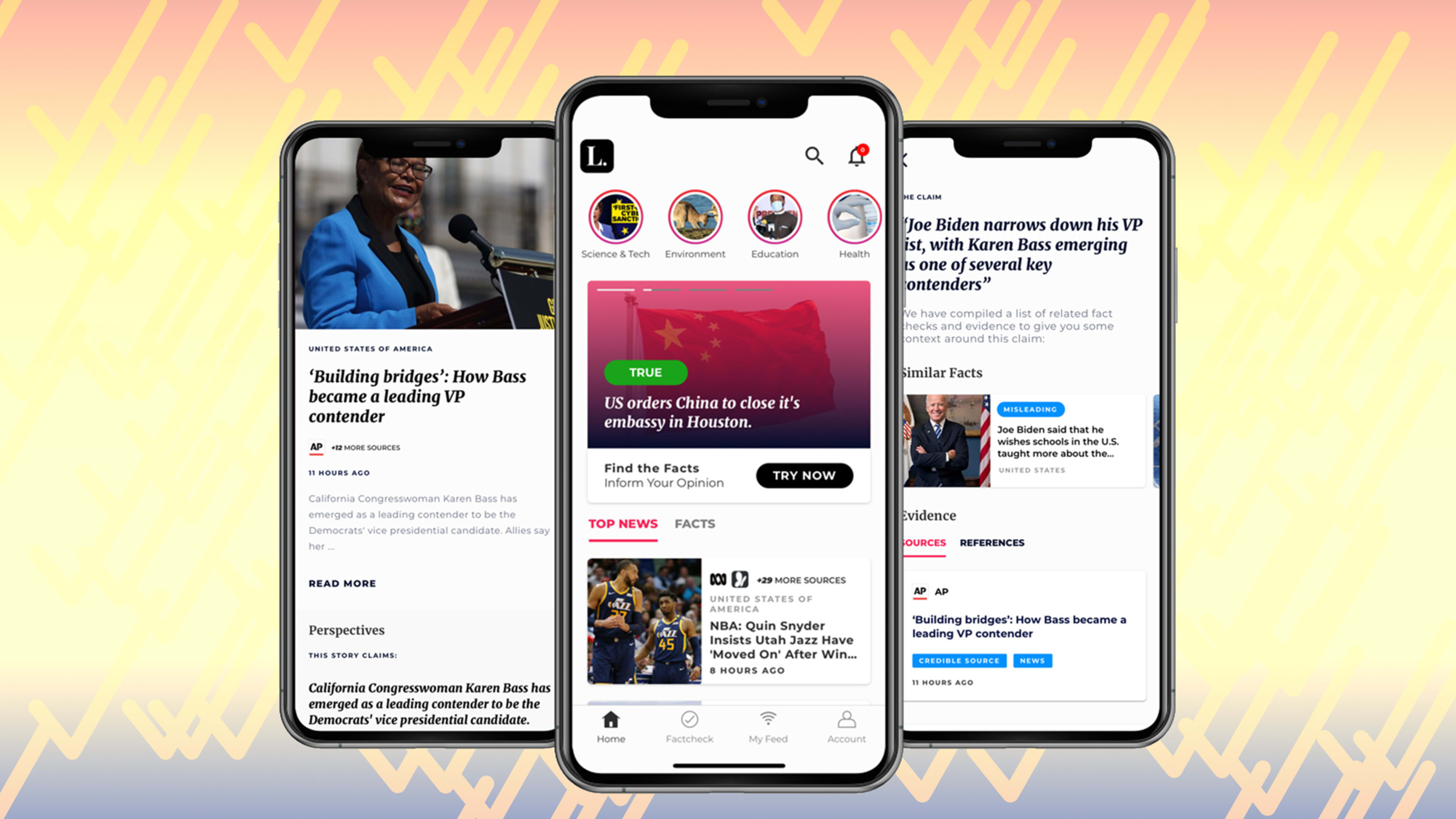

Logically has already worked with government agencies in India and the United Kingdom to identify extremist content and disinformation campaigns, and now it’s launching in the United States, both as a fact-checking product for consumers and as a service for organizations and government agencies that need help dealing with a tide of misinformation. In doing so, it’s going a clear step further than social networks themselves, which have struggled to flag and contain bad information on their own platforms.

“What we’d like to do is bring the industrial revolution to fact-checking,” founder Lyric Jain says in an interview.

Faster fact-checking

Logically isn’t the only group that’s trying to fuse fact-checking with automation. Facebook says it uses machine learning to flag potential misinformation on its network so third-party fact-checkers can review it. Full Fact, a nonprofit based in the United Kingdom, gives human fact-checkers tools to find false claims and match them with refuting facts or reporting, and is developing a machine learning model that can debunk some claims without human involvement. The Duke University Reporters’ Lab created a tool for readers that automatically pairs public figures’ claims with the work of human fact-checking organizations.

Jain says that Logically’s methodology is a bit different. Beyond just matching up bogus claims with evidence to the contrary, the startup treats the fact-checking process like an assembly line, with an algorithm prioritizing and doling out tasks. As a basic example, one step might involve finding the source of a rumor, while another might involve researching the claim. Logically’s system can handle some of these tasks automatically, but it can also hand out assignments to human fact-checkers based on their area of expertise.

“We break down the entire fact-checking process into piecemeal steps, so that each individual is able to carry out a step that they specialize in,” Jain says.

The startup employs about 40 full-time fact-checkers overseas and expects to hire nearly a dozen in the United States within the next six to nine months, Jain says. And he describes an array of tools that the company has developed: There are algorithms that can analyze media for signs of doctoring, ways to recognize coordinated behavior from bots, a “super-focused version of Google on steroids” that tracks down sources of evidence, and forensic tools for finding the original source of a false claim. For The Guardian’s investigation, it even used an experimental “Rumor Tracer” tool that lets people report a text message or image they received, along with their phone numbers and the numbers of anyone who shared or received the rumor.

Jain hopes that the combination of all these methods will allow its fact-checkers to respond faster and more efficiently.

“When you compare the volume of claims fact-checking organizations are able to get through to the misinformation that exists, the comparison isn’t even worth being made,” he says. “We’d like to change that balance.”

Friends as “whistleblowers”

Identifying and debunking false claims is only half the battle, though. Logically is also bringing news consumers into the fold through a newly launched browser extension and mobile app, so they can check on dubious articles themselves.

I’ve been using a pre-release version of the extension, and have indeed found that it can work pretty well.

When I searched on DuckDuckGo for “5G causes cancer,” for instance, one of the first results was from a website called cancer.news, offering “7 Reasons why 5G is a threat to overall health.” Logically’s extension immediately displayed a pop-up asking if I really wanted to read the article, and clicking the extension brought up a warning that “this article has a chance of being Junk Science.”

Although Logically hadn’t fact-checked the cancer.news story specifically, its algorithms had accurately predicted low credibility for both the news source and the article itself. Those predictions can be based both on the source's track record and on what the company calls "the hallmarks of misinformation" in the story itself. When Logically isn’t able to rate an article immediately, users can send off parts of the article for further fact-checking.

Because mobile apps are more limited than browser extensions, Logically’s app won’t be able to detect dubious claims automatically, but users will be able to hit the share button on iOS or Android to check on links that they read in other apps.

Jain acknowledges that the people who would most benefit from seeing a fact-check probably aren’t seeking out services like Logically. Still, he’s hopeful that a small group of truth-conscious readers will be enough to snuff out falsehoods before they become widespread, particularly on closed networks like WhatsApp.

“Misinformation is most damaging when it first comes out of those concentrated groups into the wider public,” he says. “If we have whistleblowers in the wider public, exposed to those forums . . . we effectively have a chance to respond very soon to what’s still an emerging threat, in the pre-viral stages.”

Beyond just putting the burden on users, Logically is also interested in working with government partners and other groups to identify false claims. Last year, it worked with an unnamed “social platform” to report on the spread of fake news before India’s general election. It analyzed 1 million articles, finding 14% to be unreliable and 25% to be false. Logically also teamed up with Maharashtra’s cyber police to monitor for misinformation on social media ahead of the state’s Legislative Assembly elections, and in the United Kingdom, it worked with the Metropolitan Police to develop a tool that identifies extremist content.

Jain says the systems it’s launching in the United States are built on the work it’s already done overseas.

“This is the same platform that we’ve been building for three years,” Jain says. “We’ve been battle-tested and battle-hardened.”

Beyond truthiness

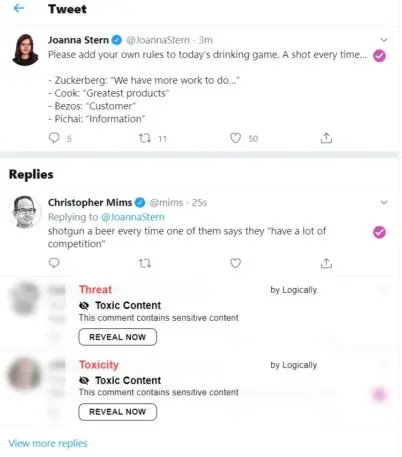

Logically isn’t just interested in swatting down falsehoods. The startup’s browser extension also analyzes the sentiment of comments on sites like Twitter and YouTube, and can hide toxic, profane, or obscene content until users click through to reveal it.

“We’re kind of branching the discussion away from just false content into this broader category of harmful content,” Jain says.

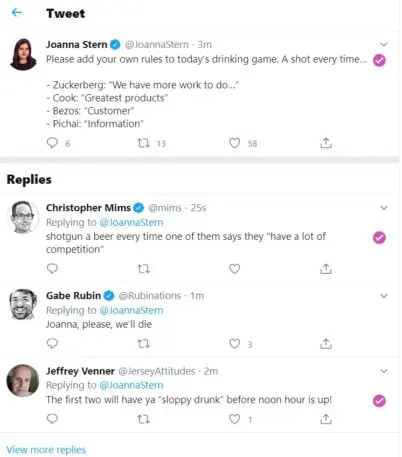

Compared to Logically’s fact-checking, the results here are a bit shakier. In my experience, it would sometimes flag tongue-in-cheek content as toxic, or innocuous content as obscene. Still, Jain believes its experimentation in this area can become a model for social networks like Twitter and Facebook over time.

“If we’re able to return some of this power back to end users, the end user can pick and choose what type of online harm they’d like to [be exposed to], and over time there can become industry standards,” he says.

All of this raises the question of why social networks don’t offer these kinds of tools themselves. Imagine if Facebook, for instance, provided its users with the kinds of instant fact-checks that Logically makes available through its browser extension and app, or if Twitter gave users even more granular screening options for toxic or offensive content. Such tools would be a lot more powerful and useful if they were built into the actual products, rather than just tacked on by a third party.

Jain believes these kinds of controls are too fraught with political peril for companies like Facebook and Twitter to offer directly. But that might change if Logically can prove itself over time.

“We can afford to take this rather large, innovative leap, but for a platform like Twitter or Facebook with hundreds of millions or billions of users, it’s probably a leap too far without sufficient evidence,” he says. “Hopefully we can help accelerate or provide some of that evidence.”

Recognize your brand’s excellence by applying to this year’s Brands That Matter Awards before the early-rate deadline, May 3.