Robert Mercer invested in Cambridge Analytica, the company that became infamous for siphoning off Facebook users’ information to target political ads. The shady data firm worked for the 2016 Trump campaign and Leave.EU, a pro-Brexit group. The Trump campaign also employed Steve Bannon, the right-wing firebrand who has ties to Mercer and claims Brexit campaign leader Nigel Farage as a personal friend.

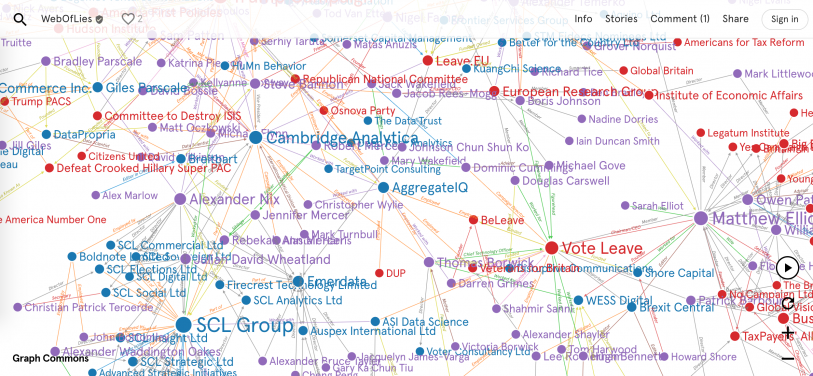

The links between the Trump campaign and the successful push for the United Kingdom to leave the European Union are just a few of the connections highlighted by an interactive project called WebOfLies that was first released in January. It’s reminiscent of follow-the-money graphics released by major news organizations in recent years, and it reports a web of connections between world figures such as President Trump, U.K. Prime Minister Boris Johnson, former Cambridge Analytica CEO Alexander Nix, and Russian intelligence agencies. But it wasn’t produced by a major news outlet. Instead, it was developed by a user who goes by the name MinimalGravitas on the sprawling discussion platform Reddit, where everyday commenters are increasingly seeking to strike back against those who would spread potentially harmful disinformation online.

“It seems to me if I think truth matters, then I’ve morally not got much choice but to try and understand and fight back against this sort of thing,” writes MinimalGravitas in an email.

On Reddit, in forums with names such as r/trollfare, r/ActiveMeasures, r/Against_Astroturfing, and r/DisinformationWatch, mostly pseudonymous users share links to news stories and academic research papers about online disinformation campaigns. Many post their own research into suspicious clusters of accounts pushing misleading posts on Reddit and other social networks. Some swap tips on how to steer friends and family away from biased news sources. Other subreddits, as Reddit forums are known, specifically aim to help users trying to separate truth from disinformation. One example is r/SJWRabbitHole, which serves as a kind of online support network for people turning away from the alt-right.

MinimalGravitas says they created WebOfLies to help people understand news stories about the sometimes-obscure figures said to be linked to election manipulation efforts around the world.

“If, for example, a Russian oligarch is mentioned in discussion of election interference, I want people to be able to ‘Ctrl F’ in WebOfLies and find out who that person is linked to, which companies they are involved with, which politicians they have met with, etc.,” they write. MinimalGravitas, like every other Redditor interviewed for this story, declined to give their real name out of concern about retaliation.

The Reddit user, who recently finished postgraduate studies in physics, is also working on a project to summarize a wiki listing of academic papers on misinformation collected by r/trollfare users. They hope to produce an easy-to-understand report on how to avoid falling prey to manipulative online content.

Recent years have seen a host of academics, companies, and nonprofits, from the Atlantic Council to Vietnam Veterans of America, looking into misinformation campaigns online and reporting their findings to big social networks and to authorities. But Reddit, which has incubated conspiracy theories such as Pizzagate and QAnon, has become a perhaps unlikely home for passionate amateurs looking to fight back in the information wars. One challenge is evident on Reddit itself: While the disinformation-fighting subreddits generally have subscriber counts in the low thousands, forums such as the Gamergate hub r/KotakuInAction or conspiracy theory subreddits such as r/conspiracy can have subscriber counts in the hundreds of thousands or even millions. The audience for false information, in other words, is far bigger than the audience for those trying to fact-check it.

“Trying to understand how the universe works is hard enough for humanity without some assholes trying to mislead people,” writes MinimalGravitas.

Reddit has long relied on its small army of volunteer moderators to keep the site livable despite a never-ending onslaught of trolls, spammers, and, now, avid propagandists. “They’re so reliant on these people who are really doing amazing work under circumstances that are terrible,” says Adrienne Massanari, an associate professor of communication at the University of Illinois at Chicago who has researched Reddit’s relationship with conspiracy theories and the alt-right.

And the task has arguably gotten even harder as the general climate of political distrust has made many users suspicious of anyone in power, including moderators and Reddit’s paid employees, says abrownn, a moderator for several massive subreddits, including r/worldnews (23 million members) and r/technology (8.6 million members), who has tracked misinformation on the platform.

“As we approach the 2020 election, things are only going to get worse,” he says. “There will be more blogs pushing misinformation or disinformation. There will be more people calling for the ignoring of decorum or rules.”

A mixed history on misinformation

Like most major social networks, Reddit has a decidedly mixed record when it comes to misinformation. There are subreddits such as r/AskHistorians and r/AskScience where users can ask experts questions and all but academically rigorous answers are removed. There are also plenty of innocuous forums where users post everything from photos of their pets to dating advice. But the site has historically also struggled to deal with a variety of false and misleading content, which means many of the Redditors looking to combat misinformation find themselves fighting falsehoods on the site itself.

Perhaps most famously, after the 2013 Boston Marathon bombing, amateur sleuths on Reddit forums trying to find those responsible pointed fingers at innocent bystanders. Subreddits also gave a platform to Gamergate, the movement that spread falsehoods about video game developers and journalists, leading to harassment and death threats. Reddit has also hosted adherents of conspiracy theories such as Pizzagate, which alleged Democratic Party officials were involved in sex trafficking through pizzerias, and QAnon, which claims Trump is secretly fighting government corruption. (Reddit says the subreddits dedicated to Pizzagate and QAnon were banned, though the conspiracy theories still propagate elsewhere on the site.)

The site, which was among the first companies backed by the now-celebrated accelerator Y Combinator, has always attracted people with a certain nerdy, contrarian bent looking to share information not easily found elsewhere. Massanari says that may have contributed to it becoming a bit of a haven for conspiracy theorists.

“I think why Reddit has become a place for this has a lot to do with the nature of the conversations that go on there,” she says. “A lot of Redditors see themselves as smarter or more invested in certain topics than the general public.”

A spokesperson for Reddit declined to comment for this story.

Redditors policing Reddit

Reddit management has taken steps over the years to remove accounts linked to alleged state propaganda efforts and shut down subreddits that become havens for hate speech. In its most recent transparency report, released in late February, Reddit reported 1,150 subreddits were shut down in 2019 for harassment. The site also recently took action against r/The_Donald, a pro-Trump subreddit with 791,000 users, removing some of the group’s moderators and requiring that future ones be vetted by Reddit, The Daily Beast reported. The subreddit had already been put on what Reddit calls “quarantine,” a designation for subreddits with potentially offensive content; quarantined subreddits are available only to logged-in users and don’t show up in site searches.

The company has also sought to squash efforts to suppress or promote particular posts. That’s something that can be done by bot-controlled accounts or coordinated manual voting, since the site allows people to vote content up or down and favors posts with better voting results. In the report, Reddit claimed 6,806 subreddits were shut down in 2019 for “vote or subscription cheating.” But much of the work of deciding what to do about false and even deliberately misleading posts falls to the volunteer moderators of individual subreddits, who are given considerable latitude to decide what content is acceptable on their forums. Even when Reddit has taken action against manipulative behavior on the platform, it’s often been thanks at least in part to reports from everyday users.

In 2018, Reddit officials gave special praise to the site’s users for helping discover a suspected Iran-linked effort to manipulate sentiment toward Middle Eastern politics on popular subreddits such as r/conspiracy and r/worldnews. “Through this investigation, the incredible vigilance of the Reddit community has been brought to light, helping us pinpoint some of the suspicious account behavior,” Reddit CTO Christopher Slowe wrote.

An NBC News article at the time highlighted how the Reddit user abrownn uncovered ties between accounts involved in the propaganda operation and the sites to which they linked. Speaking to Fast Company, he says that while that particular operation may have been thwarted, there are still organized groups on the site coordinating to push false narratives.

“It’s pretty much exactly the same, minus the Iranian stuff,” he says. “It’s mostly right-wing content these days.”

Today, abrownn is a moderator for r/worldnews and r/technology. He and other moderators use a variety of user-made programs that can detect when links to new websites suddenly start appearing in a subreddit, which can sometimes be a sign of a new propaganda or fake news site. The users who share such sites may not always be aware they’re fake, he says: One recent trend has been sites with fake web addresses that mimic well-known news outlets, such as the BBC, to trick readers into sharing their content. (Reddit says this practice is prohibited.) Abrownn says he uses a mix of his own homemade scripts and widely circulated Reddit moderation tools, including the Reddit Enhancement Suite, which can do things such as add color-coded tags to posts by particular users, and the Moderator Toolbox for Reddit, which has a built-in user history analysis feature. The tools abrownn uses can also spot suspicious patterns, such as an unusual number of newly created accounts promoting a particular post.

“I feel like I have a bit of a duty to do this because not a lot of people are very willing to sit there and look at a lot of this stuff without burning out too quickly that also know what to look for,” he says.

A sometimes heavy burden

Abrownn is far from the only user looking to squash deliberate disinformation on Reddit itself. -Ph03niX-, a longtime Reddit user and a moderator of the subreddits r/Digital_Manipulation and r/AgainstHateSubreddits, which look to spot new centers of hate speech on the site, says he began looking into the problem around the 2014 midterm elections. That’s when he noticed “something off” about discourse on the site.

“Something happened,” he says. “The site just got bombarded with politics.”

He began searching out people who surreptitiously maintained multiple accounts on the site to push their agendas. He often privately urged them to stop, and, if that didn’t work, he exposed their hidden networks of accounts through posts on the site. In other posts, he’s shared general information about online manipulation and lies, and he can speak off the cuff about decades of conspiracy-minded thinking dating back to the anti-Communist John Birch Society of the 1960s, perhaps best remembered today for its opposition to water fluoridation. But, he says, he may leave Reddit after this year.

“I’ve been doing this so long,” he says. “I’m kind of jaded about it.”

It’s a sentiment that several of the site’s crusaders against disinformation expressed, questioning the effectiveness of their unpaid labor in a world where fringe forums such as r/conspiracy can command orders of magnitude more subscribers than those that seek to debunk them.

“Other than my own selfish needs, I don’t know if anyone else is really interacting,” quips Marc1309, a doctoral student at a university in Canada who has done academic research about Reddit and is a regular contributor to anti-disinformation subreddits including r/Digital_Manipulation, r/Disinfo, and r/Foreign_Interference. He regularly shares information about propaganda and misinformation, from flat Earth theories to climate change denial, trying to focus on verified sources such as well-regarded researchers and think tanks. But, he says, while he thinks the forums are useful for curating information for himself and other researchers, it’s unclear how much they can change others’ minds.

“You’re preaching to the choir a bit,” he says.

Even if misinformation-fighting subreddits have so far struggled to reach many of the site’s users, let alone the internet at large, there’s almost certainly value in making information available for people who are actively reevaluating their own political beliefs or trying to understand why loved ones believe what they do.

“So far, I can’t really say if the sub has had any great effect on the [vast] amount of reactionary content creators, or more importantly the people who follow them,” writes KoolKidSpec, the creator of r/SJWRabbitHole, in an email. “But I can say at least that some people have gotten some sort of support from posting there.”

KoolKidSpec, who lives in New England, says he created the subreddit after seeing YouTube personalities discuss their own experiences moving away from far-right beliefs.

“The subreddits are still pretty small, so I try to reply to every story posted,” he writes. “And I have to say that it seems that just being able to post these stories and talk about themselves, in some cases, really does help people.”

This story has been updated with more information about Reddit’s policies.

This story is part of our “Hacking Democracy” series, which examines the ways in which technology is eroding our elections and democratic institutions, and what’s been done to fix them.

Recognize your brand's excellence by applying to this year's Brands That Matters Awards before the early-rate deadline, May 3.