As the federal government plods along on developing privacy laws, some cities are taking matters into their own hands—with facial recognition technology at the top of the list. Now, Portland, Oregon, has plans to ban the use of facial recognition for both the government and private businesses in the city, a move that could make Portland’s ban the most restrictive in the United States.

The proposed ban comes after cities including San Francisco, Oakland, and Berkeley in California, and Somerville in Massachusetts, have already banned the use of facial recognition by their city government agencies, including police departments. But Portland’s ban goes a step further by expanding to private businesses—if it makes it into law and takes effect in spring 2020, as planned.

It could be a preview of what to expect across the country. “I think we’re going to start to see more and more [private sector bans],” says ACLU of Northern California attorney Matt Cagle, who helped draft the San Francisco legislation that later served as the model for Oakland and Berkeley. “People are really concerned about facial recognition use and the tracking of their innate features by governments and private corporations.”

Along with being used in policing, facial recognition is becoming a fixture in retail stores, stadiums, and even schools. Software providers like Microsoft, Amazon, and IBM offer algorithms that any developer can use to analyze faces, and other big companies like Facebook have already implemented facial recognition on social media.

“The technology has high rates of false positives for women and people of color, which means they will be more likely to be misidentified and targeted for things they didn’t do, or for being in places they weren’t actually in,” Portland city commissioner Jo Ann Hardesty says in an email.

Hardesty, who’s spearheading the ban effort, also links private and government use of face recognition, such as the way hundreds of police departments are using video captured by Amazon’s Ring smart doorbell cameras. “I am very worried about the companies collecting data and storing it and then making sweetheart deals with law enforcement so that they get access to that data,” she told a September Portland city council work session about the proposed ban.

Cities have enacted bans to prevent this by prohibiting city agencies not only from collecting facial-recognition data on their own, but also from acquiring third-party data. “If you’re a city department, you can’t yourself use facial recognition, but also you can’t go outsourcing that to a private party,” Cagle says.

The dangers of facial recognition in private

Portland appears to be the first city putting equal weight on the dangers of purely private-sector face detection and analysis. “This has major consequences for how people can live their lives when private companies try to use this software for things like security or employment screening,” says Hardesty.

It’s not just theoretical. For instance, retailers have been testing and deploying facial recognition tech to check customers in their stores against images of known shoplifters. Facial recognition software provider FaceFirst told BuzzFeed News that thousands of stores are using its technology for this purpose. Live entertainment companies AEG Presents and Live Nation considered using face recognition to identify ticket holders and give them access to services or parts of venues. Both companies halted their plans in response to protests by musicians, the Los Angeles Times reports.

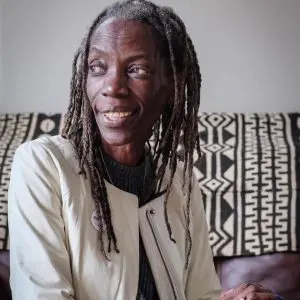

Jo Ann HardestyThis has major consequences for how people can live their lives when private companies try to use this software.”

Face recognition, like other forms of AI, is trained on limited data, and its accuracy drops sharply for anyone who isn’t a light-skinned man. Results are less accurate for light-skinned women, poor for dark-skinned men, and abysmal for dark-skinned women.

In 2018, MIT researcher Joy Buolamwini tested how well facial analysis services from IBM, Microsoft, and China-based Face++ classified the gender of people in images of individuals from sub-Saharan Africa and northern Europe. Error rates for light-skinned men ranged from 0% to 0.8%. For dark-skinned women, error rates topped 20% for Microsoft and 34% for IBM and Face++.

(Buolamwini’s popular YouTube video “AI, Ain’t I A Woman?” shows commercial software labeling Oprah Winfrey, Michelle Obama, and Serena Williams as men.)

After Buolamwini shared her results with the big tech companies, they released updated models that performed much better. In a follow-up study, error rates for dark-skinned women dropped to about 17% for IBM’s algorithm and just 1.5% for Microsoft’s. But those rates still lagged well behind the accuracy for light-skinned men.

Hardesty is unswayed by arguments that the technology can be improved enough to close these gaps. “Rather than going back to fix something we already know is flawed to begin with, we should stop it from taking root in the first place,” she says.

And even a dramatic improvement in accuracy could be just as unsettling, because it would let governments and corporations reliably track us wherever we go.

The problem of consent

Beyond accuracy, Hardesty is concerned about consent. “These private companies never asked for our permission to scan, collect, store, or sell our faces, but that’s what would happen if this technology takes place unchecked,” she says. “For example, no one wants their child’s face scanned and sold to the highest bidder without their consent.”

“The capturing and encoding of our biometric data is going to probably be the new frontier in creating value for companies in terms of AI,” says Mutale Nkonde, a fellow at Harvard University’s Berkman Klein Center for Internet & Society. Look no further than Facebook, which is caught up in a lawsuit that could set the stage for how face-rec bans are enforced across the country.

Residents of Illinois are suing the social network, accusing it of scanning their images without consent. They cite Illinois’s Biometric Information Privacy Act, which requires that companies provide detailed written disclosure and receive written consent from each individual before collecting any biometric data, including images of their faces. (This would make scanning faces in public essentially impossible.) The U.S. District Court for the Northern District of California, where Facebook is headquartered, ruled that plaintiffs have the right to pursue the case. Whatever the outcome, the case will likely make its way up to the massive Ninth Circuit Court of Appeals, which includes Oregon.

Given all the technical, legal, and ethical uncertainty, Joy Buolamwini recommends at least taking a pause on facial recognition. “We know that there are technical limitations. We also know that even if those disparities were closed, the capacity for abuse is too great because there are no safeguards around this technology in the current environment,” she tells me. “I do believe the moratorium route is the correct way to go, given what we know.”