In 2009, a group of scientists at Stanford and Princeton wanted to teach computers how to recognize any object in the world. To do that, they needed a lot of images—images of cats and dogs and apples and forks and spoons, but also images of people. These photos, each tagged with a category, could then be shown to a machine learning algorithm that would slowly learn over time how to detect the pointed prongs of a fork from the soft curves of a spoon.

The researchers built a data set of more than 14 million images, all organized into more than 20,000 categories, with an average of 1,000 images per category. It has become the most-cited object recognition data set in the world, with more than 12,000 citations in research papers.

But ImageNet, as the data set is known, doesn’t just include objects: It also has nearly 3,000 categories dedicated to people, including some that are described with relatively innocuous terms, like “cheerleader” or “boy scout.”

But many assigned descriptions, which were crowdsourced using human workers via Amazon’s platform Mechanical Turk, are deeply disturbing. “Bad person,” “hypocrite,” “loser,” “drug addict,” “debtor,” and “wimp” are all categories, and within each category there are images of people, scraped from Flickr and other social media sites and used without their consent. More insidiously, ImageNet also has categories like “workers” and “leaders,” which are socio-historical categories that look incredibly different across different cultures—if they exist at all. There’s no way to know who actually labeled each image, let alone assess what each person’s individual biases are that may have informed the labels.

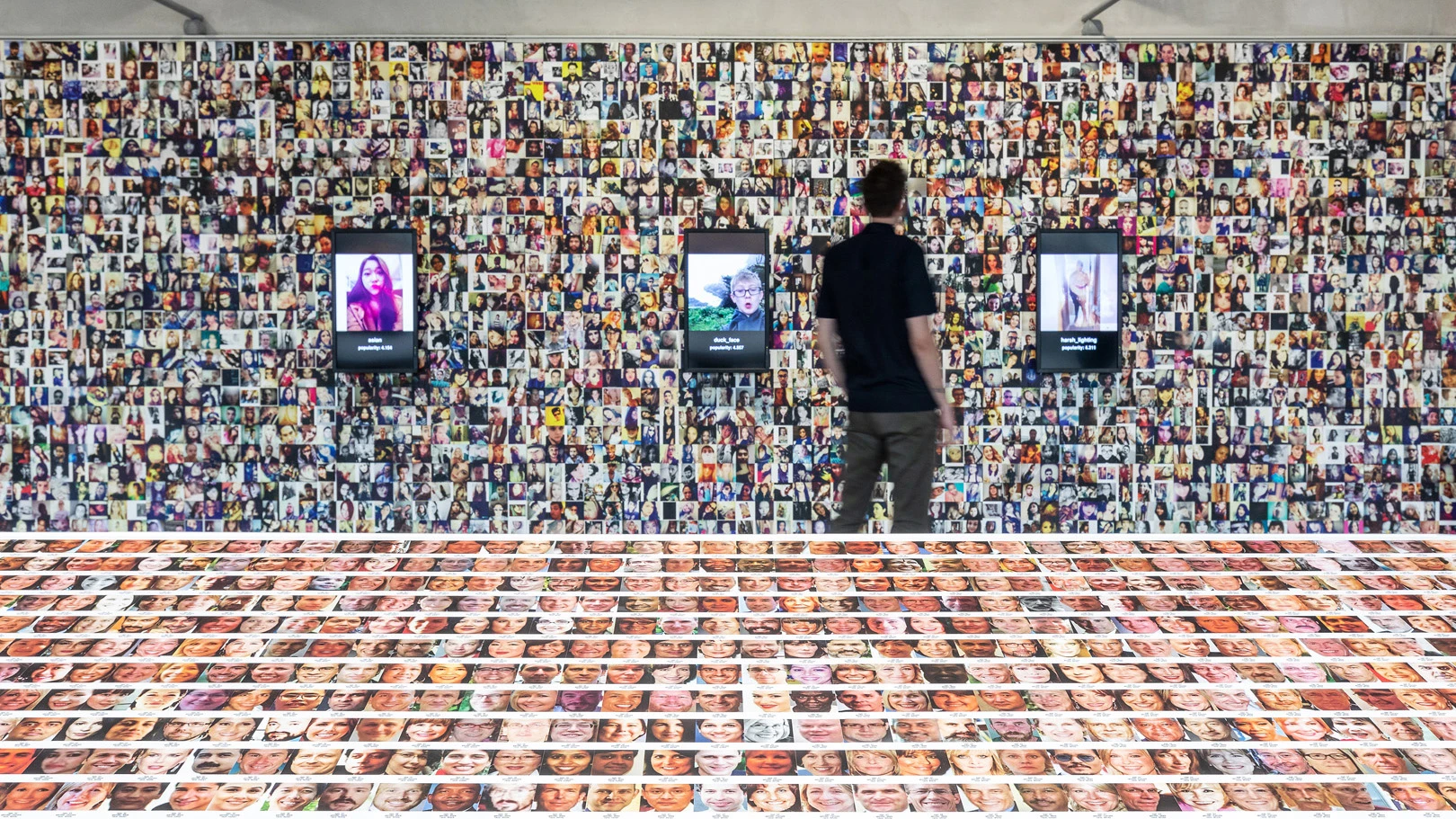

ImageNet and the history of other kinds of training data sets, which date back to the 1960s, are the subject of a new exhibition at the Prada Foundation in Milan. The exhibition, Training Humans, was curated by Trevor Paglen and professor and researcher Kate Crawford. It exposes the photographs that make up data sets like ImageNet, showing visitors a glimpse into the images that power computer vision and facial recognition systems.

ImageNet in particular has its own room in the gallery, where photographs of people are pasted onto cards where their category labels are printed, and pinned to the wall—echoing scientific card catalogues of the past, like with insects pinned to cards with their scientific name.

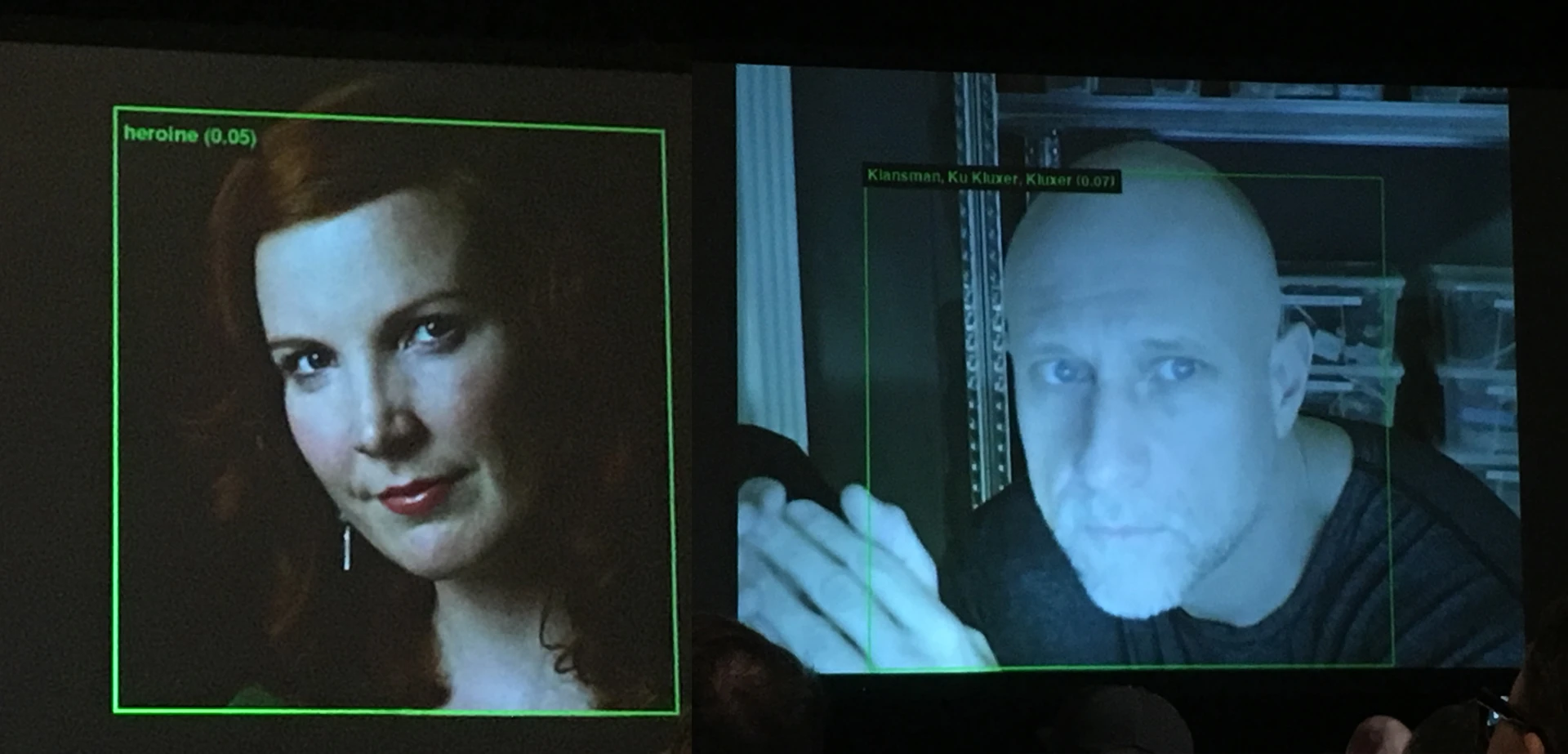

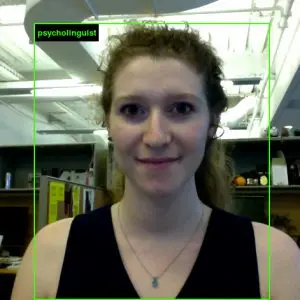

For people who can’t visit Milan, Crawford and Paglen have created an online tool called ImageNet Roulette, which is trained on the human classification categories of ImageNet. It lets you take a photo with a webcam, use a link, or upload any picture of a person and then it provides you with ImageNet’s categorization of the subject.

Paglen and Crawford demoed the tool at the South by Southwest conference in Austin earlier this year with their own photos and that of Leif Ryge, the developer in Paglen’s studio who created it. Paglen’s image was categorized as “Klansman.” Crawford’s headshot garnered the classification “heroine.” Other images of Ryge came back as “creep,” “homeless,” and “anarchist.”

“I think this business of classifying humans is in general something that’s worthy of a lot more scrutiny than it’s been given,” Paglen says.

Paglen points to the dark history of putting people into categories in previous centuries, like during apartheid in South Africa: A volume called the Book of Life categorized people based on their racial background, and was used to determine where people could live, what jobs they could hold, and where their children could go to school. He also likens the rise of machine learning and people’s perception that its algorithms are objective to the history of photography in the late 19th and early 20th centuries, where many thought that these newfangled images were inherently neutral. Paired with the rise of social Darwinism, which popularized the idea that some cultures and peoples are evolutionarily superior to others, photography helped create new pseudoscientific fields. Phrenology, which lacks any basis in fact and is deeply racist, tried to measure physical differences between people of different races. Scientists also tried to tie people’s appearance to their actions: “When photography was invented, it was understood to be objective and neutral and encouraged what we now think of as a bunch of pseudoscientists measuring people’s faces to figure out if they’re criminals or not,” Paglen says.

Just as we know today that photography is subjective and that phrenology is ridiculous, Paglen and Crawford hope to expose the truth about today’s AI systems, undermining the widespread belief that they are somehow neutral because they’re built using math.

“No matter how you construct a system that says this is what a man, what a woman, what a child, what a black person, what an Asian person looks like, you’ve come up with a type of taxonomic organization that is always going to be political,” says Crawford, who is also the cofounder and codirector of the AI Now Institute at NYU. “It always has subjectivity to it and a way of seeing built into it.”

The exhibition may be the first time that many people catch a glimpse of the images that underlie algorithmic systems. But some of the most important photos are missing. While Training Humans includes data sets that are open source or available for research use, it excludes images from some of the most powerful data sets of our time, like Facebook’s vast horde of images.

Crawford also wants to highlight that the source of these complex technological systems is rooted in the mundane images from people’s daily life.

“They’re not these fantastically abstract mathematical systems no one can understand,” Crawford says. “It’s the material of our everyday lives [that] has been ingested into these large systems for making AI better at facial recognition, at ’emotion detection.'”

But that doesn’t make them harmless. Facial recognition is highly controversial, and emotion recognition is starting to be debunked by scientists.

One of the data sets included in the exhibition is the Japanese Female Facial Expression data set from 1997, which has 213 images of six facial expressions that are assumed to correspond with inner emotional state—joy, surprise, sadness, disgust, anger, and fear—along with a neutral face. Crawford points to it as an example of how using six categories to articulate the wealth and depth of human emotion is simply insufficient and privileges a simplistic view of how humanity experiences the world. Another data set called FERET, which was funded by the CIA in the late 1990s, used images of researchers, lab assistants, and janitors who worked in the University of Texas at Austin lab to train algorithms to detect people’s faces.

[Photos: Marco Cappelletti/Fondazione Prada]While many machine learning data sets are deeply flawed, they’re being deployed anyway, both by academic researchers and by companies. But Crawford has some ideas for how data sets’ inherent biases could be catalogued better so that when future AI scientists use them, they’re at least aware of the problems.

In 2018, she collaborated with eight other researchers on a project that create labels for training sets that includes information on where the images come from, what the demographic split is (if the images are of people), who created the set, if the image subjects gave consent, any privacy concerns, and the original intent of the data set (among others). These “datasheets for data sets” have already gotten some adoption in industry, though it’s a very small step toward researchers understanding the implications of their AI’s data.

For Paglen, the question of what can be done to make machine learning training sets less simplistic and downright inaccurate is bigger. Because he believes technology can never be neutral, he thinks the designers of these systems should be questioning whether classifying humans is really part of the work they want to do.

“What kind of world do you want to live in?” he says. “That’s the first question.”

Images of the Japanese Female Facial Expression dataset have been removed from this story due to lack of consent from the image’s subject.

Recognize your brand’s excellence by applying to this year’s Brands That Matter Awards before the early-rate deadline, May 3.