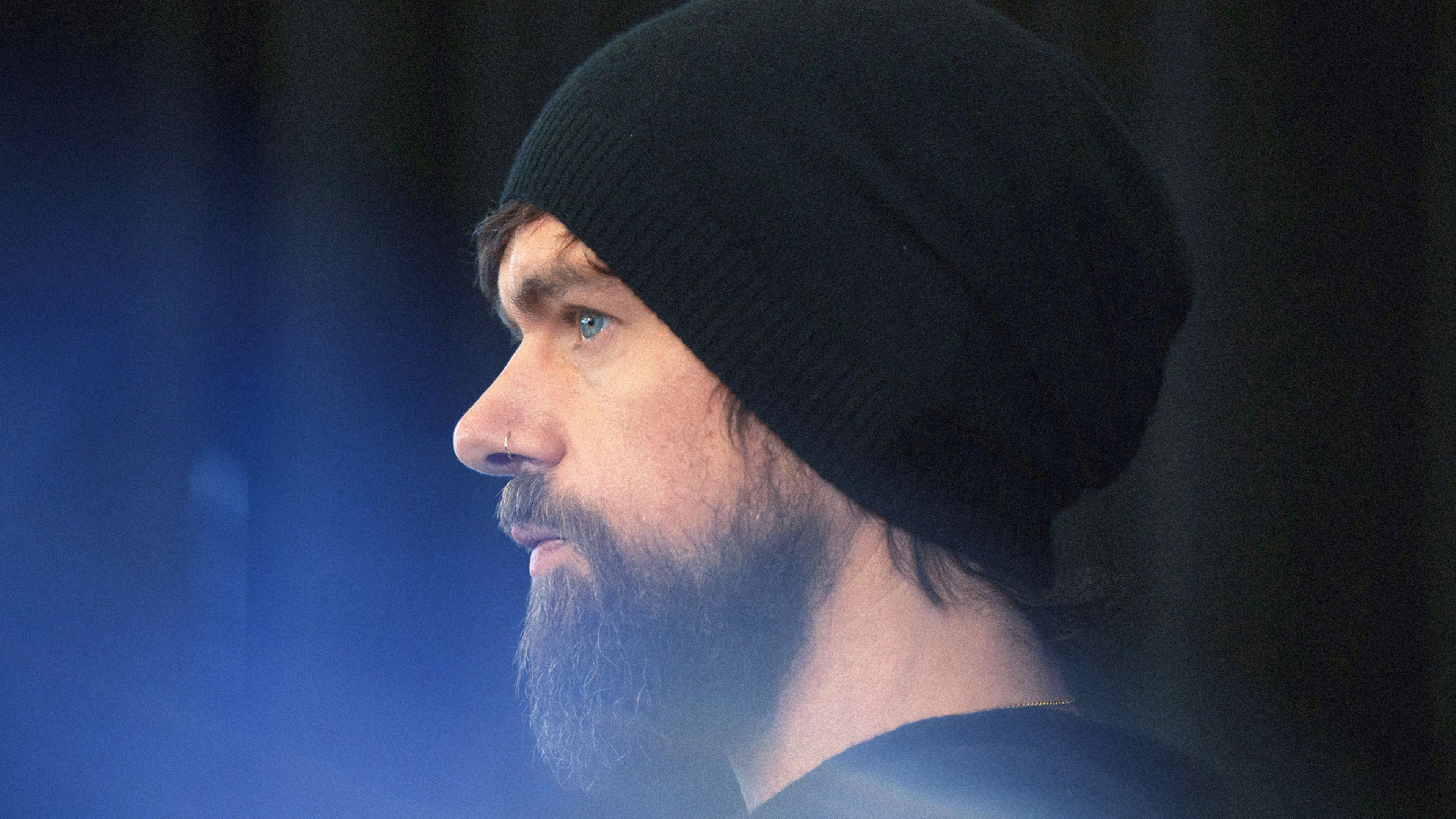

Jack Dorsey knows his platform is flawed. “It’s a pretty terrible situation when you’re coming to a service to learn something about the world, and you spend the majority of time reporting and receiving abuse,” the Twitter cofounder said on the stage at TED in Vancouver, during a conversation with the head of TED, Chris Anderson, and Whitney Pennington Rodgers, TED’s current affairs curator. Dorsey was referencing the fact that a woman is abused on Twitter every 30 seconds, and that the platform has been accused of influencing elections in the U.S. and, most recently, in Israel through the proliferation of bots, and of allowing toxic accounts linked with extremist groups to linger.

“What worries me most,” Dorsey added, is Twitter’s ability to address the widespread issues of abuse and misinformation on the platform in a systemic way. Throughout the conversation, both Anderson and Pennington Rodgers, as well as the audience through the hashtag #AskJackatTED, pressed Dorsey on what, exactly, Twitter was doing to attempt to rein in abuse on the platform.

Dorsey answered by saying that the company has doubled down on using machine learning to identify toxicity on the platform.

“Mid-last year, we tried to apply more deep learning to the problem so we can take the burden off the victim to report abuse,” he said. “Around 38% of abusive tweets are now identified by machine learning,” he added, citing a set of self-reported metrics the company disclosed today. “That was from 0% just a year ago, which meant that everyone who received abuse had to report it.”

Twitter is also beginning to work with Cortico, a nonprofit that collaborates with the MIT Media Lab to analyze public conversation, to measure indicators of toxicity on the platform. While Twitter is leaning more heavily on machine learning to do this work, Dorsey said a team of humans at the company is still tasked with reviewing the toxic tweets that these algorithms surface and deciding how to manage the accounts producing them.

Dorsey made it clear that this investment in algorithms to identify abuse is crucial. But to the audience at TED, and to many users on the platform who chimed in through the hashtag, his promises fell short. Multiple versions of the question “Why are Nazis still on your platform?” poured in, and Dorsey leaned on an answer that many found unsatisfying. “We have policies around violent extremist groups, and the majority of our work in our terms of service works on conduct,” he said.

However, Dorsey continued to emphasize that proactively identifying abusive behavior through algorithms is the way forward. “I want to build a platform that is not dependent on me,” he said.


“It doesn’t facilitate the healthy conversations”

Going forward, Dorsey said he wants to change the way Twitter rewards user engagement, even if that means sacrificing time spent on the site. “More relevance means less time on the service, and that’s perfectly fine,” he said. Rather than focusing on a network of followed users, he suggested that Twitter works better as “an interest-based network.”

“If I were to start service again, I wouldn’t emphasize follow count, I wouldn’t emphasize the like count, or even have a like button in the first place,” he said. “It doesn’t facilitate the healthy conversations.”

At #TED2019 where CEO @Jack took the stage this morn to take on tough questions about growing toxic culture of @Twitter. Kudos to him for showing up – hope we see words & aspirations turn into action. pic.twitter.com/NQQJKzdmaC

— Jean Case (@jeancase) April 16, 2019

But for now, people still want Dorsey to hold himself and the platform to a higher standard of accountability. Anderson may have summed it up best: “We are on this great voyage with you on a ship, and there are people on board in steerage who are expressing discomfort and you, unlike other captains are saying, ‘well, tell me, I want to hear,’ and they’re saying ‘we’re worried about the iceberg ahead,’” he said. “And you say, ‘our ship hasn’t been built for steering as well as it might,’ and you’re showing this extraordinary calm, but we’re all saying ‘Jack, turn the fucking wheel!’”
