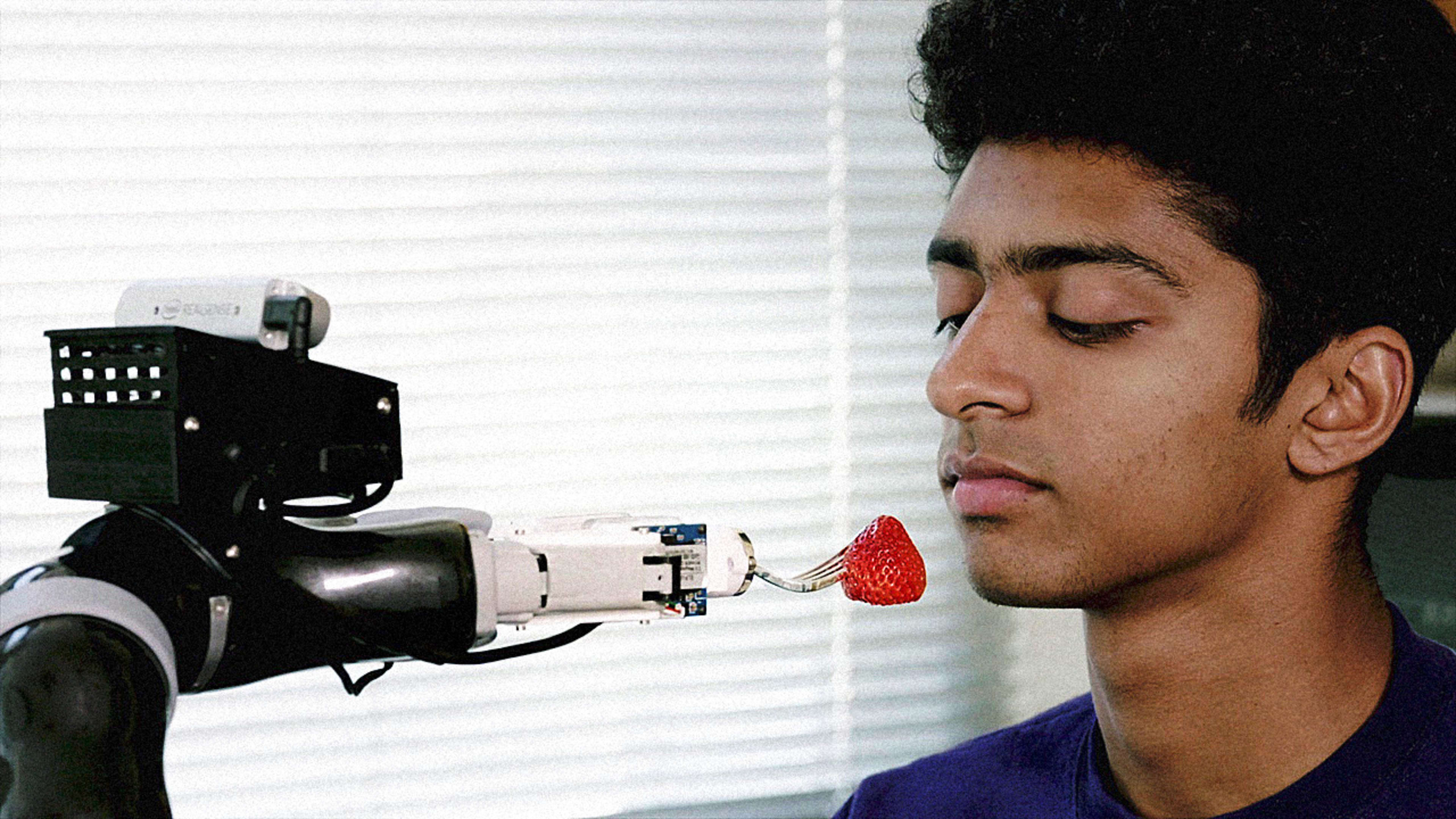

A million people around the U.S. cannot feed themselves. Whether they’re living with a disability or age-related challenges, they require a caregiver to spoon food into their mouths for meals and snacks. For the past year, researchers at the University of Washington have been developing a robotic arm that could enable anyone capable of opening their mouth to feed themselves. The robot can be hooked onto a wheelchair, where it holds a fork, pokes at bites of food, and delivers those bites right into someone’s mouth. It’s a personal mealtime companion that could one day make caregiver-assisted eating unnecessary.

But the mealtime choreography that most of us take for granted is harder to automate than you might think. To master the use of a fork, researchers needed to deconstruct the intricate UX of eating.

[Image: courtesy University of Washington]

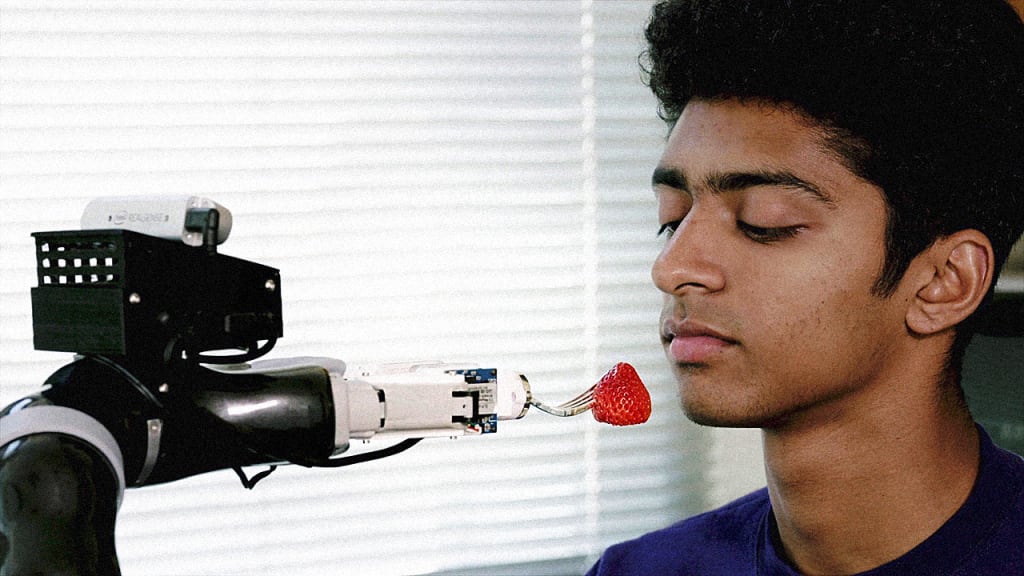

As a result, the feeding robot is actually a product of several studies. First and foremost, the robot had to learn how to properly poke foods. Perhaps that sounds simple, but imagine the difference in how it feels to pierce the surface of a crunchy carrot, a smooth but squishy grape, or a soft banana. “People change how they skewer food based on food shape, hardness, ease of feeding, etc.,” says UW computer science doctoral student Gilwoo Lee, who worked on the project. So humans were brought into the lab and handed a sensor-laden fork, which they used to pick up a dozen foods and lift them to feed a mannequin. Researchers recorded the data to better understand the micromovements at play.

[Image: courtesy University of Washington]

One example? If you pierce a banana from the top, you’ll get through the flesh fine. But the slippery banana will slide right down off the fork when you attempt to lift it off the plate. “This motivated us to come up with an angled skewering motion, which significantly increased the success rate for soft items,” says Lee.

The next challenge was not only lifting that food to the right height but also angling it so it stayed on the fork until it was delivered, edibly, into someone’s mouth. For this part of the research, the lab had able-bodied people take bites from the robot. They learned that some items, like a carrot, needed to be skewered not just at the right angle but in the right place. You can’t fit a carrot stick in your mouth if it has been skewered in the middle; slender items like these need to be pierced at one end.

[Image: courtesy University of Washington]

With all of this eating data in its software, the robot uses object recognition to identify 12 different foods (apple, banana, bell pepper, broccoli, cantaloupe, carrot, cauliflower, cherry tomato, grapes, honeydew melon, kiwi, and strawberry) and skewers each with its own strategy. To decide when to serve the next bite, the robot watches for an open mouth and delivers the food there.
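To make the banana and carrot lessons concrete, here is a minimal Python sketch of how per-food skewering rules and the open-mouth trigger might be expressed. It is not the UW team’s code: the `DetectedFood` class, the `is_soft` and `is_slender` flags, the strategy names, the `vertical` fallback, and the `ready_for_bite` helper are all hypothetical, and the sketch assumes an upstream recognizer has already labeled each item.

```python
from dataclasses import dataclass


@dataclass
class DetectedFood:
    """Hypothetical output of an object-recognition step (not the UW system)."""
    name: str
    is_soft: bool      # assumed label, e.g. True for a banana
    is_slender: bool   # assumed label, e.g. True for a carrot stick


def choose_skewer(food: DetectedFood) -> str:
    """Return an illustrative skewering strategy name for a detected item."""
    if food.is_soft:
        # A straight-down stab lets soft, slippery items slide off when lifted,
        # so soft items get an angled approach (checked first by assumption).
        return "angled"
    if food.is_slender:
        # Piercing near one end lets a long item enter the mouth tip-first.
        return "endpoint"
    # Fallback for items the two rules don't cover (hypothetical default).
    return "vertical"


def ready_for_bite(mouth_open: bool, food_on_fork: bool) -> bool:
    """Deliver only when a bite is loaded and the diner's mouth is open."""
    return mouth_open and food_on_fork


if __name__ == "__main__":
    # Illustrative labels only; real softness/shape would come from perception.
    plate = [
        DetectedFood("banana", is_soft=True, is_slender=False),
        DetectedFood("carrot", is_soft=False, is_slender=True),
        DetectedFood("apple", is_soft=False, is_slender=False),
    ]
    for item in plate:
        print(item.name, choose_skewer(item))
```

Because `ready_for_bite` reacts to any open mouth, a rule this simple cannot tell a bite request from a greeting.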

It’s an imperfect solution, the research team admits, because it keeps the diner from talking to anyone at the table while eating: the robot would interpret a casual hello as a request for a bite. An ideal feeding robot would leave room for social interaction with others at the table. Another limitation is that the robot doesn’t cut food into bites itself, though Lee points out that one-handed cutting boards, already designed for stroke survivors, exist, and the robot will likely learn to use them in the future. The team is also training the robot to pick up more types of food and to learn new skills, such as twirling pasta, and is developing an algorithm that would let it make a best guess when it encounters an unfamiliar food.

While the team has no immediate plans for commercialization, it should be heartening that the robot is built entirely from off-the-shelf components. The core arm is a Jaco assistive robot arm made by Kinova, while the camera and depth sensor are made by Intel. That means the hardware needed to let anyone feed themselves already exists; now we just need to translate all of the human UX intricacies of dining into code.
