Vint Cerf, who co-designed the early protocols and architecture for our modern internet, uses a parable to explain why courageous leadership is vitally important in the wake of emerging technologies like artificial intelligence.

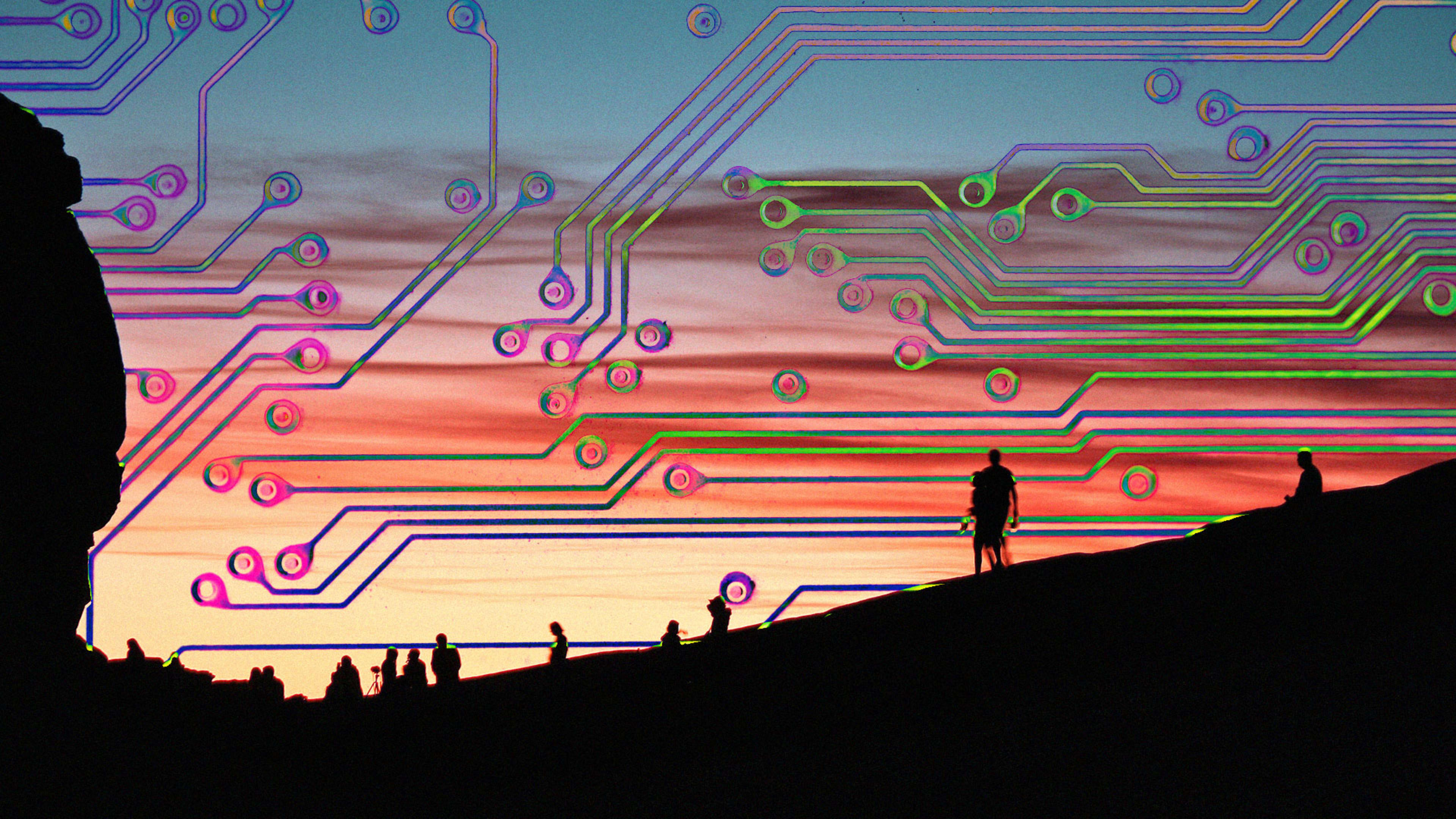

Imagine that you are living in a tiny community at the base of a valley that’s surrounded by mountains. At the top of a distant mountain is a giant boulder. It’s been there for a long time and has never moved, so as far as your community is concerned, it just blends into the rest of the landscape. Then one day, you notice that the giant boulder looks unstable—that it’s in position to roll down the mountain, gaining speed and power as it moves, and it will destroy your community and everyone in it. In fact, you realize that perhaps you’ve been blind to its motion your entire life. That giant boulder has always been moving, little by little, but you’ve never had your eyes fully open to the subtle, minute changes happening daily: a tiny shift in the shadow it casts, the visual distance between it and the next mountain over, the nearly imperceptible sound it makes as the ground crunches beneath it. You realize that as just one person, you can’t run up the mountain and stop the giant boulder on your own. You’re too small, and the boulder is too large.

But then you realize that if you can find a pebble and put it in the right spot, it will slow the boulder’s momentum and divert it just a bit. Just one pebble won’t stop the boulder from destroying the village, so you ask your entire community to join you. Pebbles in hand, every single person ascends the mountain and is prepared for the boulder—there is collaboration, and communication, and a plan to deal with the boulder as it makes its way down. People and their pebbles—not a bigger boulder—make all the difference.

Safe, beneficial technology isn’t the result of hope and happenstance. It is the product of courageous leadership and of dedicated, ongoing collaborations. But at the moment, the AI community is competitive and often working at cross-purposes.

The future of AI — and by extension, the future of humanity — is already controlled by just nine big tech titans, who are developing the frameworks, chipsets, and networks, funding the majority of research, earning the lion’s share of patents, and in the process mining our data in ways that aren’t transparent or observable to us. Six are in the US, and I call them the G-MAFIA: Google, Microsoft, Amazon, Facebook, IBM and Apple. Three are in China, and they are the BAT: Baidu, Alibaba and Tencent.

The Big Nine are under intense pressure — from Wall Street in the United States and Beijing in China — to fulfill shortsighted expectations, even at great cost to our futures. We must empower and embolden the Big Nine to shift the trajectory of artificial intelligence, because without a groundswell of support from us, they cannot and will not do it on their own.

What follows is a series of pebbles that can put humanity on a better path to the future.

The Big Nine’s leadership all promise that they are developing and promoting AI for the good of humanity. I believe that is their intent, but executing on that promise is incredibly difficult. To start, how should we define “good”? What does that word mean, exactly? This harkens back to the problems within AI’s tribes. We can’t just all agree to be “doing good” because that broad statement is far too ambiguous to guide AI’s tribes.

For example, AI’s tribes, inspired by Western moral philosopher Immanuel Kant, learn how to preprogram a system of rights and duties into certain AI systems. Killing a human is bad; keeping a human alive is good. The rigidity in that statement works if the AI is in a car and its only choices are to crash into a tree and injure the driver or crash into a crowd of people and kill them all. Rigid interpretations don’t solve for more complex, real-world circumstances where the choices would be more varied: crash into a tree and kill the driver; crash into a crowd and kill eight people; crash into the sidewalk and kill only a three-year-old boy. How can we possibly define what is the best version of “good” in these examples?

Again, frameworks can be useful to the Big Nine. They don’t require a mastery of philosophy. They just demand a slower, more conscientious approach. The Big Nine should take concrete steps on how they source, train, and use our data, how they hire staff, and how they communicate ethical behavior within the workplace.

At every step of the process, the Big Nine should analyze their actions and determine whether or not they’re causing future harm—and then they should be able to verify that their choices are correct. This begins with clear standards on bias and transparency.

Right now, there is no singular baseline or set of standards to evaluate bias—and there are no goals to overcome the bias that currently exists throughout AI. There is no mechanism to prioritize safety over speed, and given my own experiences in China and the sheer number of safety disasters there, I’m extremely worried. Bridges and buildings routinely collapse, roads and sidewalks buckle, and there have been too many instances of food contamination to list here. (That isn’t hyperbole. There have been more than 500,000 food health scandals involving everything from baby formula to rice in just the past few years.)

One of the primary causes of these problems? Chinese workplaces that incentivize cutting corners. It is absolutely chilling to imagine advanced AI systems built by teams that cut corners. Without enforceable global safety standards, the BAT have no protection from Beijing’s directives, however myopic they may be, while the G-MAFIA must answer to ill-advised market demands. There is no standard for transparency either. In the United States, the G-MAFIA, along with the American Civil Liberties Union, the New America Foundation, and the Berkman Klein Center at Harvard, are part of the Partnership on AI, which is meant to promote transparency in AI research. The partnership published a terrific set of recommendations to help guide AI research in a positive direction, but those tenets are not enforceable in any way—and they’re not observed within all of the business units of the G-MAFIA. They’re not observed within the BAT, either.

The Big Nine are using flawed corpora (training data sets) that are riddled with bias. This is public knowledge. The challenge is that improving the data and learning models is a big financial liability. For example, one corpus with serious problems is ImageNet, which I’ve made reference to several times in this book. ImageNet contains 14 million labeled images, and roughly half of that labeled data comes solely from the United States.

Here in the US, a “traditional” image of a bride is a woman wearing a white dress and a veil, though in reality that image doesn’t come close to representing most people on their wedding day. There are women who get married in pantsuits, women who get married on the beach wearing colorful summery dresses, and women who get married wearing kimono and saris. In fact, my own wedding dress was a light beige color. Yet ImageNet doesn’t recognize brides in anything beyond a white dress and veil.

We also know that medical data sets are problematic. Systems being trained to recognize cancer have predominantly been ingesting photos and scans of light skin. In the future, that could result in the misdiagnosis of people with black and brown skin. If the Big Nine know there are problems in the corpora and aren’t doing anything about them, they’re leading AI down the wrong path.
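A first step toward spotting this kind of skew is simply counting who is in the data. The sketch below is illustrative only: the `skin_tone` field, the toy records, and the 10 percent threshold are all assumptions made for the example, not properties of any real medical data set or of any Big Nine process.

```python
from collections import Counter

def representation_report(records, attribute, min_share=0.10):
    """Return each group's share of the records and the groups below min_share.

    `attribute` and `min_share` are hypothetical choices for this example.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    # Groups whose share falls under the threshold are flagged for review.
    flagged = sorted(group for group, share in shares.items() if share < min_share)
    return shares, flagged

# Toy metadata standing in for a labeled image corpus (made-up numbers).
records = (
    [{"skin_tone": "light"}] * 18
    + [{"skin_tone": "medium"}] * 1
    + [{"skin_tone": "dark"}] * 1
)

shares, flagged = representation_report(records, "skin_tone")
for group, share in sorted(shares.items()):
    print(f"{group}: {share:.0%}")
print("Under-represented:", flagged)
```

A count like this only surfaces groups that appear at least once, so a group missing from the data entirely would have to be checked against a separate list of expected groups.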

One way forward is to turn AI on itself and evaluate all of the training data currently in use. This has been done plenty of times already—though not for the purpose of cleaning up training data. As a side project, IBM’s India Research Lab analyzed entries shortlisted for the Man Booker Prize for literature between 1969 and 2017. It revealed “the pervasiveness of gender bias and stereotype in the books on different features like occupation, introductions, and actions associated to the characters in the book.” Male characters were more likely to have higher-level jobs as directors, professors, and doctors, while female characters were more likely to be described as “teacher” or “whore.”

If it’s possible to use natural language processing, graph algorithms, and other basic machine-learning techniques to ferret out biases in literary awards, those can also be used to find biases in popular training data sets. Once problems are discovered, they should be published and then fixed. This would serve a dual purpose. Training data can suffer from entropy, which might jeopardize an entire system. With regular attention, training data can be kept healthy.
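To make the idea concrete, here is a minimal sketch of one very basic audit: counting how often occupation words share a sentence with male or female terms. It is not IBM's method. The word lists, the sentence splitting, and the sample text are all simplifying assumptions, and a real audit would need far richer language processing.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately tiny word lists for illustration.
MALE = {"he", "him", "his", "man", "men"}
FEMALE = {"she", "her", "hers", "woman", "women"}
OCCUPATIONS = {"doctor", "professor", "director", "teacher", "nurse"}

def occupation_gender_counts(text):
    """Count sentences in which an occupation word co-occurs with gendered terms."""
    counts = defaultdict(lambda: {"male": 0, "female": 0})
    # Naive sentence split on ., ! and ? (an assumption; real text needs more care).
    for sentence in re.split(r"[.!?]+", text.lower()):
        tokens = set(re.findall(r"[a-z']+", sentence))
        for job in OCCUPATIONS & tokens:
            if tokens & MALE:
                counts[job]["male"] += 1
            if tokens & FEMALE:
                counts[job]["female"] += 1
    return {job: dict(tally) for job, tally in counts.items()}

sample = (
    "He is a doctor. She is a teacher. The professor said he was late. "
    "Her teacher praised her. The director gave his approval."
)

counts = occupation_gender_counts(sample)
for job in sorted(counts):
    print(job, counts[job])
```

Lopsided tallies from a check like this are a prompt for human review rather than proof of bias, since co-occurrence in a sentence does not establish who holds the job.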

A solution would be for the Big Nine—or the G-MAFIA, at the very least—to share the costs of creating new training sets. This is a big ask since creating new corpora takes considerable time, money, and human capital. Until we’ve successfully audited our AI systems and corpora and fixed extant issues within them, the Big Nine should insist on human annotators to label content and make the entire process transparent. Then, before those corpora are used, the data should be verified. It will be an arduous and tedious process but one that would serve the best interests of the entire field.

Yes, the Big Nine need our data. However, they should earn—rather than assume—our trust. Rather than changing the terms of service agreements using arcane, unintelligible language, or inviting us to play games, they ought to explain and disclose what they’re doing. When the Big Nine do research—either on their own or in partnership with universities and others in the AI ecosystem—they should commit to data disclosure and fully explain their motivations and expected outcomes. If they did, we might willingly participate and support their efforts. I’d be the first in line.

Understandably, data disclosure is a harder ask in China, but it’s in the best interests of citizens. The BAT should not agree to build products for the purpose of controlling and limiting the freedoms of China’s citizens and those of its partners. BAT executives must demonstrate courageous leadership. They must be willing and able to disagree with Beijing: to deny requests for surveillance, safeguard Chinese citizens’ data, and ensure that at least in the digital realm, everyone is being treated fairly and equally.

The Big Nine should pursue a sober research agenda. The goal is simple and straightforward: build technology that advances humanity without putting us at risk. One possible way to achieve this is through something called “differential technological progress,” which is often debated among AI’s tribes. It would prioritize risk-reducing AI progress over risk-increasing progress. It’s a good idea but hard to implement. For example, generative adversarial networks, which were mentioned in the scenarios, can be very risky if harnessed and used by hackers. But they’re also a path to big achievements in research. Rather than assuming that no one will repurpose AI for evil—or assuming that we can simply deal with problems as they arise—the Big Nine should develop a process to evaluate whether new basic or applied research will yield an AI whose benefits greatly outweigh any risks. To that end, any financial investment accepted or made by the Big Nine should include funding for beneficial use and risk mapping. For example, if Google pursues generative adversarial network research, it should spend a reasonable amount of time, staff resources, and money investigating, mapping, and testing the negative consequences.

A requirement like this would also serve to curb expectations of fast profits. Intentionally slowing the development cycle of AI is not a popular recommendation, but it’s a vital one. It’s safer for us to think through and plan for risk in advance rather than simply reacting after something goes wrong.

In the United States, the G-MAFIA can commit to recalibrating its own hiring processes, which at present prioritize a prospective hire’s skills and whether they will fit into company culture. What this process unintentionally overlooks is someone’s personal understanding of ethics. Hilary Mason, a highly respected data scientist and the founder of Fast Forward Labs, explained a simple process for ethics screening during interviews. She recommends asking pointed questions and listening intently to a candidate’s answers. Questions like: “You’re working on a model for consumer access to a financial service. Race is a significant feature in your model, but you can’t use race. What do you do?” and “You’re asked to use network traffic data to offer loans to small businesses. It turns out that the available data doesn’t rigorously inform credit risk. What do you do?”

Depending on the answers, candidates should be hired, hired conditionally and required to complete unconscious bias training before they begin work, or disqualified. The Big Nine can build a culture that supports ethics in AI by hiring scholars, trained ethicists, and risk analysts. Ideally, these hires would be embedded throughout the entire organization: on consumer hardware, software, and product teams; on the sales and service teams; co-leading technical programs; building networks and supply chains; in the design and strategy groups; in HR and legal; and on the marketing and communications teams.

The Big Nine should develop a process to evaluate the ethical implications of research, workflows, projects, partnerships, and products, and that process should be woven into most of the job functions within the companies. As a gesture of trust, the Big Nine should publish that process so that we can all gain a better understanding of how decisions are made with regard to our data. Either collaboratively or individually, the Big Nine should develop a code of conduct specifically for their AI workers. It should reflect basic human rights and it should also reflect each company’s unique culture and corporate values. And if anyone violates that code, a clear and protective whistleblowing channel should be open to staff members.

Realistically, all of these measures will temporarily and negatively impact short-term revenue for the Big Nine. Investors need to allow them some breathing room.

AI is a big space, and we are only at the beginning of our ascent up the mountain. It’s time to grab our pebbles and start on the right path.

Excerpted from: The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity by Amy Webb. Copyright © by Amy Webb. Published by arrangement with PublicAffairs, an imprint of Hachette Book Group.
