Thanks to social media, it’s easy to come across reporting from unfamiliar news sources around the world. But it can often be difficult to tell which sites are presenting the straight truth, which have a political bias, and which are spreading outright lies.

A new research project from the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Lab and the Qatar Computing Research Institute aims to use machine learning to detect which sites focus on facts and which are more likely to churn out misinformation.

“If a website has published fake news before, there’s a good chance they’ll do it again,” said Ramy Baly, a postdoctoral associate at MIT CSAIL and lead author on a paper about the technology, in a statement.

“The most useful information source for judging both factuality and bias turns out to be the actual articles,” says Preslav Nakov, a senior scientist at QCRI, in an interview.

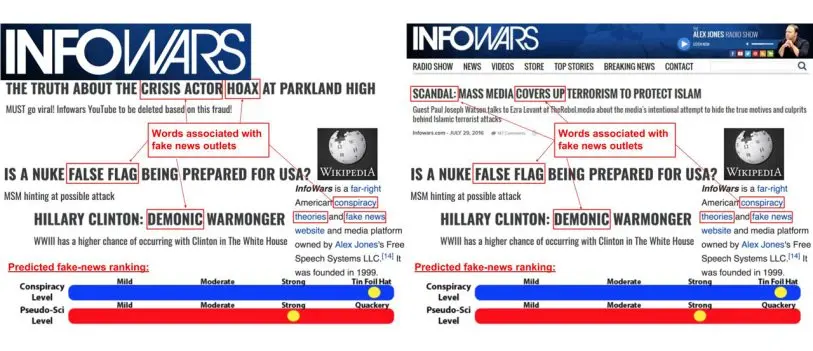

Perhaps unsurprisingly, less factual sites were more likely than more factual ones to use hyperbolic and emotional language. Additionally, Nakov says, news sources with longer descriptions on Wikipedia tend to be more reliable. The online encyclopedia can also provide textual cues that a news source is suspect, such as references to bias or a tendency to spread conspiracy theories, he says.

“If you, for example, open the Wikipedia page of Breitbart, you read things like ‘misogynistic,’ ‘xenophobic,’ ‘racist,’” Nakov says.

Separately, sites with more complex domain names and URL structures were generally less trustworthy than sites with simpler ones. Some of the more complex URLs belonged to sites that essentially impersonate familiar outlets, using longer addresses that mimic well-known, simpler domains.

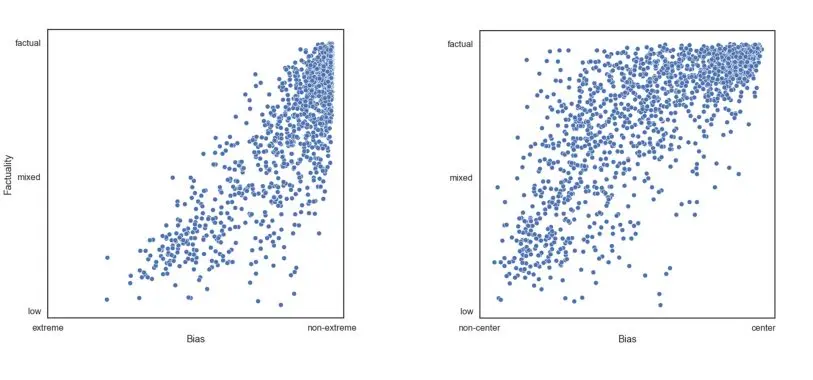

When presented with a news outlet it had not seen before, the system was roughly 65% accurate at determining whether the outlet had a high, medium, or low level of factuality and roughly 70% accurate at determining whether it leaned left, right, or center. The researchers plan to present the paper in a few weeks at the Empirical Methods in Natural Language Processing conference in Brussels.

The research is far from the only ongoing project aimed at combating misinformation. The Defense Advanced Research Projects Agency has been funding research into detecting forged images and videos, and other researchers have studied how to teach people to spot suspect news.

In the future, the MIT and QCRI researchers plan to test the English-trained system on other languages and to see how it fares with biases other than left and right, such as distinguishing religious from secular-leaning news in the Islamic world. The group also plans an app that would show users news stories from a variety of political perspectives.
