Facebook has been rocked by reports of a massive data scrape carried out by Cambridge Analytica and one of its then-contractors, a Cambridge University academic named Aleksandr Kogan. Kogan claims that the data he collected from thousands of Facebook users and their friends—amounting to data on over 50 million users—abided by Facebook’s terms; Cambridge Analytica promises it deleted the data; and Facebook is auditing everyone it can for signs of the data.

But while Facebook provided the original data, it wasn’t the only vehicle for Kogan’s app. Kogan acquired what he wanted from individual people (more than 240,000 of them over a six-month period), recruiting and paying them for their quiz answers and data through Amazon’s Mechanical Turk micro-work platform and Qualtrics, a survey platform.

The two platforms are often used by companies to conduct experiments and surveys and by academic researchers to, for instance, recruit psychology subjects. Research ethics require that scientists only gather people’s data for specified uses with their explicit consent, and Amazon’s policies prohibit tasks from harvesting people’s Facebook data.

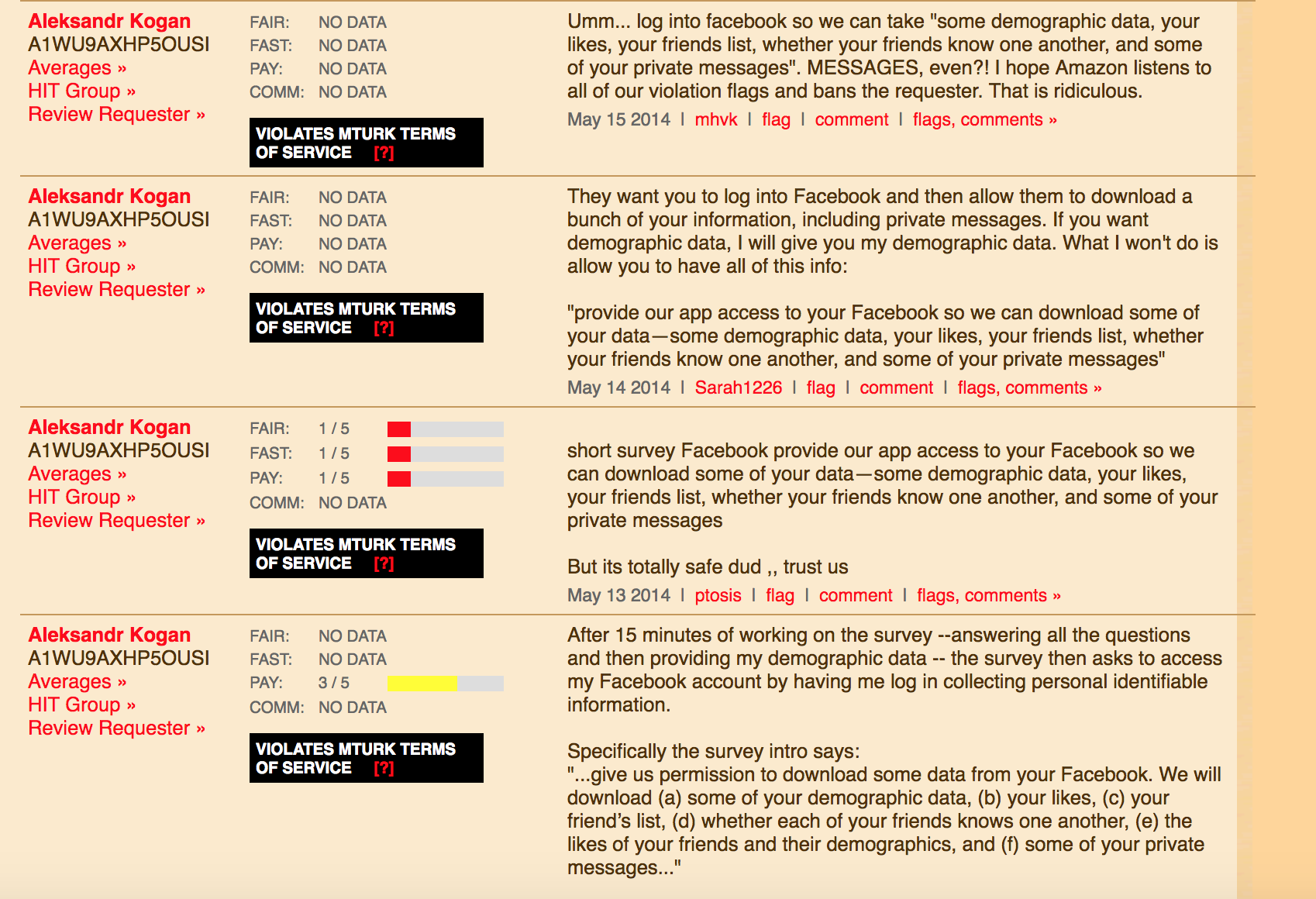

At the time, multiple survey-takers took to a message board, Turkopticon, to report the quiz for violating Amazon’s Terms of Service. The company eventually booted Kogan from its platform in 2015, over a year later, after learning from a Guardian article that he had passed the data to Cambridge Analytica.

But Kogan’s new company, Philometrics, continues to post questionnaires to Mechanical Turk, according to Turkopticon. Kogan’s collaborator on the Cambridge Analytica project, Joe Chancellor, also still uses the platform; according to one review website, he posted a task on Mechanical Turk in February.

Kogan, who remains a research associate in Cambridge University’s psychology department, and Chancellor, who now works at Facebook Research, did not respond to requests for comment.

When pressed for more details, an Amazon Web Services spokesperson responded, “Our terms of service clearly prohibit misuse. We suspended the Mechanical Turk requester in 2015 for violating our terms of service.” (This is the same statement Amazon gave to reporters Mattathias Schwartz and Cora Currier at The Intercept in March of last year.)

Amazon wouldn’t provide more details, including how many people Kogan recruited on MTurk, or whether it attempted to notify those users after it learned of Cambridge Analytica’s role in 2015. “Sorry Cale, nothing more to add at this time,” the spokesperson said.

Qualtrics has not disclosed if it took any steps to review Kogan’s work. A spokesperson for the company said, “Qualtrics takes data privacy and security very seriously. Customers, not Qualtrics, own their data. All data are safeguarded using industry best security practices that prevent unlawful disclosure.”

How The Data Harvest Worked

In early 2014, Kogan, a lecturer at Cambridge University, cofounded a company, Global Science Research, for the purpose of working with Cambridge Analytica on a project to collect data on as many Americans as possible.

The personality quizzes–and the associated data-harvesting Facebook app–first appeared that spring on Mechanical Turk. MTurk, as it’s known, is an online marketplace where human workers (called “turkers”) can earn small amounts of money for completing individual “human intelligence tasks” (HITs)–like taking a survey and connecting it with your personal Facebook profile. Kogan and Chancellor also turned to the survey platform Qualtrics, spending around $800,000 to pay users who took their quizzes (and turned over their data), according to the New York Times.

Generally, the tasks offered to pay turkers $1 to $2 apiece once they completed the quiz and handed over access to their Facebook data. Technically, neither MTurk nor Qualtrics allows integrations that let surveys connect to respondents’ Facebook data. It’s likely, however, that Kogan recruited users on these platforms and then sent them a link to the external Facebook app, which then connected him with Facebook’s Open API.

Attached to one of the tasks it sent out in 2014, Global Science Research and Cambridge Analytica added a few specific requirements.

First, GSR and Cambridge Analytica were only interested in Americans. Second, “turkers” wouldn’t be paid until they authorized the Facebook app to “…give us permission to download some data from your Facebook. We will download (a) some of your demographic data, (b) your likes, (c) your friend’s list, (d) whether each of your friends knows one another, (e) the likes of your friends and their demographics, and (f) some of your private messages…” (Research shows the average Facebook user then had about 340 friends.)

Not long after the surveys started to appear, a few of the “turkers” began to realize that the Global Science Research app was taking advantage of Facebook’s gray data supply chain. “Someone can learn everything about you by looking at hundreds of pics, messages, friends, and likes,” warned one “turker.”

MTurk’s acceptable use policy expressly forbids this. Prohibited activities include:

- “Collecting personally identifiable information (e.g., don’t ask Workers for their email address or phone number)”

- “Advertising or marketing activities, including HITs requiring registration at another website or group”

- “Posting HITs on behalf of third parties without our prior written consent”

On Turkopticon, Kogan has more than a hundred reviews for various tasks, posted both by his account, from at least February 2014 to May 2014, and by his company’s account, from June 2014 to December 2015.

But in February 2014 and again in May 2014, a flurry of negative reviews reported that Kogan had violated the Amazon Turk Terms of Service. In May 2014 one user wrote:

After 15 minutes of working on the survey –answering all the questions and then providing my demographic data — the survey then asks to access my Facebook account by having me log in collecting personal identifiable information… This is against AMT policy, which includes:

“collecting personal identifiable information” as an example of a prohibited activity.

Also, the survey was carelessly done, which makes me believe it’s all a setup to collect information about a person for really cheap.

At least nine people on the message board reported Kogan and Global Science Research for violating Amazon’s rules. But he continued posting various research surveys until December 2015, when Amazon said it banned him from the platform.

After recent reporting by The Guardian and The Times, Facebook banned Kogan and Cambridge Analytica from its platform pending an investigation. The company said Kogan previously claimed that the data his app was scraping was being used for academic purposes.

Kogan, who has agreed to an audit, has said that Facebook “at no point raised any concerns at all” about the app or an updated description of it that detailed its commercial, rather than academic, use. He also said that when Facebook asked him to delete the data in 2015, he did. Kogan told the BBC last week that he was “being basically used as a scapegoat by both Facebook and Cambridge Analytica.”

Offloading Responsibility

Rochelle LaPlante, an MTurk worker and advocate, said the scandal highlighted issues with the way Amazon addressed abuse on its platform. Amazon, she said, doesn’t thoroughly vet the people who recruit turkers, instead relying on workers like her to report requesters whose tasks violate the platform’s terms of service.

“This puts responsibility onto individual workers to be familiar enough with the Terms of Service that the platform requesters must agree to,” she told Fast Company over email. “It relies on workers to know what to report and when, and to take on the labor of sending these reports to Amazon.”


What’s more, says LaPlante, Amazon rarely gives Turkers feedback about reports they have submitted or follows up on them in any way, “so we never know if our reports are read or if action is taken or how quickly (or not) any of that happens.” With regard to Kogan, LaPlante wonders why he was able to make multiple MTurk accounts and continue posting requests for work even after he was initially terminated.

How many others, like Kogan, have used these freelancer platforms for this kind of participation—and this kind of data harvesting—in exchange for a small compensation? One user on the subreddit MTurk writes about Cambridge Analytica, “This firm definitely isn’t unique and I’m sure there are worse abusers that will never get noticed.”

LaPlante says there are many tasks on MTurk that ask for people to log into Facebook, or something similar. And it’s difficult to know exactly where that data is going.

“These are clear violations of the MTurk Terms of Service and we report them to Amazon when we see them. But again, we have no way on the worker side to know if Amazon ever takes action against those,” she says. “If the researcher is suspended for a TOS violation, it’s quite possible the researcher still gets their project completed before Amazon gets around to taking a look at the reports, since popular HITs are often done within a few hours (or less).”

LaPlante said the company needs to be more transparent, echoing calls increasingly heard around Silicon Valley and the data industry. “I do wonder why we have yet to see a recent statement from Amazon about their involvement,” says LaPlante.

There are many questions that still surround the Facebook-Cambridge Analytica controversy, and many lessons too. Among them: If tech companies can’t police themselves, they must share more of their internal data with researchers, journalists, and the public. It’s a demand that people have been making of Facebook and other companies for years, with varying and mostly dismal success. If they want to maintain the integrity of their platforms–and their users’ trust–any company that helps to amplify the misuse of our data will need to find ways to turn over some of their own data too.


With additional reporting by Joel Winston.
