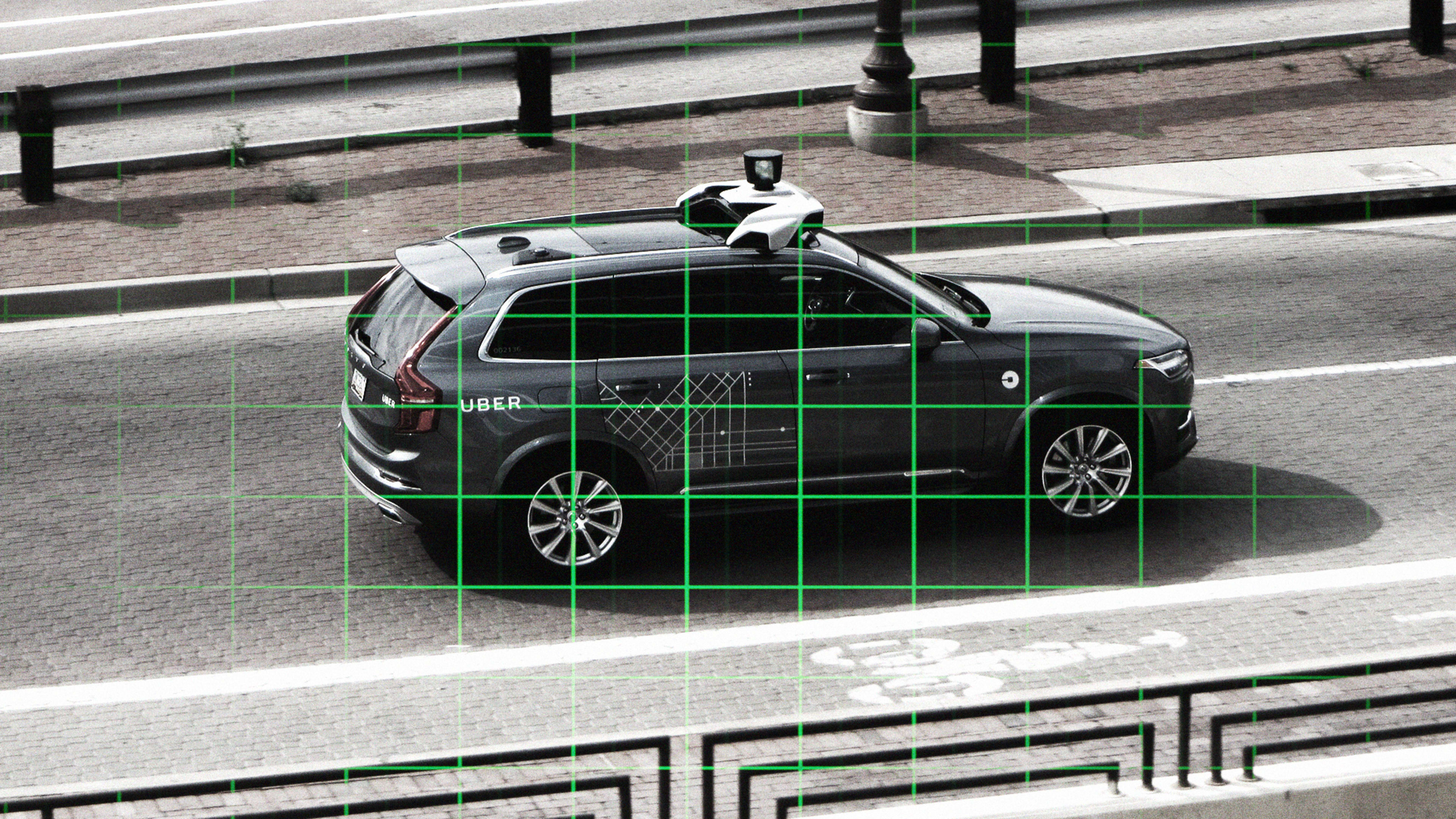

The self-driving future may be closer than you think. There are already plenty of autonomous cars cruising the streets in California, Michigan, Florida, Arizona, Nevada, and Massachusetts. And those fleets are poised to grow exponentially, with Waymo, Uber, General Motors, and others promising to have tens of thousands of them on the road by 2020. These phantom rides have been largely greeted with excited curiosity. But in the wake of a high-profile death in Arizona, new questions are being raised about their safety, and both techies and regulators are being pressured to come up with some answers.

On Sunday, March 18, a woman named Elaine Herzberg was wheeling a bicycle across a two-lane road known as Mill Avenue in Tempe, Arizona, when she was struck by one of Uber’s self-driving cars. She was taken to the hospital but died of her injuries. The tragic accident has many people asking what went wrong and who is responsible.

NTSB investigators in Tempe, Arizona, examine the Uber vehicle involved in Sunday’s fatal accident. pic.twitter.com/Zoj4GrnxCT

— NTSB Newsroom (@NTSB_Newsroom) March 20, 2018

“Who will be charged in her death, this is why I’m against these,” remarked a Twitter user named Cha’s Dad shortly after the incident made headlines. He was one of many outraged people demanding that someone take responsibility for her death. Much is still unknown about the accident, and Uber is cooperating with police as they continue to investigate. But there are several points of confusion. For instance, the accident happened late at night, but darkness should not have stopped the car’s sensors from detecting Herzberg, since Lidar emits its own laser pulses rather than relying on ambient light. Velodyne, the company that makes the Lidar sensor used in Uber’s cars, was quick to tell Bloomberg that its sensor does not decide whether to stop the vehicle. Another company, Aptiv, which supplies advanced driver-assistance systems for Volvo, also deflected responsibility, saying its technology had been disabled at the time of the accident.

https://twitter.com/boingboingbbeep/status/975793469514973184

The law is murky when it comes to who is ultimately responsible in the event of a self-driving accident. In general, there are few rules governing how self-driving cars should be rolled out onto public streets or what happens when they fail. Nor have regulators required companies to provide insight into how their vehicles think and make decisions.

Instead, they’ve taken an extremely light touch when it comes to creating rules for self-driving startups. Last fall, the National Highway Traffic Safety Administration issued “A Vision for Safety.” The 36-page report is a paean to open markets, encouraging state governments to “support industry innovation” by becoming more “nimble” in order to keep up with the speed of technological change. The voluntary guidance stays largely nonspecific and essentially tells states not to get in the way.

As a result, states are largely left to come up with their own rules for how self-driving cars should be validated and introduced to public roads. That can be problematic, because it creates a gulf in standards between states. California, for example, requires companies and individuals operating autonomous vehicles on its streets to obtain a permit and report certain data, such as collisions and disengagements. Arizona, by comparison, is among the most permissive states when it comes to self-driving technology, though it has since barred Uber from testing self-driving vehicles there.

In 2015, Arizona Governor Doug Ducey issued the state’s first rules for autonomous cars. They called for an oversight committee for self-driving activity and required self-driving cars to be insured and to carry a safety driver with a driver’s license, someone who could take the wheel if needed. In the weeks before Uber’s accident in Tempe, Ducey updated those rules in an executive order. The state now asks companies operating self-driving vehicles with no one in the driver’s seat to have a system in place so that, if the car fails, it can reach a “minimal risk condition.” The order also directs the state Department of Transportation to develop a protocol for how law enforcement should interact with a self-driving car in the event of an accident, which the agency says is still in development. It’s unclear how the state plans to verify that these criteria are met.

The current administration’s aversion to regulation is one reason the federal government has been lax about rulemaking; another is that the process for building safe cars in general is well established. Manufacturers are responsible for designing and testing their own vehicles against safety standards set by government agencies, and, of course, individual cars have to pass certain mechanical safety and emissions tests every year. Plus, the driver is a built-in fail-safe. This is true even in the case of a software glitch. “There are bugs in software of the most basic things in the car,” says Nathan Aschbacher, CTO of PolySync, a self-driving car startup. “The way that is mitigated is, at the end of the day, you have a human behind the wheel.”

Tesla has been able to roll out its Autopilot feature, a sort of faux self-driving experience, and update it over time, because there’s a human in the car who can take the wheel in an emergency. Even the most recent iteration, which promises to keep pace with traffic and change lanes as necessary, comes with this disclaimer: “Autopilot should still be considered a driver’s assistance feature with the driver responsible for remaining in control of the car at all times.”

Fully self-driving cars will change that paradigm.

What Went Wrong In Tempe

There are inherent difficulties in making a human the backup to a car that drives itself. Once people have grown used to riding along passively, they have a hard time stepping back into the role of driver. According to a study conducted last year, people also need time to adapt to different kinds of driving conditions and behavior.

In the case of Uber’s accident in Tempe, there was a safety driver monitoring the car as it drove. A newly released video of the accident appears to show the driver looking down rather than at the road, though the footage is inconclusive. It does show that the victim, who was walking across the road, wasn’t visible on camera until the car was within striking distance. Investigators are still trying to determine what went wrong, but the incident is an opportunity to examine more closely what sorts of tests self-driving cars should have to pass before being allowed onto public roads.

Companies working on self-driving cars are intent on eliminating the driver, the longstanding safety mechanism. Waymo is currently testing cars with empty driver’s seats in Arizona. California recently passed rules allowing companies to test self-driving cars that drive around like drones, with no safety driver up front. General Motors has announced it will produce cars without steering wheels or brake pedals as early as 2019. Roughly 40,000 people in the U.S. die in car crashes every year, notes Aschbacher. “Once you cede control, what will you accept as safe enough?”

This is a big question among those working on the future of mobility. Many advocates of self-driving technology say these cars will reduce vehicle-related deaths. But while the technology may reduce driver error, it may also introduce a whole new spectrum of problems, none of which is currently regulated.

Fears Of A “Flash Crash” In The Autonomy Space

Algorithms have already taken over from humans in other fields, and one example offers some insight into what can go wrong. In high-frequency trading, algorithms can trigger what’s called a “flash crash.” You may remember the one in 2010, when the stock market plunged before quickly rebounding. In these cases, money can be lost, but banks and bankers are on the hook for sorting out the imbalances afterward. “If there’s a flash crash in this autonomy space,” says PolySync CPO David Sosnow, describing a scenario in which a software or hardware bug causes every car of a certain model to behave erratically, “there is no reset button the following day; likely a lot of people have died or been seriously injured.”

Some people in the industry are calling for software safety standards. “Every 1,000 lines of code in a car contains an average of 50 errors,” says Zohar Fox, CEO and cofounder of Aurora Labs. “Standard QA testing misses about 15% of those errors, far too high a number given the growing importance of software reliability.”

The International Organization for Standardization’s guidelines for electrical systems in cars are currently being adapted for self-driving technology. Historically, the standard has set rules for how automotive components should be tested, both individually and once integrated into a vehicle. As cars have become more computerized, the standard has come to cover some software, though the most complex code it has dealt with comes nowhere near the intricacy of a self-driving car’s.

“The difficulty they are facing is in repairing system errors once they are on the road already and in detecting system errors before they result in malfunctions,” says Fox. But system errors aren’t the only challenge self-driving cars will have to overcome to be safe. They’ll also have to become proficient at understanding and responding to the kinds of cues human drivers signal to one another on the road.

At their most competent, they’ll have to be able to interact safely with humans both inside and outside the car. It’s the latter that presents a bigger challenge. “The autonomous vehicle or the self-driving vehicle could be safe, but what if somebody else does something?” asks Srikanth Saripalli, an

somewhere that everybody can check,” he says. It’s through transparency, he believes, that self-driving cars will ultimately be made safer, or at the very least become something everyone can better understand.

“Before autonomous cars are on the road, everyone should know how they’ll respond in unexpected situations,” writes Saripalli.
