There are hundreds of millions, if not billions, of passionate sports fans around the world. And yet, according to Intel, no more than 1% of those people will ever get to see their favorite team in person.

That massive experience gap is at the center of Intel’s ambitious live-sports virtual reality efforts, a series of initiatives that over the next couple of years should solidify the company’s “as if you’re there” philosophy about sports, said Jeff Hopper, the business strategy lead at Intel Sports Group.

In the short term, those efforts will focus on single-user, individual experiences. But over time, Intel plans to make it possible for fans to be right in the middle of their favorite team's action, create personalized 3D highlights, and share them with friends.

This week, Intel and Major League Baseball announced a three-year partnership to live-stream one baseball game a week in VR. That followed a similar pact between the NBA and NextVR that kicked off at the beginning of the 2016-2017 basketball season.

Fans watching the games—via Intel’s True VR app on Samsung’s Gear VR headset—will be able to choose from multiple camera angles around a stadium, each of which will give them a wide, immersive view of the action.

https://youtu.be/kD_FWEiDz_E

“We’re excited to work with somebody like Intel, [a] leader in the sports technology space,” Kenny Gersh, executive vice president of business at Major League Baseball Advanced Media, told Fast Company, “to define the VR experience for baseball [and] present the game in a different way to our fans.”

While analysts have predicted that virtual reality will be a $38 billion industry by 2026, it's not yet a mainstream technology. Companies like Samsung have sold millions of VR headsets, but data suggests few people use them regularly. That's partly why Gersh said he isn't certain VR will succeed as a mainstream technology. Still, Major League Baseball wants to be part of shaping what VR could become. "I'd rather be there and help shape it," he said, "rather than ignore it, and if it does take off . . . we're not there."

That’s where companies like Intel are playing a vital role.

The Santa Clara, California, tech giant is, of course, best known as a chip maker. But its portfolio goes far beyond powering computers and other devices. Recently, it set out to become a leader in VR, acquiring two companies that it hopes will solidify that position. First, it bought Voke, a developer of the VR cameras and technology that are now at the heart of the Major League Baseball project. It also purchased Replay Technology, which has built a system for panoramic, 360-degree capture and playback of sports highlights.

https://youtu.be/ZzrLFHd3q3w

Those two deals together could make VR sports a billion-dollar business for Intel, according to Axios, and will be “one of the big pillars for Intel for the future,” said Sankar Jayaram, the CTO of Intel Sports Group.

What's clear is that Intel sees VR as a nascent technology. It already shows early promise for transforming a sports-viewing experience that, the company says, hasn't fundamentally changed in 75 years, and Intel expects it to eventually become much more: a way to step into the action and see plays in 360 degrees, almost as if from the perspective of, say, Tom Brady.

In the short term, those views are going to be curated by Intel, its league partners, and broadcasters. But over time, the company hopes, it will be able to let fans personalize how they see the most important plays by their favorite teams, and eventually, even be able to “walk around” inside live action.

To be sure, the latter goal isn’t coming to a VR headset near you anytime soon. That’s going to take at least two years, if not more, Intel says. It’s coming, though, and when it does, fans should be able to experience sports in the way we’ve all wanted to our whole lives—being on the field, in the huddle, able to see how everyone’s moving, and following the ball as it moves. All without having to take a hit from 300-pound linebackers or 7-foot-tall centers, or having to actually try to hit a 95-mile-per-hour fastball.

Stereoscopic Now, Volumetric In The Future

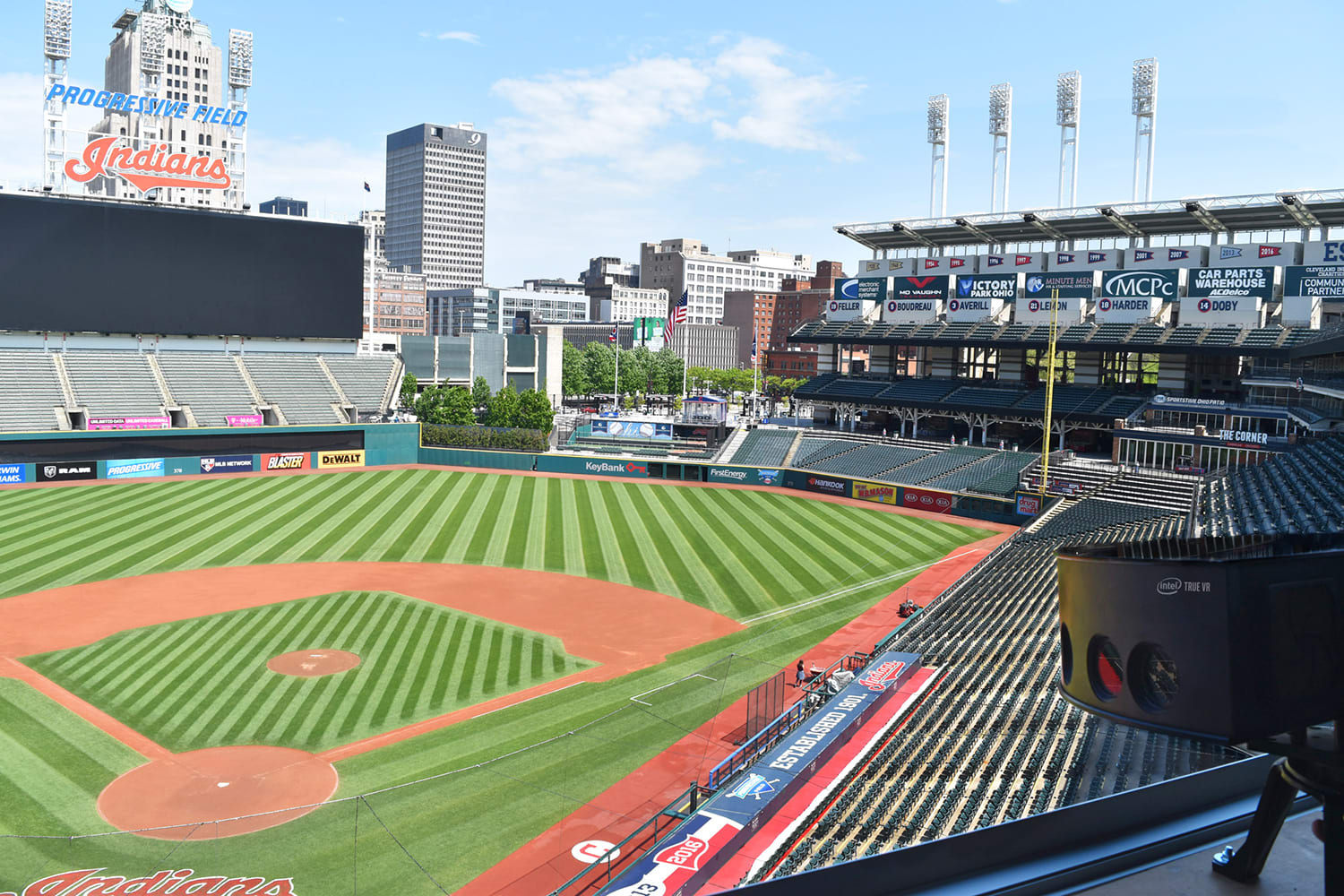

When Major League Baseball begins live-streaming games in VR with next Tuesday’s Cleveland Indians versus Colorado Rockies matchup, fans with a Gear VR will be able to select from four different camera views that place them in different parts of Denver’s Coors Field. They’ll also be able to see up-to-the-moment player and team stats, and listen to announcers tailoring their commentary for VR.

Gersh noted that what fans see when they immerse themselves in the first live MLB VR streams next week will likely have little in common with what they see by the end of the three-year partnership with Intel, given that VR capture, processing, and distribution technology will almost certainly improve significantly over the next few years.

One likely improvement will come from eventually merging the stereoscopic technology from Intel's True VR group with the volumetric capture that came via the acquisition of Replay Technology.

Fans have already gotten a taste of the volumetric approach—during NBA games, and more recently the Super Bowl.

You’ve no doubt seen it on TV. The broadcast shows a replay of an exciting LeBron James dunk, when suddenly it pauses, rotates, and shows the paused play from 360 degrees. It seems like computer-generated magic. But it’s not.

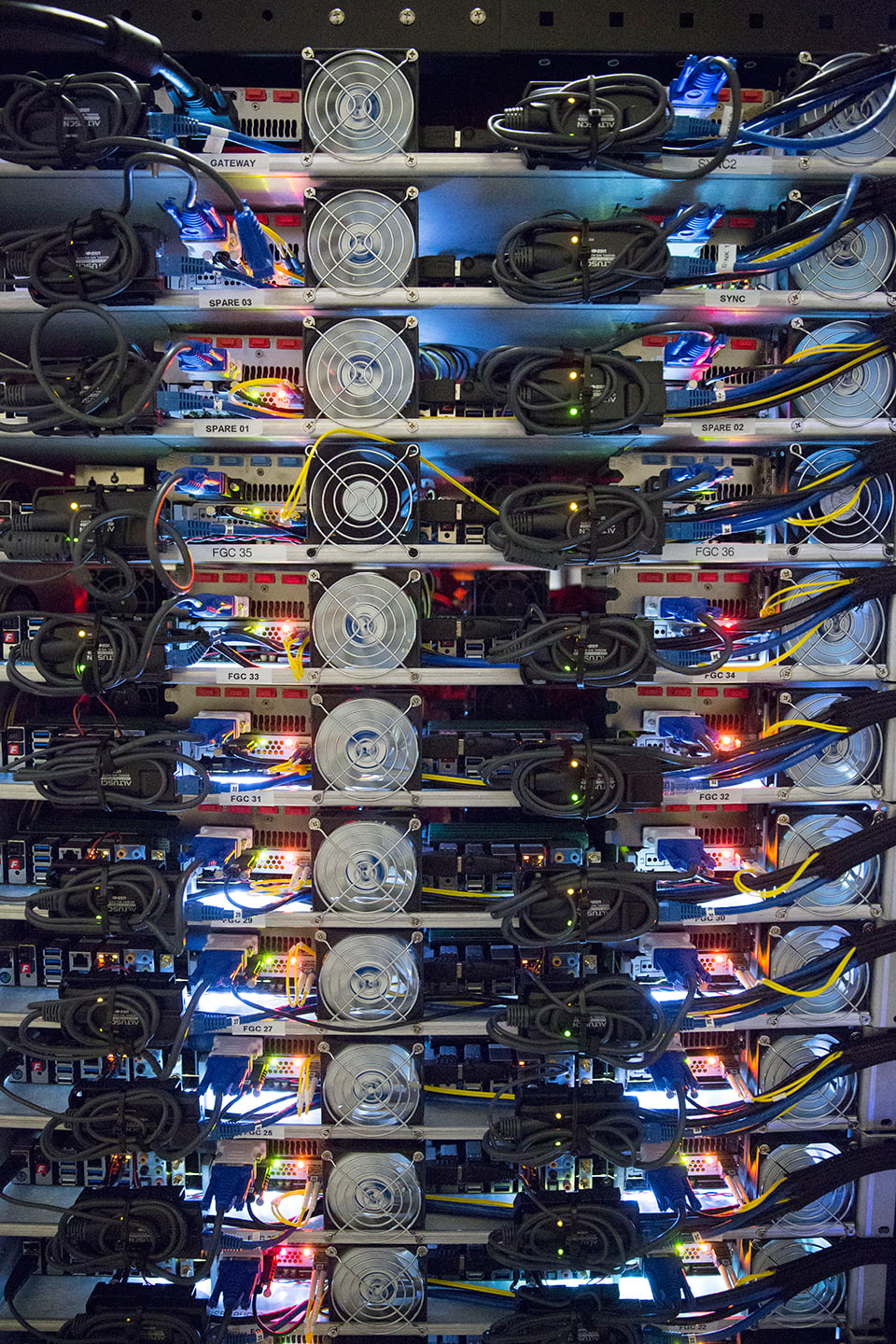

It's actually the output of more than two dozen 4K cameras mounted all around the arena, combined with algorithms that automatically stitch all the imagery together into that one amazing replay.

For now, the system can produce only those static replays, shown either during TV broadcasts or in curated highlights that could be available through a mobile app.

That’s just the beginning, though.

Starting this fall, the NFL will have this technology, called FreeD, installed in several stadiums, making it available to fans at games on JumboTrons or on mobile apps. Intel and the NFL have agreed to install the system at 12 stadiums, according to Preston Phillips, the director of business development for Intel Sports Group.

One of those venues is Levi's Stadium, the Santa Clara home of the San Francisco 49ers. There, Intel has installed 38 cameras with 5K resolution, which together capture terabytes of data, all of which is compiled into the futuristic replays.

The goal, according to Phillips? To eventually supplant the traditional broadcast experience, based on six static cameras, with a fully immersive, real-time VR viewing experience that could put fans right in the middle of the play.

Today, though, the technology is being used as a highlights product, he said. Fans at stadiums like Levi’s will see up to 11 of these clips a game—generally the “game-changing moments” that determine who wins or loses.

Leagues like the NFL will also be able to use those highlights however they want, for example by sending them out to fans the day after games.

The 10-to-15-second replays can be produced in less than a minute, thanks to fiber connections between the cameras and a control room in each stadium. There, two Intel technicians, the "pilot and navigator," work with the broadcasters to choose the plays they want to highlight and produce the clips. What fans see is essentially a virtual camera that shows the play from all angles.
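Intel hasn't described how its virtual camera is computed. As a rough illustration only, the sketch below assumes the play has already been reconstructed as a 3D scene and simply places a virtual camera at evenly spaced points on a circle around a spot on the field, aiming each position back at that spot. That is one simple way to "show the play from all angles." The names `CameraPose` and `orbit_path` and all parameters are hypothetical; this is not Intel's FreeD implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraPose:
    """A hypothetical virtual-camera position plus the heading it faces."""
    x: float
    y: float
    z: float
    yaw_deg: float  # horizontal heading, in degrees, pointing at the target


def orbit_path(target, radius, height, steps):
    """Return `steps` camera poses evenly spaced on a circle around `target`.

    Assumption: the scene is already reconstructed in 3D, so any viewpoint
    can be rendered; this only computes where the virtual camera goes.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    tx, ty, tz = target
    poses = []
    for i in range(steps):
        theta = 2 * math.pi * i / steps          # angle around the target
        x = tx + radius * math.cos(theta)
        y = ty + radius * math.sin(theta)
        # Aim the camera from its position back toward the target.
        yaw = math.degrees(math.atan2(ty - y, tx - x))
        poses.append(CameraPose(x, y, tz + height, yaw))
    return poses


if __name__ == "__main__":
    for pose in orbit_path((0.0, 0.0, 0.0), radius=10.0, height=3.0, steps=4):
        print(pose)
```

For example, `orbit_path((0, 0, 0), radius=10, height=3, steps=36)` would yield one pose every 10 degrees around a point on the field; a renderer would then draw the reconstructed scene from each pose in turn.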

While the highlights are produced at stadiums today, the goal is to eventually do the processing in the cloud. That would mean that Intel, the leagues, or the broadcasters could process clips from multiple venues in a single location as they scale up.

That all sounds impressive, but there's more to come: within a year or two, fans should be able to control these highlights themselves on their mobile devices. They'll be able to choose the clips they want to watch, and eventually, even control the camera views themselves.

They’ll be able to share the clips with friends, and, Phillips said, fans will be able to “be Tom Brady as you watch the game.”

That will help fans see how their favorite players explode off the line of scrimmage or dunk the ball, and get a much better understanding of how the game works.

“This is going to bring me much, much closer to [sports] than ever before,” Hopper said. “I think that value, these experiences, you might hear them referred to as ‘fan engagement,’ but for me, it’s far more than that. It’s immersion into the passion I have for my sport, my team, my players.”
