Five years ago, physicist Austin Russell saw better laser sensors as essential to making self-driving cars a reality. That was early in the autonomous vehicle industry’s history, and early in Russell’s career. Actually, he was still in high school at the time.

Now 22–and having skipped college–Russell is founder and CEO of Luminar, a startup backed by PayPal and Palantir billionaire Peter Thiel (among others) that just formally unveiled itself and its new laser scanner for self-driving cars. The startup is going against big players like Velodyne and Quanergy in the market for lidar technology, which is something like radar but with infrared laser beams instead of radio waves.

Along with the scanner, Luminar announced $36 million in funding that will allow it to open a factory in Florida to build 10,000 scanners this year. They will go to automakers and other tech companies for autonomous car research, though Russell won’t say who these “strategic partners” are.

Lidar is a prerequisite for self-driving cars, says Russell, as his system can perceive distances down to a few millimeters and works in anything from bright sunlight to pitch black conditions, things that a standard camera can’t do. The lasers in Luminar’s sensor scan in front of and sometimes behind a vehicle out to at least 200 meters (about 650 feet); the time it takes the signal to bounce back off an object is used to calculate distance.
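The time-of-flight arithmetic is simple enough to sketch in a few lines of Python. This is only an illustration of the general principle, not Luminar's implementation: the function names are hypothetical, and it assumes the pulse travels at the vacuum speed of light (air slows it by a negligible fraction).

```python
# Speed of light in vacuum, in meters per second (exact by SI definition).
# Assumption: the small slowdown in air is ignored for this sketch.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time.

    The pulse travels to the object and back, so the one-way distance
    is half of the total path length.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Round-trip time for an object at the 200-meter range cited above.
    t = round_trip_for_distance(200.0)
    print(f"round trip at 200 m: {t * 1e6:.3f} microseconds")
    print(f"recovered distance: {distance_from_round_trip(t):.1f} m")
```

By this arithmetic, a pulse reflected from 200 meters away returns in roughly 1.33 microseconds, and a one-millimeter difference in distance shifts the return time by only about 6.7 picoseconds, which hints at how precise the receiver's timing has to be.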

Lidar technology goes back to the 1960s. Luminar hasn’t invented anything fundamentally new, but it claims to have gotten performance up and costs down by custom-building every aspect of the system. “We’ve built this sensor from the chip-level up,” says Russell. “We’re making our own lasers, our own receivers, our own scanning mechanisms, our own processing electronics—all from scratch.”

Within a few seconds of talking to Russell, I begin to forget about his age. (He just turned 22 on March 14, Pi Day.) He has a level of composure well beyond the self-important arrogance of peers who just landed entry-level engineering jobs in the Valley. Physical stature—he stands 6-foot, 4-inches tall—a deep voice, and a full reddish beard lend a smidgen of gravitas. Russell betrays his youth, though, with occasional fits of goofy giggles during our conversation. For instance, he talks about the danger of lower-end lidar technology, “if they increase the power any more or try to get more range or resolution out of it, they’ll start frying people’s eyes—hahahahahahaha.”

Squeezing Into Cars—And Budgets

Russell’s system uses an infrared wavelength of 1550 nanometers instead of the 905nm wavelength common in less-expensive systems (all the way down to range finders used by golfers). Light at 905nm can irritate people’s eyes if the signal is too strong. The eye can’t even focus a 1550nm wavelength, so the power can be much higher, 40x greater in Luminar’s case, without posing a hazard. A silicon-based receiver can’t pick up the longer 1550nm wavelength, so Luminar needs a pricier material called indium gallium arsenide (InGaAs).

Again, none of this is new, per se. Luminar’s claimed advantage is in getting the price for advanced lidar way down. Russell wouldn’t utter a word about what his system will cost, but an investigation by Bloomberg said Luminar is aiming for under $1,000, vs. up to tens of thousands for current top-of-the-line systems.

Cost is especially important because a fully autonomous car needs at least four lidar sensors, one at each corner of the vehicle, to provide a 360-degree view, says Russell. Unlike the big drums atop research cars like those designed by Alphabet’s Waymo division–which is currently suing Uber for allegedly stealing its lidar technology–Luminar’s sensors are just a bit bigger than a Nintendo Wii and fit into the car’s bumper. “Long term we are making sure this can be efficiently made and affordable to be [installed] on everything from a Honda Fit to a Bentley,” says Russell.

A car that uses lidar just to assist the driver in spotting obstacles or other dangerous conditions can get away with one scanner in the front bumper—a setup Russell shows me on two Luminar test cars, a Tesla Model S and a BMW i3.

A Clear View

Like any self-respecting startup, Luminar claims that it has the greatest technology out there—and it has attracted a lot of money from people who feel the same. The numbers are impressive. The system has a range of over 200 meters, versus, for instance, 120m for Velodyne’s hulking high-end HDL-64E unit. Quanergy’s S3 sensor is much smaller, similar to Luminar’s, but it can measure just a half million location points per second. Russell says that Luminar’s can measure “millions” per second.

Most of the talk around autonomous cars has been about artificial intelligence, with chip giants like Nvidia and Intel releasing compact supercomputers that are being tested by auto companies: Audi, Mercedes-Benz, and Tesla with Nvidia, and BMW with Intel. Luminar is making the case for providing cleaner data for the AI to munch on. “Because of such poor-quality data that the processing has to deal with, a lot of [the work] has shifted over to processing,” says Russell.

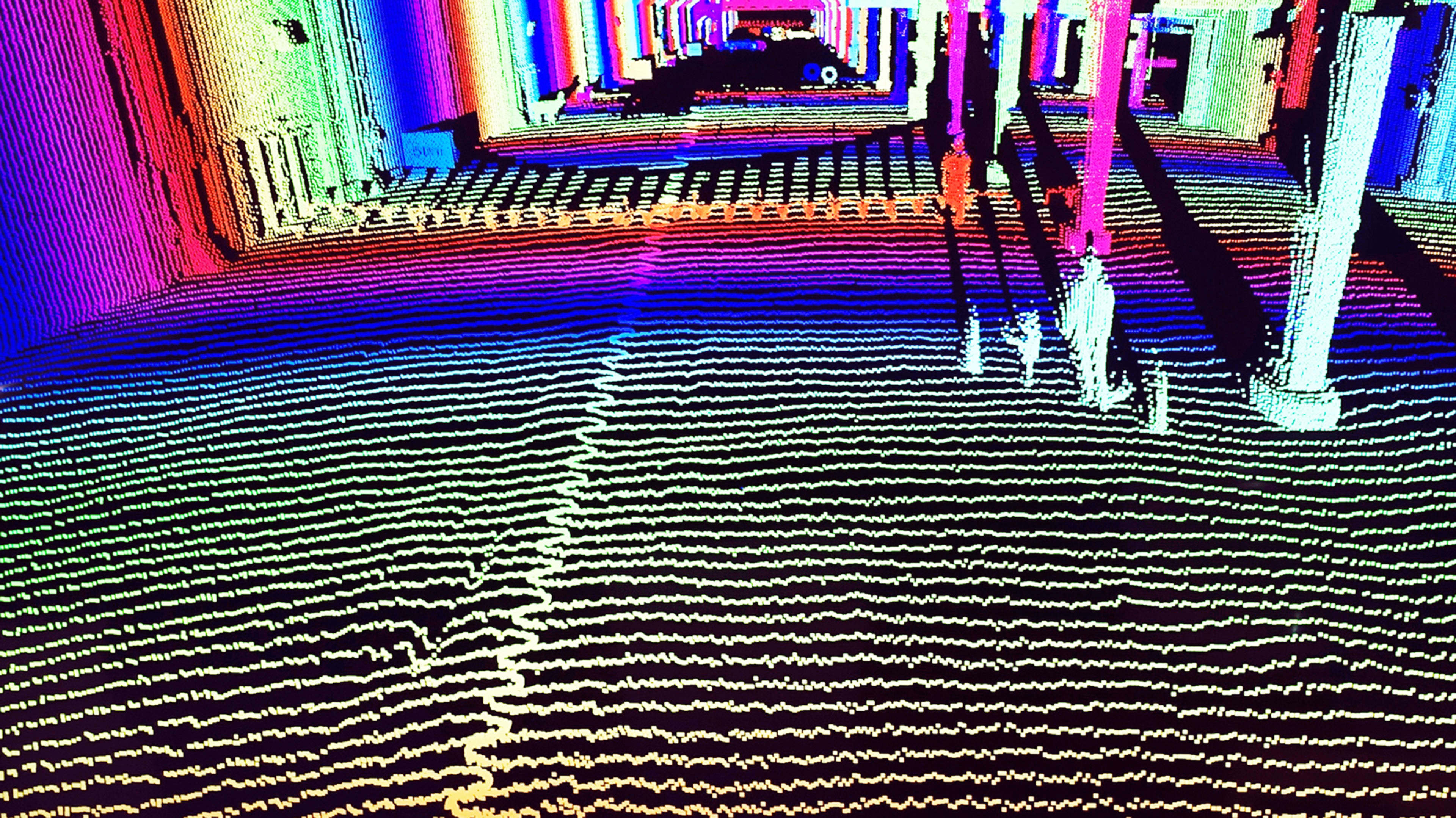

To impress me, Russell and his chief technology officer Jason Eichenholz start with a simulation of how they claim a lower-quality but still expensive lidar system views a cavernous, 235-meter-long stretch of warehouse space that runs along Pier 35 in downtown San Francisco. On a large LCD screen, sporadic wavy lines, like those on a topographic map, form a series of concentric arcs propagating off to the horizon. The lines are color-coded to indicate increasing distances, one of many visual niceties for the human viewers that the computer itself doesn’t need. Their colleague is riding a bicycle back and forth about 20 meters ahead of me. On the lidar screen, he appears as a few errant squiggles among those topographic lines.

Switching the system into high gear, the on-screen image changes to a detailed psychedelic rendering of the warehouse. I can make out the posts and beams holding up the roof, clear outlines of cars parked inside, and a cartoonish rendering of the bicyclist, with even the spokes of the wheels sometimes visible. That makes the computer’s job easier, says Eichenholz. “It’s less computationally intensive to figure out it’s a bike when it looks like a bike, versus, it’s just a bunch of dots,” he says.

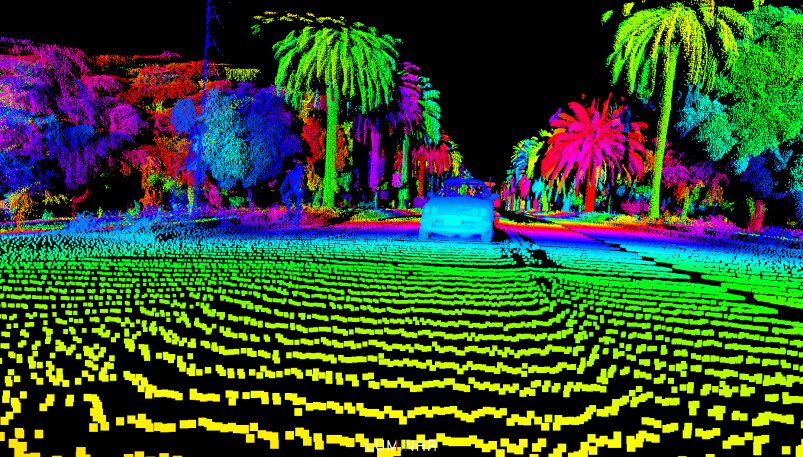

Later Russell shows me a similar before-and-after comparison on a nighttime drive along the San Francisco waterfront. In high-res mode, I can make out clear outlines of cars, people, and the palm trees along the Embarcadero boulevard as if they were all wrapped in multicolor glowing EL wire for some Burning Man exhibit.

From an initial run of 10,000 sensors this year, Russell hopes to gear up quickly. “We’ll start looking at making hundreds of thousands or millions of units starting in early 2018,” he says. That’s all in line with ambitious promises by carmakers to have autonomous vehicles on the road by 2021. “They are just depending on the fact that something can come along to solve their problems,” he says, “hopefully we can help save the day on that.”
